
Key Takeaways

ElevenLabs is an AI audio platform that generates human-like speech from text, offering voice cloning, multilingual support, and conversational AI agents. The platform serves over 1 million users globally with multiple AI models optimized for different use cases—from ultra-low latency conversational agents (75ms with Flash v2.5) to emotionally expressive voiceovers (Eleven v3).

- Best for: Professional content creators, audiobook production, enterprise customer service

- Pricing reality: Starts at $5/month, but budget 2-3x for production use due to regenerations

- Key limitation: High-quality voice cloning demands professionally recorded, studio-grade source audio

- Top alternatives: Cartesia (lower latency), Kokoro TTS (open source), Uberduck (music focus)

What Is ElevenLabs and How Does It Work?

ElevenLabs is a generative AI voice platform founded in 2022 that converts text into natural-sounding speech using deep learning models trained on human voice patterns. Unlike traditional text-to-speech systems that sound robotic, ElevenLabs analyzes contextual meaning, emotional tone, and linguistic patterns to produce voices indistinguishable from human recordings.

The technology works through three core processes:

1. Text Analysis: The AI parses input text to understand context, punctuation cues, and intended emotional delivery

2. Voice Synthesis: Neural networks generate audio waveforms matching the selected voice characteristics

3. Audio Rendering: Post-processing applies prosody, intonation, and timing for natural speech patterns

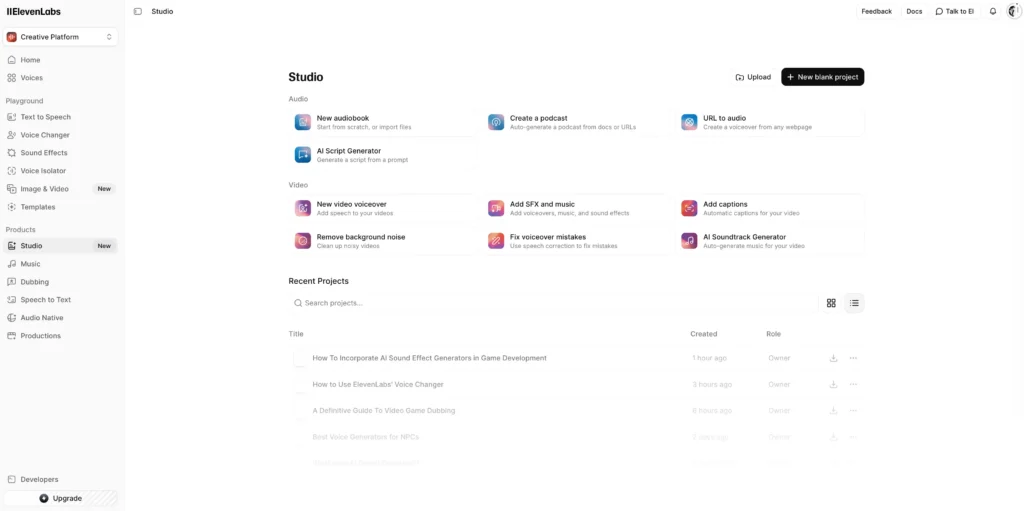

ElevenLabs Core Features: What You Can Actually Do

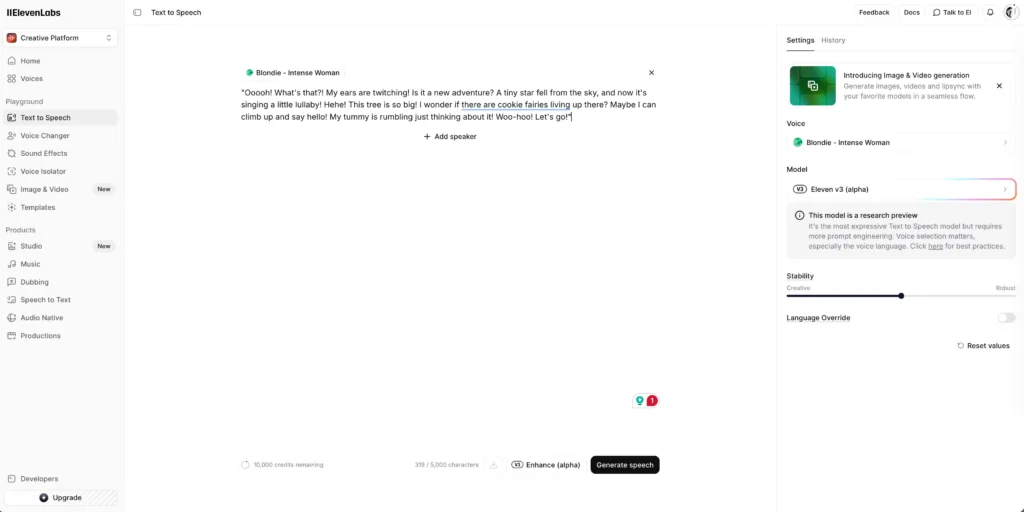

Text to Speech: The Foundation

ElevenLabs text to speech transforms written content into spoken audio with three model options tailored to different needs. The platform supports 29+ languages including English, Spanish, French, German, Portuguese, Italian, Hindi, and more.

Model Comparison:

- Eleven Flash v2.5: 75ms latency for real-time conversational AI, optimized for speed over expressiveness

- Eleven Multilingual v2: Balanced performance across 29 languages with consistent voice quality

- Eleven v3 (Alpha): Most expressive model with emotional depth, advanced prosody, and contextual understanding

The text-to-speech API processes up to 5,000 characters per request with response times under 2 seconds for standard requests.
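Because each request is capped, long scripts have to be split before synthesis. A minimal Python sketch of sentence-aware chunking follows. It assumes the 5,000-character figure quoted above (check the current limit for your chosen model before relying on it), and the name chunk_text is illustrative, not part of any ElevenLabs SDK.

```python
import re

# Assumed per-request cap, taken from the figure quoted above; limits can differ by model.
MAX_CHARS = 5_000


def chunk_text(text: str, max_chars: int = MAX_CHARS) -> list[str]:
    """Split text into pieces of at most max_chars, preferring sentence boundaries."""
    # Split after ".", "!" or "?" followed by whitespace; punctuation stays with its sentence.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: list[str] = []
    current = ""
    for sentence in sentences:
        # A single sentence longer than the cap is hard-split so no chunk exceeds it.
        while len(sentence) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:max_chars])
            sentence = sentence[max_chars:]
        candidate = f"{current} {sentence}" if current else sentence
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    script = "First sentence. Second sentence! Third one?"
    for piece in chunk_text(script, max_chars=32):
        print(len(piece), piece)
```

Each chunk can then be sent as its own request; keeping breaks at sentence ends avoids audible seams in the middle of a phrase.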

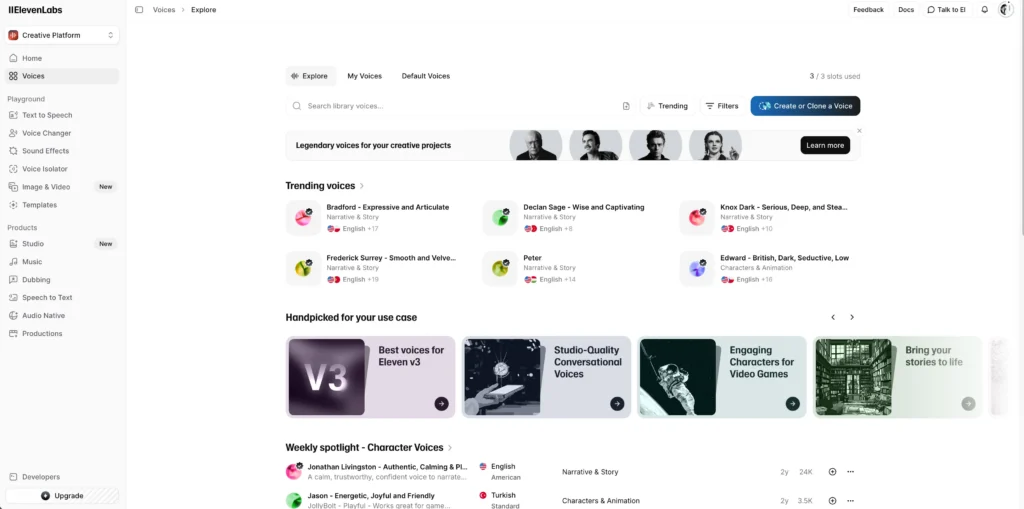

Voice Cloning: Creating Custom AI Voices

ElevenLabs voice cloning creates digital replicas of any voice from audio samples, with two tiers based on quality requirements.

Instant Voice Clone requires 1-5 minutes of audio and generates usable voices within minutes. This works adequately for internal projects but lacks the refinement needed for commercial production.

Professional Voice Clone demands 30+ minutes of high-quality audio recorded in controlled environments. The technical requirements include:

- Audio format: WAV or FLAC at 44.1kHz or 48kHz

- Bit depth: 24-bit minimum

- RMS level: -23dB to -18dB

- Background noise: Below -60dB

- Varied emotional range across samples

Professional clones achieve 95%+ similarity to source voices and maintain consistency across extended content.
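The measurable parts of these requirements can be checked before uploading. The Python sketch below uses only the standard library to report sample rate, bit depth, and RMS level for an integer PCM WAV file. It computes plain RMS relative to digital full scale (dBFS), which is an assumption about how the -23 dB to -18 dB range is measured, and it does not check the noise floor or emotional range. The function names are illustrative, and the pure-Python loop is slow on long files, so it suits spot checks of individual takes.

```python
import io
import math
import wave


def rms_dbfs(wav_bytes: bytes) -> float:
    """RMS level of an integer PCM WAV file in dBFS (0 dB = digital full scale)."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as wav:
        width = wav.getsampwidth()  # bytes per sample: 2 = 16-bit, 3 = 24-bit, 4 = 32-bit
        frames = wav.readframes(wav.getnframes())
    if width not in (2, 3, 4):
        raise ValueError(f"unsupported sample width: {8 * width}-bit")
    full_scale = float(1 << (8 * width - 1))
    count = len(frames) // width
    total = 0.0
    for i in range(0, count * width, width):
        # WAV stores samples little-endian and signed for widths above 8 bits.
        sample = int.from_bytes(frames[i:i + width], "little", signed=True)
        total += (sample / full_scale) ** 2
    if total == 0.0:
        return float("-inf")  # digital silence (or an empty file)
    return 10 * math.log10(total / count)  # 10*log10(mean square) == 20*log10(RMS)


def check_clone_sample(wav_bytes: bytes) -> list[str]:
    """Return a list of problems against the format and level targets listed above."""
    problems = []
    with wave.open(io.BytesIO(wav_bytes), "rb") as wav:
        if wav.getframerate() not in (44_100, 48_000):
            problems.append(f"sample rate {wav.getframerate()} Hz (want 44.1 or 48 kHz)")
        if wav.getsampwidth() < 3:
            problems.append(f"{8 * wav.getsampwidth()}-bit audio (want 24-bit or more)")
    level = rms_dbfs(wav_bytes)
    if not -23.0 <= level <= -18.0:
        problems.append(f"RMS {level:.1f} dBFS (want -23 to -18)")
    return problems
```

Run it on each take, for example check_clone_sample(Path("take_01.wav").read_bytes()); an empty list means the file passes these particular checks.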

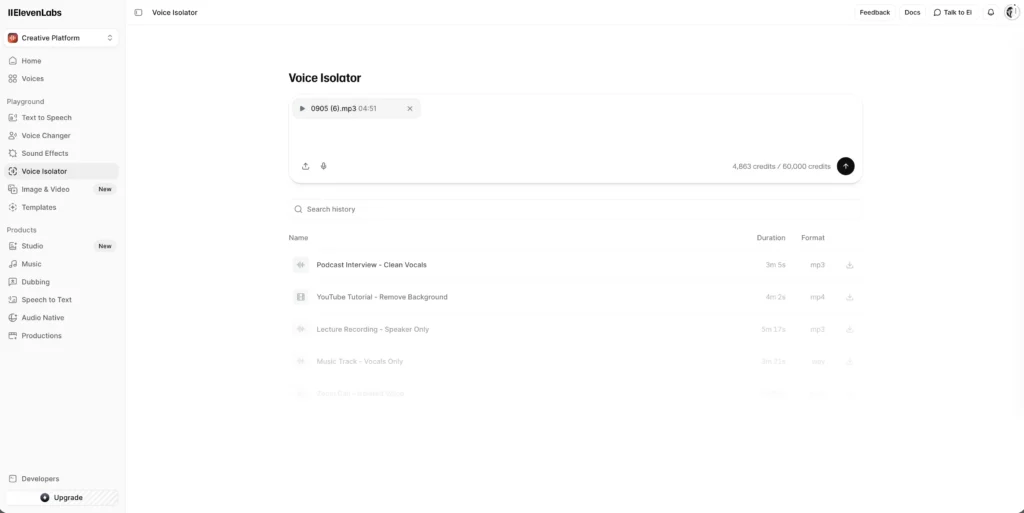

Voice Isolator: Audio Cleanup Technology

The ElevenLabs voice isolator removes background noise, reverb, and unwanted artifacts from recordings using AI-powered source separation. This feature proves invaluable for:

- Cleaning podcast recordings with ambient noise

- Removing room echo from video voiceovers

- Isolating dialogue from music or sound effects

- Preparing audio samples for voice cloning

The isolator processes audio files up to 2 hours long and supports multiple formats (MP3, WAV, M4A, FLAC).

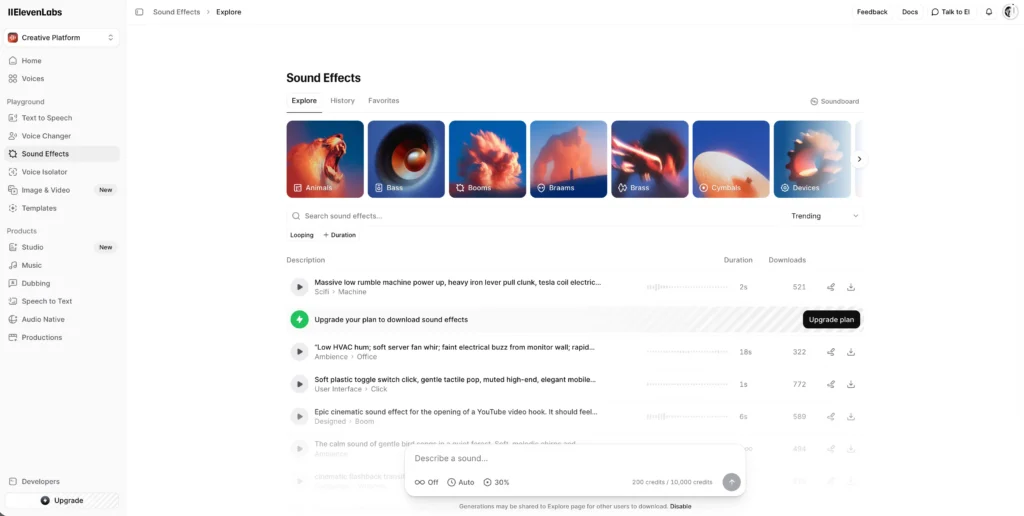

Sound Effects Generation

ElevenLabs sound effects generator creates custom audio from text descriptions, producing royalty-free sounds for media projects. Users can generate effects like:

- Environmental ambience (rain, forest, city traffic)

- Action sounds (explosions, footsteps, door creaks)

- Musical elements (drums, transitions, whooshes)

- Sci-fi and fantasy sounds (laser blasts, magic spells)

Sound effects are delivered in high-quality WAV format suitable for professional video production.

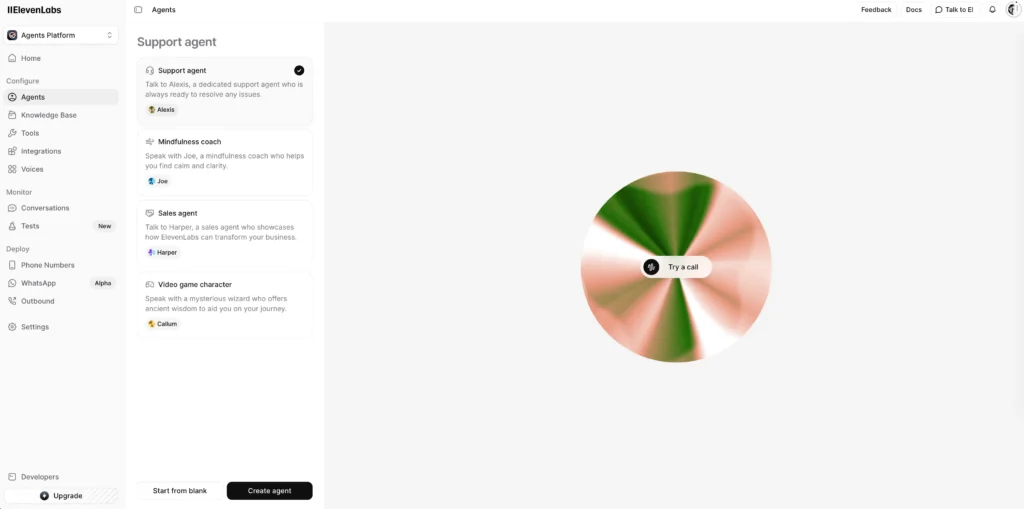

Conversational AI Agents

The Agents Platform enables developers to build voice-enabled AI assistants that handle customer interactions with natural conversation flow. Key capabilities include:

- Sub-second response times for natural dialogue

- Advanced turn-taking that detects conversation pauses

- Integration with any LLM (GPT-4, Claude, Gemini)

- Function calling for task automation

- Telephony integration for customer service calls

Agents support 31 languages and can handle complex multi-turn conversations with context awareness.

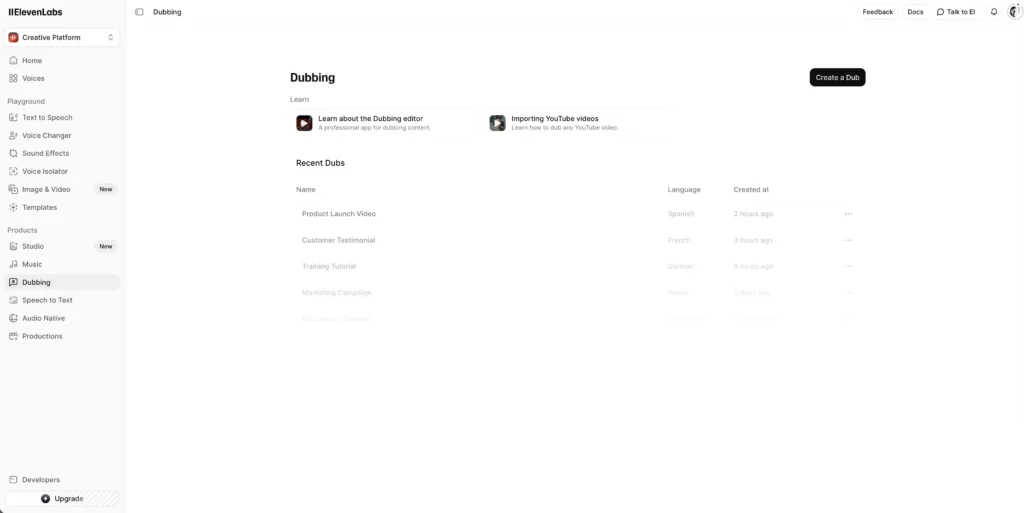

Dubbing Studio: Multilingual Translation

ElevenLabs dubbing translates video content into 30+ languages while preserving the original speaker’s voice characteristics. The automated dubbing workflow:

1. Takes an uploaded video (up to 5GB per file)

2. Detects and transcribes original speech

3. Translates transcript to target language

4. Generates new audio matching original voice

5. Syncs translated speech to video timing

For precise control, Dubbing Studio provides manual editing of timestamps, translations, and voice assignments.

Is ElevenLabs Free? Understanding the Pricing Structure

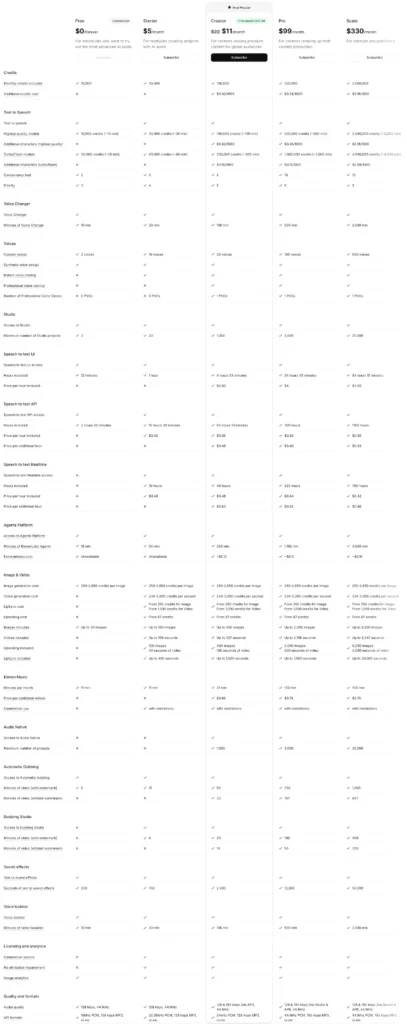

ElevenLabs offers a free tier with 10,000 characters monthly (roughly 10 minutes of audio), but serious usage requires paid plans starting at $5/month.

Free Plan Limitations

The free tier includes:

- 10,000 characters per month

- 3 custom voices

- Access to voice library

- Standard quality output

- Attribution required for commercial use

This suffices for testing but proves insufficient for regular content production.

Paid Plan Breakdown

Starter ($5/month):

- 30,000 characters (about 30 minutes of audio)

- Instant voice cloning

- Commercial license included

- No attribution required

Creator ($22/month, discounted to $11 for the first month):

- 100,000 characters (about 100 minutes of audio)

- Professional voice cloning

- Voice isolator access

- Projects and history

Pro ($99/month):

- 500,000 characters (about 8 hours of audio)

- Priority generation queue

- Advanced voice settings

- Usage analytics

Scale ($330/month):

- 2,000,000 characters

- Dedicated account management

- Custom voice development

- Enterprise SLA
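To compare plans on what they actually buy, it helps to convert characters into approximate audio time. The Python sketch below assumes roughly 1,000 characters of text per minute of speech (about 150 words per minute); real rates vary with the voice, pacing, and how much text needs regenerating, so treat the output as a rough guide rather than a quote.

```python
# Assumption: ~1,000 characters of text per minute of generated speech.
CHARS_PER_MINUTE = 1_000

# (monthly price in USD, characters included per month), from the plan list above.
# Creator is entered at its $22 regular price; $11 is the first-month discount.
PLANS = {
    "Starter": (5, 30_000),
    "Creator": (22, 100_000),
    "Pro": (99, 500_000),
    "Scale": (330, 2_000_000),
}


def plan_summary(price_usd: float, chars: int) -> tuple[float, float]:
    """Return (approximate minutes of audio, USD per minute) for one plan."""
    minutes = chars / CHARS_PER_MINUTE
    return minutes, price_usd / minutes


if __name__ == "__main__":
    for name, (price, chars) in PLANS.items():
        minutes, per_minute = plan_summary(price, chars)
        print(f"{name:<8} ~{minutes:>6.0f} min  ${per_minute:.3f} per minute")
```

Under these assumptions Starter works out to about $0.17 per minute, Creator $0.22, Pro about $0.20, and Scale $0.165, so the higher tiers mainly buy volume and features rather than a much cheaper minute.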

Hidden Cost Reality

The effective cost runs 2.2-2.8x advertised rates due to failed generations and necessary regenerations. Budget an additional 40-60% for:

- Audio editing software subscriptions

- Cloud storage for generated files

- Quality control time investment

- Professional microphone for voice cloning

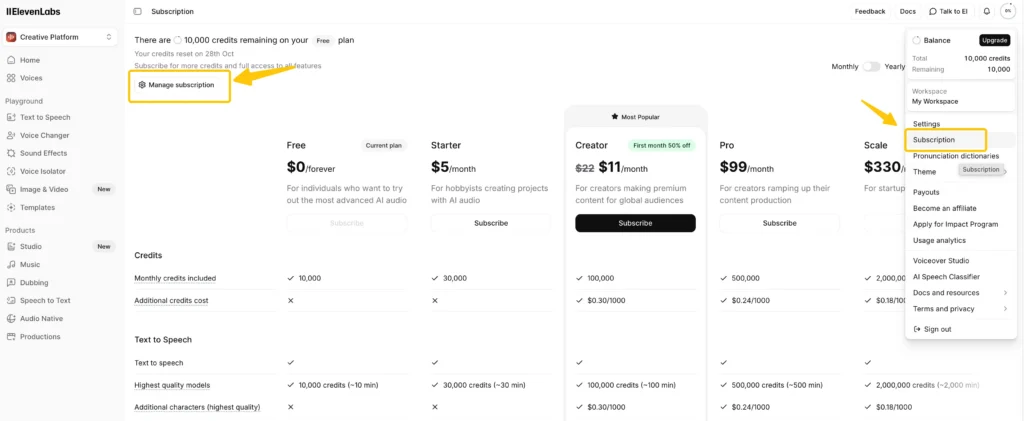

How to Cancel ElevenLabs Subscription

Canceling your ElevenLabs subscription takes 3 steps through the account settings, with cancellation effective at the end of your current billing period.

1. Access Settings: Log into elevenlabs.io and click your profile icon → “Settings”

2. Navigate to Billing: Select “Subscription” tab → “Manage Subscription”

3. Cancel Plan: Click “Cancel Subscription” → Confirm cancellation

When the billing period ends, your account reverts to the free tier: you keep access to previously generated audio but lose premium features. Unused credits don’t roll over or qualify for refunds.

Pause Alternative: Instead of canceling, consider pausing your subscription for up to 3 billing cycles if you’re between projects.

ElevenLabs Alternatives: Platform Comparison

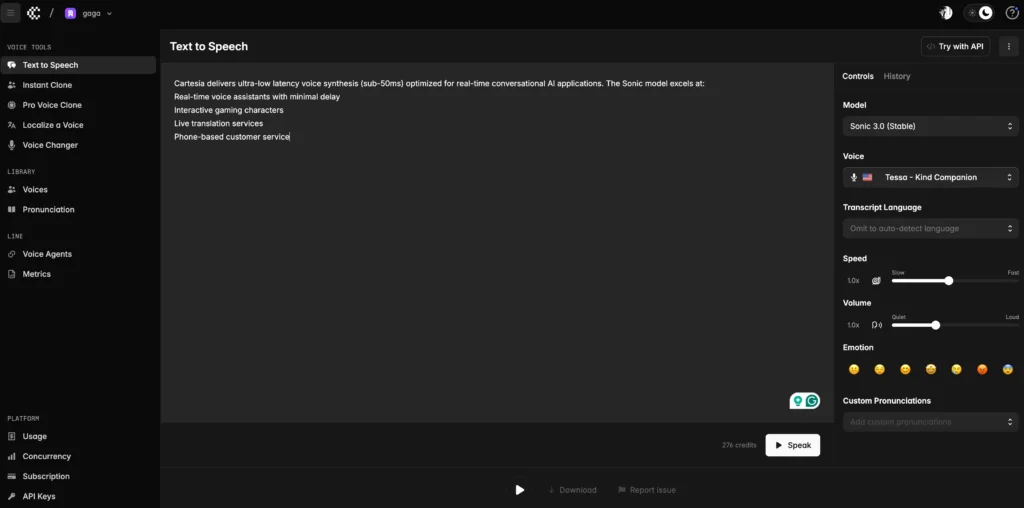

#1 – Cartesia AI

Cartesia delivers ultra-low latency voice synthesis (sub-50ms) optimized for real-time conversational AI applications. The Sonic model excels at:

- Real-time voice assistants with minimal delay

- Interactive gaming characters

- Live translation services

- Phone-based customer service

Cartesia prioritizes speed over emotional expressiveness, making it ideal for applications where response time matters more than voice nuance.

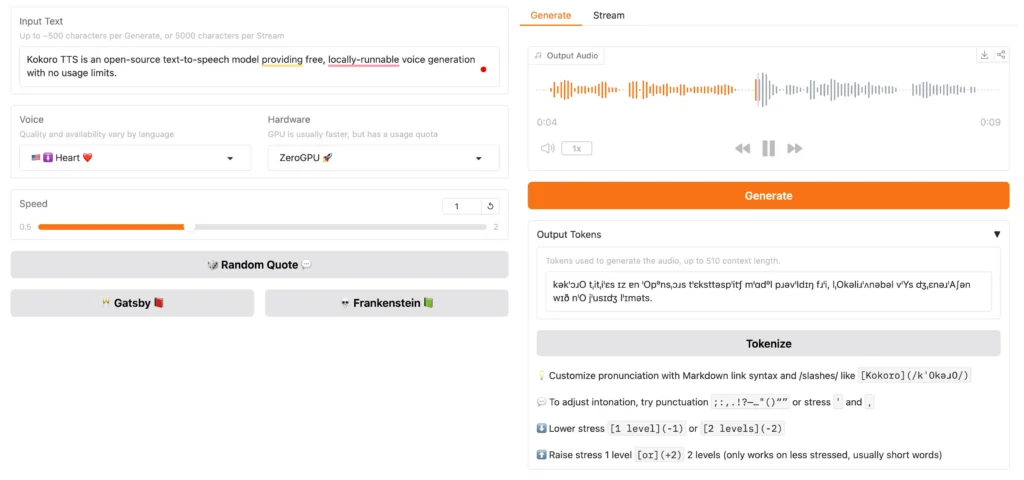

#2 – Kokoro TTS

Kokoro TTS is an open-source text-to-speech model providing free, locally-runnable voice generation with no usage limits. Key advantages:

- Complete data privacy (runs offline)

- No subscription costs

- Customizable model fine-tuning

The tradeoff: Kokoro requires technical expertise for setup and lacks the polish of commercial platforms.

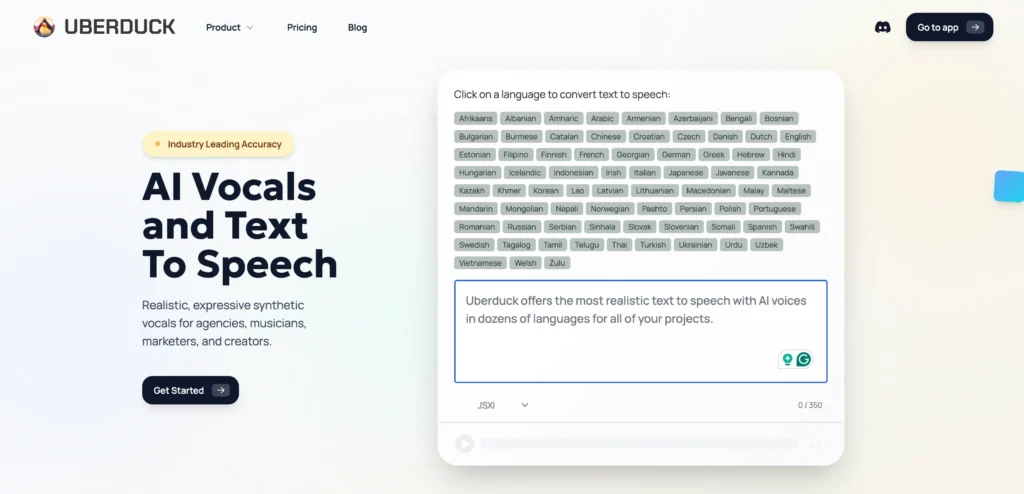

#3 – Uberduck

Uberduck specializes in creative voice synthesis with extensive rap and music capabilities, offering 5,000+ voice options including celebrity soundalikes. Standout features:

- Music generation and vocal synthesis

- Voice-to-voice conversion

- API for custom integrations

- Lower pricing than ElevenLabs ($10/month for 625,000 characters)

Uberduck suits content creators focusing on entertainment and music-adjacent projects.

#4 – OpenAI Text-to-Speech (TTS)

OpenAI’s TTS API provides six high-quality preset voices with straightforward pricing: $15 per million characters for the standard tts-1 model ($30 for tts-1-hd), significantly cheaper than ElevenLabs at scale. Benefits include:

- Simple API integration

- Predictable costs

- Reliable infrastructure

- Multiple voice options (Alloy, Echo, Fable, Onyx, Nova, Shimmer)

Limitation: Without voice cloning, you cannot create a custom brand voice.

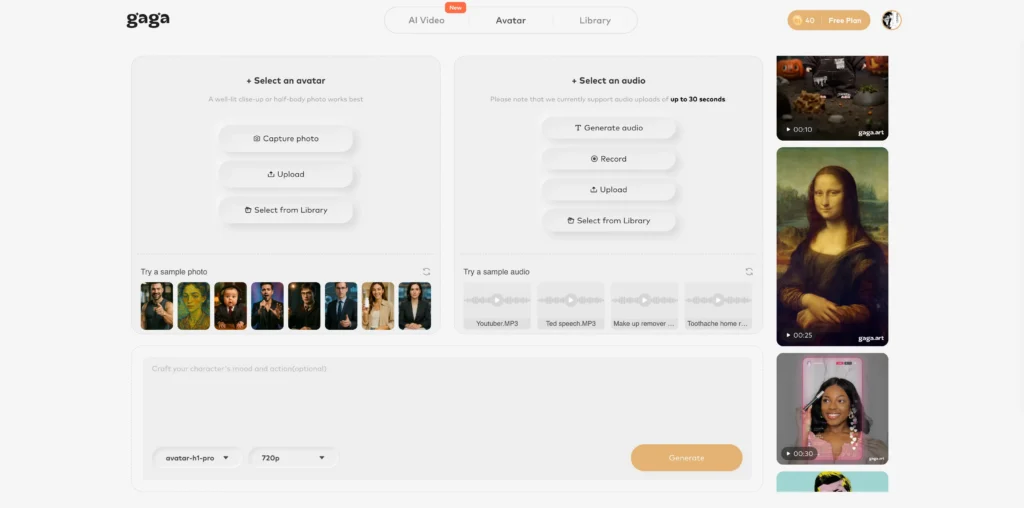

#5 – Gaga AI

Gaga AI uniquely visualizes audio through animated characters synchronized to generated speech, creating engaging video content from text inputs. This platform targets:

- Social media content creators

- Educational video production

- Marketing and advertising

- Presentation enhancement

Gaga AI combines voice generation with visual avatars, streamlining video production workflows.

#6 – Viblo.ai

Viblo.ai focuses on Vietnamese language optimization with natural-sounding voices specifically trained for Vietnamese phonetics and tonal patterns. For Vietnamese content creators, Viblo.ai outperforms global platforms with:

- Native Vietnamese voice quality

- Accurate tone reproduction

- Region-specific accents (Northern, Central, Southern)

- Lower latency for Vietnamese text

ElevenLabs AI Voice Quality: What Makes It Different

ElevenLabs achieves superior voice realism through contextual understanding that adapts delivery based on punctuation, sentence structure, and implied emotion.

Emotional Intelligence

The AI detects and responds to emotional cues:

- Questions: Upward inflection at sentence end

- Exclamations: Increased energy and volume

- Parenthetical remarks: Softer delivery with slight speed adjustment

- Ellipses: Natural pauses suggesting hesitation

- ALL CAPS: Emphasis without unnatural shouting

This contextual awareness creates voices that sound genuinely conversational rather than mechanically reading text.

Pronunciation Accuracy

ElevenLabs handles complex pronunciation better than competitors through its pronunciation dictionary and phonetic override system. For technical content, you can:

- Add custom pronunciations using IPA notation

- Create pronunciation libraries for branded terms

- Use SSML-style tags for precise control
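Pronunciation dictionaries can be uploaded as W3C Pronunciation Lexicon Specification (PLS) files, which map a written word either to an IPA phoneme string or to an alias that is read in its place. The Python sketch below generates such a file with the standard library. The example entries are illustrative, and whether phoneme rules are honored depends on the model you use (alias rules are the more portable option), so test the dictionary on your target model.

```python
import xml.etree.ElementTree as ET

PLS_NS = "http://www.w3.org/2005/01/pronunciation-lexicon"


def build_pls(phonemes: dict[str, str], aliases: dict[str, str], lang: str = "en-US") -> str:
    """Build a PLS lexicon: phonemes maps word -> IPA, aliases maps word -> replacement text."""
    root = ET.Element("lexicon", {
        "version": "1.0",
        "xmlns": PLS_NS,
        "alphabet": "ipa",
        "xml:lang": lang,
    })
    for word, ipa in phonemes.items():
        lexeme = ET.SubElement(root, "lexeme")
        ET.SubElement(lexeme, "grapheme").text = word
        ET.SubElement(lexeme, "phoneme").text = ipa
    for word, spoken in aliases.items():
        lexeme = ET.SubElement(root, "lexeme")
        ET.SubElement(lexeme, "grapheme").text = word
        ET.SubElement(lexeme, "alias").text = spoken
    # Declare UTF-8 explicitly; save the file with encoding="utf-8" to match.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(build_pls(
        phonemes={"tomato": "təˈmeɪtoʊ"},
        aliases={"UN": "United Nations"},
    ))
```

Save the output as a UTF-8 .pls file and keep one lexicon per project so branded terms are read the same way in every segment.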

However, the system still struggles with:

- Large numbers (200,000+ often mispronounced)

- Mixed-language content (accent bleeding)

- Uncommon acronyms and neologisms
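A common workaround for the number problem is to write numbers out as words before generation. The Python sketch below is a simplified, hypothetical helper that converts whole numbers (including comma-separated ones such as 200,000) into American English words. It converts every whole number it finds, years included, and ignores decimals, currency, and ordinals, which usually need their own reading rules, so review the converted script before generating.

```python
import re

ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven", "eight", "nine",
        "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen", "sixteen",
        "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy", "eighty", "ninety"]
SCALES = [(10**9, "billion"), (10**6, "million"), (10**3, "thousand")]


def _below_thousand(n: int) -> str:
    """Spell out 1..999."""
    words = []
    hundreds, rest = divmod(n, 100)
    if hundreds:
        words.append(f"{ONES[hundreds]} hundred")
    if rest >= 20:
        tens, ones = divmod(rest, 10)
        words.append(TENS[tens] + (f"-{ONES[ones]}" if ones else ""))
    elif rest:
        words.append(ONES[rest])
    return " ".join(words)


def number_to_words(n: int) -> str:
    """Spell out an integer in American English, e.g. 200000 -> 'two hundred thousand'."""
    if n < 0:
        return "minus " + number_to_words(-n)
    if n == 0:
        return "zero"
    parts = []
    for value, name in SCALES:
        count, n = divmod(n, value)
        if count:
            parts.append(f"{number_to_words(count)} {name}")
    if n:
        parts.append(_below_thousand(n))
    return " ".join(parts)


# Whole numbers, with or without thousands separators (200,000 or 1500).
NUMBER_RE = re.compile(r"\b\d{1,3}(?:,\d{3})+\b|\b\d+\b")


def spell_out_numbers(text: str) -> str:
    """Replace each whole number in text with its spelled-out form."""
    return NUMBER_RE.sub(lambda m: number_to_words(int(m.group().replace(",", ""))), text)
```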

Voice Consistency Across Content

Professional voice models maintain timbral consistency across projects, critical for audiobooks and branded content requiring hundreds of generated segments.

Consistency factors:

- Stability slider: Higher values (70-80%) reduce variation

- Same voice model across all segments

- Identical audio post-processing chain

- Regular quality audits during long projects
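Through the API, the simplest way to hold these factors fixed is to define the voice settings once and reuse the same object for every segment. The Python sketch below builds (but does not send) a request to the /v1/text-to-speech/{voice_id} endpoint, using the xi-api-key header and a voice_settings object with stability, similarity_boost, style, and use_speaker_boost fields. The API key, voice ID, and setting values are placeholders, and the field names should be checked against the current API reference.

```python
import json
import urllib.request
from dataclasses import asdict, dataclass

API_BASE = "https://api.elevenlabs.io/v1"


@dataclass(frozen=True)
class VoiceSettings:
    """Frozen so one shared instance cannot drift between segments."""
    stability: float = 0.75          # 0.0-1.0; within the 70-80% range suggested above
    similarity_boost: float = 0.75
    style: float = 0.0
    use_speaker_boost: bool = True


def build_tts_request(api_key: str, voice_id: str, text: str,
                      model_id: str, settings: VoiceSettings) -> urllib.request.Request:
    """Prepare a text-to-speech POST request; pass it to urlopen() to fetch the audio."""
    body = {"text": text, "model_id": model_id, "voice_settings": asdict(settings)}
    return urllib.request.Request(
        f"{API_BASE}/text-to-speech/{voice_id}",
        data=json.dumps(body).encode("utf-8"),
        headers={"xi-api-key": api_key, "Content-Type": "application/json",
                 "Accept": "audio/mpeg"},
        method="POST",
    )


if __name__ == "__main__":
    SETTINGS = VoiceSettings()          # one object for the whole project
    MODEL = "eleven_multilingual_v2"    # same model for every segment
    segments = ["Chapter one.", "It was a quiet morning."]
    prepared = [build_tts_request("YOUR_API_KEY", "YOUR_VOICE_ID", s, MODEL, SETTINGS)
                for s in segments]
    print(len(prepared), "requests prepared with identical settings")
```

Because the settings object is frozen and shared, changing a value means creating a new object deliberately, which makes accidental per-segment drift easy to spot in code review.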

Real-World Use Cases: Where ElevenLabs Excels

Audiobook Production

ElevenLabs revolutionizes audiobook creation by reducing production costs 80-90% compared to human narration while maintaining professional quality. Publishers use the platform to:

- Generate multi-character voices for fiction

- Produce audiobooks in weeks instead of months

- Create multilingual versions simultaneously

- Test market audiobook concepts before investing in full production

A 50,000-word novel (roughly 250,000-300,000 characters) costs approximately $40-60 in credits at Pro or Scale rates before regenerations, versus $3,000-5,000 for human narration.
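The credit figure can be reproduced from the plan prices listed earlier. The sketch below assumes about 6 characters per English word (letters plus a space or punctuation mark) and lets you apply the regeneration multiplier discussed under Hidden Cost Reality. The per-character rates come from the Pro and Scale allowances; overage or top-up pricing may differ.

```python
CHARS_PER_WORD = 6  # assumption: ~5 letters plus one space or punctuation mark


def credit_cost(words: int, plan_price: float, plan_chars: int,
                regen_multiplier: float = 1.0) -> float:
    """Approximate USD of plan credits consumed by a manuscript of `words` words."""
    chars = words * CHARS_PER_WORD
    return chars * plan_price / plan_chars * regen_multiplier


if __name__ == "__main__":
    novel = 50_000
    for plan, price, chars in [("Pro", 99, 500_000), ("Scale", 330, 2_000_000)]:
        clean = credit_cost(novel, price, chars)
        realistic = credit_cost(novel, price, chars, regen_multiplier=2.5)
        print(f"{plan}: ${clean:.2f} with no retakes, ${realistic:.2f} at 2.5x")
```

Even at a 2.5x regeneration multiplier, the estimate stays far below the human-narration range.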

YouTube Content Creation

Content creators use ElevenLabs for consistent voiceovers across video series, eliminating recording variability and accelerating production schedules.

Workflow benefits:

- Script-to-audio in minutes

- No recording booth required

- Consistent audio quality

- Easy corrections and updates

- Multilingual channel expansion

Popular niches: educational content, documentary-style videos, explainer animations, meditation and sleep content.

E-Learning and Corporate Training

Educational technology companies integrate ElevenLabs to narrate courses, providing scalable content delivery without human narrator costs.

Training applications:

- Compliance training modules

- Product knowledge courses

- Safety instruction videos

- Onboarding materials

- Language learning content

The conversational AI agents additionally enable interactive learning scenarios with personalized feedback.

Customer Service Automation

Enterprises deploy ElevenLabs agents for phone-based customer support, handling routine inquiries with natural conversation while escalating complex issues to human agents.

ROI metrics from early adopters:

- 60-70% reduction in basic inquiry handling costs

- 24/7 availability without staffing overhead

- Average handle time reduced by 40%

- Customer satisfaction scores comparable to human agents for transactional queries

Technical Considerations: Integration and Development

API Implementation

The ElevenLabs API provides RESTful endpoints for text-to-speech, voice cloning, and speech-to-speech conversion, with official SDKs for Python and JavaScript/TypeScript.

Core endpoints:

- /v1/text-to-speech/{voice_id}: Generate audio from text

- /v1/voices: List available voices

- /v1/voice-generation: Create custom voices

- /v1/speech-to-speech/{voice_id}: Convert voice characteristics

Rate limits vary by plan and are expressed mainly as a cap on concurrent requests; requests beyond the cap are rejected with HTTP 429, so clients should queue work and retry.
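Whatever form the plan limit takes, a client should cap its in-flight requests and back off when the server answers HTTP 429. The asyncio sketch below shows that pattern with a semaphore and jittered exponential backoff. Here send stands for your own coroutine that performs the HTTP call and raises the hypothetical RateLimited exception on a 429, and the default concurrency of 3 is an arbitrary placeholder, not a documented plan limit.

```python
import asyncio
import random
from typing import Awaitable, Callable, Iterable, TypeVar

T = TypeVar("T")
R = TypeVar("R")


class RateLimited(Exception):
    """Hypothetical: raised by `send` when the API responds with HTTP 429."""


async def run_bounded(jobs: Iterable[T], send: Callable[[T], Awaitable[R]],
                      max_concurrency: int = 3, max_retries: int = 4,
                      base_delay: float = 0.5) -> list[R]:
    """Run send(job) for every job with at most max_concurrency calls in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def attempt_job(job: T) -> R:
        for attempt in range(max_retries + 1):
            async with semaphore:
                try:
                    return await send(job)
                except RateLimited:
                    if attempt == max_retries:
                        raise
            # Back off outside the semaphore so the slot is free for other jobs.
            # Exponential delay with 0.5x-1.5x random jitter to avoid synchronized retries.
            await asyncio.sleep(base_delay * 2 ** attempt * random.uniform(0.5, 1.5))
        raise AssertionError("unreachable")

    # gather() returns results in the same order as the input jobs.
    return await asyncio.gather(*(attempt_job(job) for job in jobs))
```

Set max_concurrency to the concurrency your plan allows; the backoff only handles the occasional 429, not sustained overload.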

Latency Optimization

For real-time applications, achieving sub-200ms latency requires strategic model selection and architecture choices:

1. Use Flash v2.5: Specifically optimized for low latency

2. Enable Streaming: Receive audio chunks as generated

3. Implement Local Caching: Store common phrases

4. Optimize Text Chunking: Smaller segments process faster

WebSocket connections reduce overhead compared to HTTP requests for continuous conversations.
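Local caching pays off for the fixed phrases a voice agent repeats, such as greetings, hold messages, and confirmations. The Python sketch below is a small least-recently-used cache keyed by voice, model, and text. The synthesize argument is a placeholder for whatever function calls the API; if you vary voice settings per request, add them to the key as well.

```python
from collections import OrderedDict
from typing import Callable

Key = tuple[str, str, str]  # (voice_id, model_id, text)


class PhraseCache:
    """LRU cache of synthesized audio so repeated phrases skip the API call."""

    def __init__(self, synthesize: Callable[[str, str, str], bytes], max_entries: int = 256):
        self._synthesize = synthesize      # placeholder: your function that calls the TTS API
        self._max_entries = max_entries
        self._store: OrderedDict[Key, bytes] = OrderedDict()

    def get(self, voice_id: str, model_id: str, text: str) -> bytes:
        key = (voice_id, model_id, " ".join(text.split()))  # normalize whitespace
        if key in self._store:
            self._store.move_to_end(key)   # mark as most recently used
            return self._store[key]
        audio = self._synthesize(*key)
        self._store[key] = audio
        if len(self._store) > self._max_entries:
            self._store.popitem(last=False)  # evict the least recently used entry
        return audio
```

A hit returns stored audio with no network round trip at all, which beats any model latency; misses fall through to the normal (ideally streaming) request path.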

Audio Quality Management

Maintaining consistent audio quality across deployments requires standardized post-processing:

- Normalization: -16 LUFS for podcasts and streaming, -23 LUFS for broadcast (EBU R128)

- EQ: High-pass filter at 80Hz removes rumble

- Compression: Light compression (2:1 ratio) for consistency

- Limiting: -1dB true peak prevents clipping

Automated pipelines using ffmpeg or similar tools ensure every generated file meets quality standards.
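As one possible pipeline, the Python sketch below assembles an ffmpeg command that applies the chain above in a typical processing order (high-pass EQ, then 2:1 compression, then loudness normalization with a -1 dB true-peak ceiling) using ffmpeg's highpass, acompressor, and loudnorm filters. The -16 LUFS default is only a placeholder, so pass the loudness target for your delivery platform. Single-pass loudnorm is an approximation (a two-pass run that measures first is more accurate), and the file names are placeholders.

```python
import shlex
import subprocess


def mastering_command(src: str, dst: str, target_lufs: float = -16.0,
                      true_peak_db: float = -1.0, sample_rate: int = 48_000) -> list[str]:
    """Build an ffmpeg command for EQ -> compression -> loudness normalization."""
    filters = ",".join([
        "highpass=f=80",                 # cut rumble below 80 Hz
        "acompressor=ratio=2",           # light 2:1 compression
        f"loudnorm=I={target_lufs}:TP={true_peak_db}:LRA=11",  # loudness target + true-peak cap
    ])
    # loudnorm can raise the internal sample rate, so set the output rate explicitly.
    return ["ffmpeg", "-y", "-i", src, "-af", filters, "-ar", str(sample_rate), dst]


def master(src: str, dst: str, **options) -> None:
    """Run the command; raises CalledProcessError if ffmpeg fails."""
    subprocess.run(mastering_command(src, dst, **options), check=True)


if __name__ == "__main__":
    print(shlex.join(mastering_command("voiceover.wav", "voiceover_mastered.wav")))
```

Building the argument list separately from running it keeps the chain easy to test and log, and passing a list (not a shell string) to subprocess avoids quoting problems with file names.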

Common Problems and Solutions

Issue: Voice Sounds Robotic or Unnatural

Lower the stability slider to 40-60% and increase the style exaggeration to 30-50%. Lower stability introduces natural variation while style exaggeration enhances emotional expressiveness.

Additional fixes:

- Add punctuation for natural pauses

- Break long texts into shorter segments

- Use the “Eleven v3” model for maximum expressiveness

- With Eleven v3, add emotional direction as bracketed audio tags: [excited], [whispered], [serious]

Issue: Inconsistent Voice Across Segments

Enable the “Stability Boost” feature in Studio and maintain identical generation settings across all segments. Save your successful parameter combination as a preset.

Consistency checklist:

- Same voice model for entire project

- Identical stability/clarity/style settings

- Consistent text formatting

- Sequential generation (avoid mixing old/new generations)

Issue: Credits Draining Faster Than Expected

Generate shorter segments (under 500 words) and use the Studio preview feature to test before committing credits.

Credit preservation strategies:

- Test with free voices before using cloned voices

- Proof text carefully before generation

- Use the pronunciation dictionary upfront

- Generate during off-peak hours (fewer errors)

Issue: Poor Voice Clone Quality

Professional voice cloning requires studio-quality audio: 30+ minutes of clean recordings at 48kHz, 24-bit, with RMS between -23 dB and -18 dB.

Voice clone improvement steps:

1. Record in acoustically treated space

2. Use decent microphone ($200+ minimum)

3. Maintain consistent mic distance (6-8 inches)

4. Include varied emotional deliveries

5. Apply professional audio processing

6. Test clone with short samples before bulk recording

Frequently Asked Questions

What is ElevenLabs used for?

ElevenLabs generates realistic AI voices for audiobook narration, video voiceovers, podcast production, customer service automation, e-learning content, and conversational AI agents. Content creators, developers, and enterprises use it to scale voice content production without human narrators.

Does ElevenLabs have a free plan?

Yes, ElevenLabs offers 10,000 free characters monthly (roughly 10 minutes of audio) with access to the voice library and standard quality. Commercial use requires attribution unless you upgrade to a paid plan starting at $5/month.

Can I clone any voice with ElevenLabs?

You can clone voices you have legal rights to use. ElevenLabs prohibits cloning voices without explicit consent. Professional voice cloning requires 30+ minutes of high-quality audio recordings, while instant cloning works with 1-5 minutes but produces lower quality results.

Which ElevenLabs model is best?

Eleven v3 (alpha) delivers the most expressive, emotionally nuanced voices for voiceovers and creative content. Eleven Flash v2.5 provides the lowest latency (75ms) for real-time conversational AI. Eleven Multilingual v2 offers the best balance for non-English languages.

How accurate is ElevenLabs speech to text?

ElevenLabs Speech to Text achieves 98% accuracy with features including speaker diarization (identifying different speakers) and character-level timestamps. Pricing starts at $0.22 per hour on business plans, competitive with alternatives like OpenAI Whisper.

Can ElevenLabs handle multiple languages in one project?

Yes, but language switching within single texts often causes accent bleeding and inconsistent delivery. For best results, generate each language separately using voices specifically trained for that language rather than multilingual voices.

Is ElevenLabs better than OpenAI TTS?

ElevenLabs offers superior voice cloning, more expressive delivery, and extensive customization options. OpenAI TTS provides simpler implementation, more predictable pricing ($15 per million characters vs ElevenLabs’ $22-40), and six quality preset voices without cloning capability.

How do I improve ElevenLabs pronunciation?

Use the pronunciation dictionary in Studio to define custom pronunciations, write numbers as words (“two hundred thousand” instead of “200,000”), add phonetic spellings in brackets, and break problematic words into syllables with hyphens.

Can businesses use ElevenLabs for customer service?

Yes, the Agents Platform specifically enables customer service automation with phone integration, function calling for task automation, and sub-second response times. Enterprises including Decagon use ElevenLabs for AI-powered customer interactions at scale.

What audio editing software works with ElevenLabs?

Audacity (free), Adobe Audition ($240/year), Reaper ($60), or DaVinci Resolve (free) handle ElevenLabs audio for normalization, noise reduction, and mastering. Professional workflows benefit from DAWs like Pro Tools or Logic Pro for complex editing.

Does ElevenLabs work offline?

No, ElevenLabs requires internet connectivity for all voice generation. The platform runs cloud-based AI models that cannot operate offline. For offline capability, consider open-source alternatives like Kokoro TTS or Piper TTS.

How long does voice generation take?

Standard text-to-speech generates audio at 2-5x real-time speed (a 1-minute script takes 12-30 seconds). Flash v2.5 model achieves sub-second generation for real-time applications. Voice cloning setup requires 10-30 minutes for processing.

Can I get refunds for unused ElevenLabs credits?

ElevenLabs offers limited refunds within 30 days for substantially unused accounts. Credits used for testing count as “used” and disqualify refunds. Unused credits don’t roll over monthly, so timing subscriptions carefully prevents waste.

What’s the difference between ElevenLabs and traditional TTS?

Traditional text-to-speech uses concatenative synthesis (stitching recorded phonemes) producing robotic voices. ElevenLabs uses neural networks trained on human speech patterns, generating contextually aware, emotionally expressive voices indistinguishable from human recordings.

Is ElevenLabs GDPR compliant?

Yes, ElevenLabs maintains GDPR compliance and SOC 2 Type II certification for data security. Enterprise plans include custom data processing agreements and on-premise deployment options for sensitive applications requiring air-gapped environments.