Breakthrough Engram Memory Architecture | 1M+ Token Context | 10x Cost Reduction

⚡ BREAKING: DeepSeek Quietly Tests V4 Features

On February 11, 2026, users discovered DeepSeek silently rolling out major upgrades: context window expanded from 128K to 1M tokens, and knowledge cutoff updated to May 2025. This may be the V4 preview. Official release expected around February 17, 2026 (Lunar New Year).

Key Takeaways

- Release Date: Mid-February 2026 (around February 17, coinciding with Lunar New Year)

- Core Innovation: Engram conditional memory architecture separates static knowledge retrieval from dynamic reasoning with O(1) hash lookups

- Performance Claims: Internal benchmarks reportedly show V4 outperforming Claude 3.5 Sonnet and GPT-4o on coding tasks, and reportedly outperforming Claude Opus 4.5 on SWE-bench Verified

- Context Capability: Processes 1M+ tokens for entire codebase analysis in a single pass—eliminating context fragmentation that plagues current AI tools

- Cost Advantage: Expected to reduce frontier AI coding costs by up to 10x through memory efficiency and computational optimization

- Open-Source Approach: Likely to be released as an open-weight model for local deployment on consumer hardware (dual RTX 4090s or a single RTX 5090)


What is DeepSeek V4?

DeepSeek V4 is the next-generation flagship AI model from Chinese AI startup DeepSeek, specifically engineered for advanced coding capabilities with revolutionary long-context processing. The model represents a massive architectural shift focused on long-context coding mastery and extreme efficiency, with an expected release in mid-February 2026.

Unlike general-purpose language models, V4 prioritizes software development tasks including code generation, debugging, refactoring, and architectural analysis. The model introduces revolutionary memory management through its Engram architecture, enabling it to handle entire codebases within a single context window exceeding one million tokens—a game-changer for enterprise development workflows.

How DeepSeek V4 Differs from Previous Models

While DeepSeek V3 served as a general-purpose large language model, V4 refines the balance between reasoning capabilities and specialized technical output through three breakthrough innovations:

• Engram Conditional Memory: Published January 13, 2026, this architecture performs O(1) lookups for static patterns, freeing GPU resources for complex reasoning

• mHC (Manifold-Constrained Hyper-Connections): Released January 1, 2026, this framework addresses training stability issues, enabling larger models to be trained on the same hardware

• Coding Specialization: Reflects market demand for AI tools that excel at specific professional tasks rather than attempting to be generalists across all domains

DeepSeek V4 Release Date: When Will It Launch?

DeepSeek reportedly plans to release V4 around February 17, 2026, coinciding with Lunar New Year festivities. This timing mirrors the company’s previous release strategy with R1, which debuted shortly before the 2025 Lunar New Year holiday and triggered a roughly $1 trillion tech stock selloff, including nearly $600 billion in NVIDIA market value alone.

Evidence from the February 11 Gray Release (Staged Rollout):

- Context window: Expanded from 128K (V3.1) to 1M tokens

- Knowledge cutoff: Updated to May 2025 (can answer April 2025 events without web search)

- GitHub clues: The FlashMLA repository revealed a ‘Model 1’ identifier appearing in 114 files, suggesting a major architectural overhaul

- Response speed: Noticeably faster than the 671B-parameter V3 series, possibly pointing to a smaller model of around 200B parameters optimized for efficiency

The DeepSeek V4 Paper: Understanding Engram Architecture

The technical foundation for DeepSeek V4 lies in groundbreaking research published in January 2026. Two key papers reveal the architectural innovations powering V4’s capabilities.

Conditional Memory via Scalable Lookup

Released jointly by DeepSeek and Peking University on January 12, 2026 (arXiv:2601.07372), the Engram paper introduces conditional memory as a complementary sparsity axis to traditional Mixture-of-Experts (MoE) computation.

The paper addresses a fundamental limitation in transformer architectures: they lack native knowledge lookup primitives. When processing text, transformers must simulate retrieval of static patterns through expensive neural computation across multiple layers.

How Engram Memory Works: The Breakthrough

Engram separates two fundamentally different workloads in language modeling:

1. Dynamic Reasoning (requires expensive computation):

• Logical composition and multi-step inference

• Mathematical problem solving

• Code generation and architectural decisions

• Tasks requiring genuine adaptive computation

2. Static Pattern Recall (should use cheap lookups):

• Named entities (people, places, organizations)

• Common phrases and idioms

• Technical terminology and formulaic expressions

• Patterns that are local, repetitive, and context-invariant

Engram’s Innovation:

Instead of forcing the model to ‘remember’ facts through expensive neural computation, Engram uses deterministic hash-based lookups for static patterns—achieving O(1) constant-time retrieval complexity.

The Technical Implementation

Consider a phrase like “Alexander the Great.” A traditional model spends computational resources across multiple layers to reconstruct contextual information about who this person was. Engram instead:

1. Identifies the N-gram pattern (a sequence of two or three tokens)

2. Performs hash-based lookup using a deterministic formula (O(1) complexity)

3. Retrieves pre-stored embeddings containing contextual information

4. Gates the information based on current context to ensure relevance

5. Adds the information to the token’s hidden state without expensive computation

This reduces the work the model’s neural layers must spend on recall. The GPUs are left to handle the more complex reasoning, which improves performance and reduces reliance on high-bandwidth memory.
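The five steps above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not DeepSeek’s implementation. The names `NgramMemory` and `engram_update` are hypothetical, as are the BLAKE2b hash, the table size, the token ids, and the scalar sigmoid gate. The real module may use several hash functions, learned projections, and a different gating form.

```python
import hashlib
import math
import random


class NgramMemory:
    """Hypothetical conditional-memory table: hashed N-gram -> stored embedding."""

    def __init__(self, num_slots: int, dim: int, seed: int = 0) -> None:
        self.num_slots = num_slots
        self.dim = dim
        rng = random.Random(seed)
        # In a trained model these rows would be learned; here they are random stand-ins.
        self.table = [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(num_slots)]

    def slot(self, ngram: tuple) -> int:
        """Step 2: deterministic hash of a token-id tuple to a row index (O(1), no search)."""
        key = ",".join(str(t) for t in ngram).encode()
        digest = hashlib.blake2b(key, digest_size=8).digest()
        return int.from_bytes(digest, "little") % self.num_slots

    def lookup(self, ngram: tuple) -> list:
        """Step 3: fetch the stored embedding for this N-gram."""
        return self.table[self.slot(ngram)]


def engram_update(hidden, token_ids, pos, memory, n=2):
    """Apply steps 1-5 to the hidden state of the token at position `pos`."""
    if pos + 1 < n:  # not enough preceding tokens to form an N-gram
        return list(hidden)
    ngram = tuple(token_ids[pos - n + 1 : pos + 1])  # step 1: N-gram ending at pos
    retrieved = memory.lookup(ngram)                  # steps 2-3: hash and fetch
    # Step 4 (assumed form): a scalar gate from hidden/memory similarity, squashed to (0, 1).
    score = sum(h * m for h, m in zip(hidden, retrieved)) / math.sqrt(len(hidden))
    gate = 1.0 / (1.0 + math.exp(-score))
    # Step 5: residual add into the hidden state; no attention or FFN work involved.
    return [h + gate * m for h, m in zip(hidden, retrieved)]


# Hypothetical token ids for "Alexander the Great"; with n=2, pos=2 looks up ("the", "Great").
tokens = [40213, 279, 8681]
memory = NgramMemory(num_slots=1024, dim=8)
hidden = [0.1] * 8
updated = engram_update(hidden, tokens, pos=2, memory=memory, n=2)
```

The lookup cost does not depend on the table size: one hash and one indexed read. That is the constant-time property the text describes.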

The U-Shaped Scaling Law

DeepSeek found the optimal balance between computation and memory with 75% of sparse model capacity allocated to dynamic reasoning and 25% to static lookups.

Testing revealed that pure MoE models (100% computation) proved suboptimal because they waste depth reconstructing static patterns. Conversely, too much memory allocation loses reasoning capacity. This U-shaped performance curve guides optimal parameter allocation.
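The arithmetic below makes the 75/25 split concrete for a hypothetical 20B-parameter sparse budget. The budget, the embedding width of 1,280, and the function name are illustrative assumptions. The sketch only shows how a 25% memory allocation translates into lookup-table size.

```python
def split_sparse_budget(sparse_params: int, memory_fraction: float = 0.25, embed_dim: int = 1280):
    """Split a sparse-parameter budget between MoE experts and an Engram-style table.

    memory_fraction=0.25 mirrors the reported 75/25 optimum; embed_dim is hypothetical.
    Returns (expert_params, memory_params, table_rows).
    """
    if not 0.0 <= memory_fraction <= 1.0:
        raise ValueError("memory_fraction must be between 0 and 1")
    memory_params = int(sparse_params * memory_fraction)
    expert_params = sparse_params - memory_params
    table_rows = memory_params // embed_dim  # each row stores one embed_dim-wide vector
    return expert_params, memory_params, table_rows


experts, memory, rows = split_sparse_budget(20_000_000_000)
print(f"experts: {experts:,}  memory: {memory:,}  table rows: {rows:,}")
```

With these assumptions, 5B parameters go to memory. At 1,280 values per row, that is about 3.9 million N-gram slots.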

Manifold-Constrained Hyper-Connections (mHC)

The second architectural innovation comes from DeepSeek’s mHC paper, released January 1, 2026. The mHC framework addresses fundamental problems in scaling large language models, improving scalability while reducing computational and energy demands during training.

Unconstrained hyper-connections suffer from broken identity mapping and catastrophic signal amplification, with gains reaching 10³ to 10⁵ in deep networks. mHC constrains these connections to restore stable signal propagation, enabling DeepSeek to train larger models more reliably on hardware that would otherwise limit capacity.
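A toy model reproduces the amplification problem. The sketch treats each layer’s hyper-connection as a 4×4 matrix that mixes parallel residual streams. It then measures how much the composed 40-layer mapping can scale a signal.

The constraint used here is Sinkhorn-Knopp normalization to doubly stochastic matrices, whose rows and columns each sum to 1. That choice is an assumption for illustration, not a detail taken from the text above. The stream count, depth, and value ranges are also assumptions.

```python
import random


def sinkhorn(m, iters=50):
    """Alternately rescale rows and columns of a positive matrix toward doubly stochastic."""
    m = [row[:] for row in m]
    n = len(m)
    for _ in range(iters):
        for row in m:  # normalize each row to sum 1
            s = sum(row)
            for j in range(n):
                row[j] /= s
        for j in range(n):  # normalize each column to sum 1
            s = sum(m[i][j] for i in range(n))
            for i in range(n):
                m[i][j] /= s
    return m


def matmul(a, b):
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def gain(m):
    """Induced infinity-norm (max absolute row sum): worst-case signal scaling."""
    return max(sum(abs(x) for x in row) for row in m)


def composed_gain(layers):
    """Gain of the mapping obtained by applying every layer's mixing matrix in order."""
    n = len(layers[0])
    total = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for h in layers:
        total = matmul(h, total)
    return gain(total)


random.seed(0)
n_streams, depth = 4, 40
# Unconstrained mixing matrices: positive entries, so every row sums to at least 1.2.
raw = [[[random.uniform(0.3, 0.6) for _ in range(n_streams)] for _ in range(n_streams)]
       for _ in range(depth)]
constrained = [sinkhorn(h) for h in raw]

print(f"unconstrained gain: {composed_gain(raw):.3g}")
print(f"constrained gain:   {composed_gain(constrained):.6f}")
```

Every row of a doubly stochastic matrix sums to 1, and so does every row of a product of such matrices. The constrained gain therefore stays at 1 at any depth. The unconstrained product grows at least 1.2× per layer, since every row sum is at least 4 × 0.3.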

Performance Results from the Engram Paper

Under strictly controlled conditions with equal parameters and FLOPs, Engram-27B demonstrated consistent improvements over MoE baselines:

Knowledge Tasks:

- MMLU: +3.4 improvement

- CMMLU: +4.0 improvement

- MMLU-Pro: +1.8 improvement

Reasoning Tasks (even larger gains):

- BBH (Big-Bench Hard): +5.0 improvement

- ARC-Challenge: +3.7 improvement

- DROP: +3.3 improvement

Code and Math:

- HumanEval: +3.0 improvement

- MATH: +2.4 improvement

- GSM8K: +2.2 improvement

The surprising finding: gains were not limited to knowledge-intensive tasks. The largest single improvement came in general reasoning (BBH, +5.0), and code and math benchmarks improved as well (+2.2 to +3.0).

DeepSeek V4 Coding Capabilities: What Sets It Apart

Reported internal testing points to particular strengths in coding tasks.

Superior Code Generation

Internal testing by DeepSeek employees has reportedly shown that V4 outperforms Anthropic’s Claude 3.5 Sonnet and OpenAI’s GPT-4o in coding tasks. These claims have not been verified by independent testing, but they have generated significant interest among engineers and technology leaders.

The model shows particular strength in:

- Code generation accuracy for multiple programming languages and frameworks

- Enhanced handling of complex programming scenarios that typically challenge AI models

- Improved understanding of software architecture patterns and best practices

- More reliable debugging suggestions for sophisticated codebases

Revolutionary Long-Context Processing

One of the most significant breakthroughs in DeepSeek V4 is its ability to process and manage extremely long coding prompts. With context windows exceeding 1 million tokens, DeepSeek V4 can process entire codebases in a single pass.
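You can estimate whether a given codebase fits in a 1M-token window before trying. The sketch below assumes roughly 4 characters per token, a common rule of thumb; real tokenizers vary. The set of source-file extensions, the skipped directories, and the function names are hypothetical choices.

```python
import os

# Assumptions: which files count as source, and which directories to skip.
SOURCE_EXTENSIONS = {".py", ".js", ".ts", ".java", ".go", ".rs", ".c", ".cpp", ".h", ".md"}
SKIP_DIRS = {".git", "node_modules", "venv", ".venv", "build", "dist"}


def estimate_repo_tokens(root: str, chars_per_token: float = 4.0) -> int:
    """Rough token count for all source files under `root`."""
    total_chars = 0
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune skipped directories in place so os.walk does not descend into them.
        dirnames[:] = [d for d in dirnames if d not in SKIP_DIRS]
        for name in filenames:
            if os.path.splitext(name)[1] in SOURCE_EXTENSIONS:
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as f:
                    total_chars += len(f.read())
    return int(total_chars / chars_per_token)


def fits_in_context(tokens: int, window: int = 1_000_000, reserve: int = 50_000) -> bool:
    """True if the repo still leaves `reserve` tokens for instructions and the answer."""
    return tokens <= window - reserve
```

For example, `fits_in_context(estimate_repo_tokens("path/to/repo"))` gives a quick yes or no. A repository close to the limit deserves a check with the model’s actual tokenizer once one is published.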

Why Long-Context Matters for Developers

Traditional AI coding models often struggle with context limitations, forcing developers to fragment complex problems into smaller pieces. This creates several challenges:

- Loss of architectural context when working across multiple files

- Difficulty maintaining consistency in large-scale refactoring projects

- Reduced effectiveness in understanding system-wide dependencies

- Interrupted workflow requiring multiple separate AI interactions

DeepSeek V4’s long-context capabilities could remove many of these friction points.

Practical Applications for Software Development

The extended context window opens up transformative possibilities:

Large-Scale Refactoring

Developers can provide entire modules or subsystems as context, enabling the AI to suggest refactoring strategies that maintain consistency across thousands of lines of code.

Repository-Level Understanding

V4 enables true multi-file reasoning, where the model can understand relationships between components, trace dependencies, and maintain consistency across large-scale refactoring operations.

Deep Code Reviews

V4 can analyze complete feature implementations spanning multiple files, identifying potential issues with architecture, performance, security, and maintainability in a single comprehensive review.

Cross-File Dependency Management

The model can track dependencies and relationships across numerous files, helping developers understand ripple effects of changes throughout the codebase.

Comprehensive Documentation Generation

V4 can generate technical documentation by understanding the full scope of a project, including how different components interact and why specific design decisions were made.

DeepSeek V4 vs Claude vs GPT: Performance Comparison

How does DeepSeek V4 stack up against the current industry leaders in AI-assisted coding?

Internal Benchmark Claims

Leaked internal results reportedly show V4 consistently outperforming Claude Opus 4.5 on SWE-bench Verified. The model reportedly handles entire full-stack repositories, understands architectural debt, and suggests refactors that compile successfully on the first try.

Comparison with Leading Models

vs. Claude Sonnet 4.5

While Claude maintains advantages in conversational nuance and general reasoning, DeepSeek V4 reportedly handles ultra-long code contexts using a refined sparse attention mechanism. That reach extends well beyond Claude’s standard 200K-token context window.

vs. GPT-4o/GPT-5.2

GPT models excel at general reasoning across diverse domains, but for specialized, high-density coding tasks, V4’s architecture provides targeted advantages. The comparison is between a Swiss Army knife and a laser-guided scalpel—both valuable, but optimized for different purposes.

vs. Other Specialized Coding Models

DeepSeek V4 competes directly with specialized coding assistants like:

- GitHub Copilot (Microsoft-backed, GPT-powered)

- Amazon Q Developer (formerly CodeWhisperer, AWS-integrated)

- Cursor (AI-first editor gaining developer traction)

Key Benchmarks to Watch

The AI community will be monitoring several critical benchmarks at V4’s release:

SWE-bench Verified: The industry-standard benchmark for real-world software engineering tasks. Claude Opus 4.5 currently leads with an 80.9% solve rate; for V4 to claim the coding crown, it will need to exceed this threshold.

HumanEval: Measures code generation quality across programming problems.

MBPP (Mostly Basic Python Problems): Tests practical coding ability.

CodeContests: Evaluates performance on competitive programming challenges.

Independent verification of DeepSeek’s performance claims will be crucial. The developer community approaches internal benchmarks with appropriate skepticism, awaiting third-party validation.

Cost Efficiency: The 10x Advantage

One of DeepSeek V4’s most compelling features is its dramatic cost reduction compared to frontier AI models.

The Economic Impact

Recent analysis suggests that DeepSeek V4 could bring the price of frontier AI coding models down by approximately 10 times. This cost efficiency stems from multiple architectural innovations:

Engram’s Memory Efficiency

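Engram has significant implications for the AI industry because its lookup tables do not need to occupy scarce HBM (high-bandwidth memory). The lookup indices are computed deterministically from the input tokens, so the needed entries can be fetched ahead of time from cheaper system DRAM.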

This means:

- Expensive HBM can be reserved for the computationally heavy parts of the model

- Cheaper system RAM handles static knowledge storage

- Overall infrastructure costs could decrease substantially
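DRAM placement works because the hashing is deterministic. Every table row a sequence will need can be computed from the token ids alone, before the forward pass reaches the memory layer. The host can therefore gather just those rows and copy them to the GPU in advance.

The sketch below computes that prefetch set and its transfer size. The hash, table size, row width, FP16 storage, and function names are all hypothetical assumptions.

```python
import hashlib


def ngram_slot(ngram: tuple, num_slots: int) -> int:
    """Deterministic N-gram -> table row index (the hash choice is an assumption)."""
    key = ",".join(str(t) for t in ngram).encode()
    return int.from_bytes(hashlib.blake2b(key, digest_size=8).digest(), "little") % num_slots


def prefetch_plan(token_ids, num_slots: int, n: int = 2, dim: int = 1280, bytes_per_value: int = 2):
    """Return the unique rows a sequence needs and the bytes to copy host -> GPU."""
    rows = sorted({
        ngram_slot(tuple(token_ids[i - n + 1 : i + 1]), num_slots)
        for i in range(n - 1, len(token_ids))
    })
    return rows, len(rows) * dim * bytes_per_value


tokens = [11, 42, 7, 42, 7, 42, 7, 99]  # repeated bigrams share table rows
rows, nbytes = prefetch_plan(tokens, num_slots=10_000_000)
print(f"{len(rows)} rows to prefetch, {nbytes / 1024:.1f} KiB")
```

Only a few kilobytes per sequence move across the bus, however large the table in DRAM is.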

Computational Optimization

By separating static memory retrieval from dynamic reasoning, V4 reduces unnecessary computational waste. The model doesn’t burn GPU cycles reconstructing facts it could simply look up.

Efficient Sparse Architecture

The combination of MoE (Mixture-of-Experts) with Engram memory creates a model that activates only the parameters needed for each task, reducing active computation requirements.
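Activating only the needed parameters is the standard top-k routing used in MoE layers. A router scores every expert for a token, and only the k highest-scoring experts run. Their outputs are mixed with renormalized softmax weights.

This is a minimal sketch. The function names, the eight toy experts, and the logits are hypothetical. For reference, DeepSeek-V3 routes each token to 8 of its 256 routed experts.

```python
import math


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their softmax weights."""
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    max_logit = max(router_logits[i] for i in top)  # subtract max for numerical stability
    exp = {i: math.exp(router_logits[i] - max_logit) for i in top}
    total = sum(exp.values())
    return {i: exp[i] / total for i in top}  # expert index -> mixing weight


def moe_forward(x, experts, router_logits, k):
    """Run only the selected experts; unselected experts cost no compute for this token."""
    weights = top_k_route(router_logits, k)
    out = [0.0] * len(x)
    for i, w in weights.items():
        y = experts[i](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, sorted(weights)


# Toy experts: expert i scales its input by (i + 1).
experts = [lambda x, s=s: [s * v for v in x] for s in range(1, 9)]
logits = [0.1, 2.0, -1.0, 0.5, 1.5, -0.3, 0.0, 0.2]
out, used = moe_forward([1.0, 2.0], experts, logits, k=2)
print("experts used:", used)  # → experts used: [1, 4]
```

At 8 of 256 experts, only about 3% of the routed-expert parameters do work for any given token. That is where the active-parameter savings come from.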

Implications for Developers and Organizations

The cost reduction creates several opportunities:

Accessible AI for Startups

Cost-conscious startups building initial products can access frontier-level coding AI without prohibitive expenses.

Increased Development Velocity

Development teams can use AI assistance more freely without budget constraints limiting usage.

Competitive Pressure

If DeepSeek V4 matches or exceeds GPT-4/Claude performance at 1-2% of their training cost, Western AI companies will face pressure to reduce pricing or improve efficiency.

Local Deployment Economics

For organizations running V4 locally, the cost savings compound through elimination of API fees and per-token charges.
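Whether self-hosting beats API pricing depends on volume, and the break-even point is simple arithmetic. All prices below are hypothetical placeholders, since V4 pricing has not been announced. Substitute real API rates and your own hardware, power, and operations costs.

```python
def monthly_api_cost(tokens_per_month: float, price_per_million: float) -> float:
    """API spend for one month at a blended per-million-token price."""
    return tokens_per_month / 1_000_000 * price_per_million


def break_even_tokens(fixed_monthly_cost: float, price_per_million: float) -> float:
    """Monthly token volume above which a fixed-cost local deployment is cheaper."""
    return fixed_monthly_cost / price_per_million * 1_000_000


# Hypothetical numbers for illustration only.
frontier_price = 10.0                     # $ per million tokens
discounted_price = frontier_price / 10    # the "10x cheaper" scenario
self_host_monthly = 3_000.0               # amortized GPUs, power, and operations per month
volume = 500_000_000                      # tokens per month

print(monthly_api_cost(volume, frontier_price))
print(monthly_api_cost(volume, discounted_price))
print(break_even_tokens(self_host_monthly, frontier_price))
print(break_even_tokens(self_host_monthly, discounted_price))
```

The two savings interact. A 10x cheaper API also raises, by 10x, the monthly volume at which self-hosting starts to pay off. Under these placeholder numbers that is 300 million tokens versus 3 billion.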

Local Deployment and Hardware Requirements

One of DeepSeek V4’s strategic advantages is its accessibility for local deployment on consumer-grade hardware.

Running V4 Locally

DeepSeek V4 is reportedly designed for two hardware tiers: a consumer tier (dual NVIDIA RTX 4090s or a single RTX 5090) and an enterprise tier (standard data center GPU configurations).

This accessibility represents a significant departure from the trend toward ever-larger hardware requirements. Running a state-of-the-art coding model on hardware that fits in a standard workstation opens possibilities for developers who need air-gapped environments or prefer local deployment for security reasons.

Expected Architecture

If DeepSeek follows the V3 architecture pattern, V4 will likely feature:

- Total parameters: ~671 billion (MoE architecture)

- Active parameters: ~37 billion per inference

- Quantization support: FP8 or INT4 for reduced memory requirements

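- Memory footprint: Even at INT4, ~671 billion parameters need roughly 335 GB for the weights alone, well beyond the 32-48 GB of VRAM on the consumer configurations above. Consumer-tier deployment would therefore depend on offloading most weights to system RAM, on a substantially smaller model, or on both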
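The memory footprint is worth checking with arithmetic, because weight storage scales directly with parameter count and precision. The sketch counts weights only; KV cache and activations add more on top. It uses the ~671B total and ~37B active figures assumed above, not confirmed V4 specifications. The VRAM capacities are the published 24 GB (RTX 4090) and 32 GB (RTX 5090).

```python
BITS = {"FP16": 16, "FP8": 8, "INT4": 4}


def weight_gb(num_params: int, bits: int) -> float:
    """Gigabytes (10^9 bytes) needed to store the weights alone."""
    return num_params * bits / 8 / 1e9


TOTAL_PARAMS = 671_000_000_000   # assumed V3-style total
ACTIVE_PARAMS = 37_000_000_000   # assumed active parameters per token
CONSUMER_VRAM_GB = {"2x RTX 4090": 48, "1x RTX 5090": 32}

for name, bits in BITS.items():
    total = weight_gb(TOTAL_PARAMS, bits)
    active = weight_gb(ACTIVE_PARAMS, bits)
    print(f"{name}: all weights {total:,.1f} GB, active per token {active:,.1f} GB")

for rig, vram in CONSUMER_VRAM_GB.items():
    fits = weight_gb(TOTAL_PARAMS, 4) <= vram
    print(f"{rig} ({vram} GB) holds all INT4 weights: {fits}")
```

Even at INT4, the full weight set is about 335.5 GB. Only the roughly 18.5 GB touched per token fits the range of a single high-end card. This is why offloading inactive experts to system RAM is a common approach for running large MoE models on consumer hardware.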

Advantages of Local Deployment

Data Privacy and Security

Organizations with strict data governance requirements can run V4 entirely within their own infrastructure, eliminating concerns about sending proprietary code to external APIs.

Air-Gapped Environments

Development teams working in secure facilities can leverage V4’s capabilities without network connectivity, valuable for classified projects or systems with strict network isolation.

Cost Control at Scale

Self-hosting enables organizations to optimize inference costs through techniques like quantization, batching, and custom hardware deployment. At scale, this can be significantly more economical than API-based pricing.

Customization and Fine-Tuning

Open weights enable researchers and developers to fine-tune V4 for specific programming languages, frameworks, or organizational coding standards.

DeepSeek’s Strategic Position in the Global AI Race

DeepSeek’s emergence as a competitive force in AI represents more than incremental technological progress—it signals a fundamental shift in the global AI landscape.

The Chinese AI Ecosystem

DeepSeek has positioned itself as a champion of open-source AI, a strategy that distinguishes it from many Western counterparts and has triggered an “arms race” in China.

The company, headquartered in Hangzhou, has emerged as a significant contributor to China’s broader initiative to build a self-reliant and competitive AI ecosystem. This positioning reflects both technological ambition and strategic considerations within the global AI landscape.

Building on Previous Success

DeepSeek’s earlier models established credibility:

DeepSeek-V3 (December 2024): Attracted attention for strong benchmark performance and gained praise from influential technology sector figures.

DeepSeek-R1 (January 2025): The company’s reasoning model demonstrated capabilities in complex problem-solving and logical inference. The R1 model sent shockwaves from Silicon Valley to Wall Street on January 27, 2025, triggering a roughly $1 trillion tech stock selloff, including nearly $600 billion in NVIDIA market value alone.

The Efficiency Philosophy

DeepSeek’s approach fundamentally challenges the “bigger is better” paradigm that has dominated AI development. Co-founder Liang Wenfeng, who co-authored both the Engram and mHC papers, has stated the company’s commitment to achieving more with less compute.

This philosophy manifests in:

- Algorithmic innovation over brute-force scaling

- Architectural efficiency through techniques like Engram and mHC

- Cost optimization making advanced AI accessible to broader audiences

- Open-source strategy accelerating community innovation

Global Ambitions

While rooted in China’s AI ecosystem, DeepSeek clearly harbors global ambitions. The company’s emphasis on performance benchmarking against international competitors and focus on universally relevant capabilities like coding assistance signal intentions to compete worldwide.

Security, Privacy, and Compliance Considerations

As DeepSeek V4 gains attention, organizations must evaluate security and compliance implications before adoption.

Data Privacy Concerns

Organizations handling proprietary code need assurance that their intellectual property remains secure and confidential when using AI coding assistants. Key questions include:

- How does V4 handle sensitive code and proprietary information?

- What data retention and privacy policies apply?

- Are there options for on-premises or private deployment?

- What security certifications or audits are available?

Regulatory Compliance

Companies in regulated industries must ensure AI tools meet specific compliance requirements:

- Data handling procedures and audit trail maintenance

- Geographic or jurisdictional restrictions

- Applicable regulations and standards (HIPAA, GDPR, SOC 2 audit requirements)

- Export control considerations

Cross-Border Considerations

DeepSeek’s rising profile has brought increased scrutiny, particularly regarding security and privacy practices, reflecting broader tensions in the global AI landscape.

Some countries and organizations maintain policies restricting AI services based in specific jurisdictions, which could limit V4’s adoption in certain markets. Organizations must evaluate their policies regarding Chinese-origin AI tools.

Mitigation Through Local Deployment

If V4 is released with open weights, local deployment would address many of these security concerns:

- Air-gapped operation: No data leaves the organization

- Full control: Organization maintains complete control over the model

- Audit capability: Open weights enable security auditing

- No third-party risk: Eliminates concerns about external API providers

How to Prepare for DeepSeek V4’s Launch

Organizations and developers interested in evaluating V4 should begin preparation now.

Develop an Evaluation Framework

Define Success Metrics

Establish clear, measurable criteria for evaluating coding AI performance relevant to your specific needs:

- Code generation accuracy for your programming languages and frameworks

- Debugging effectiveness on your typical error types

- Documentation quality and comprehensiveness

- Time savings on common development tasks

- Developer satisfaction and workflow integration

Create Test Scenarios

Prepare representative coding challenges that reflect your actual development work:

- Realistic refactoring tasks from your codebase

- Common debugging scenarios your team encounters

- Architecture design questions for your problem domain

- Code review cases representing your quality standards

- Documentation generation for your typical components

Establish Comparison Baseline

Document current performance with existing AI coding tools to enable meaningful comparisons with V4.
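The evaluation framework comes down to running the same task set through each tool and comparing the same metrics. Here is a minimal sketch of the bookkeeping. It assumes each test scenario can be scored pass/fail, for example whether a generated patch passes your test suite, and can be timed. The tool labels, task ids, and `TaskResult` fields are hypothetical.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class TaskResult:
    tool: str        # e.g. "baseline" (current assistant) or "candidate" (V4)
    task_id: str     # one of your representative scenarios
    passed: bool     # did the output meet your success criterion?
    seconds: float   # wall-clock time to a usable answer


def summarize(results, tool):
    """Pass rate and median time for one tool across its recorded tasks."""
    rows = [r for r in results if r.tool == tool]
    if not rows:
        raise ValueError(f"no results for {tool!r}")
    return {
        "tasks": len(rows),
        "pass_rate": sum(r.passed for r in rows) / len(rows),
        "median_seconds": median([r.seconds for r in rows]),
    }


def compare(results, baseline, candidate):
    """Candidate minus baseline per metric (higher pass_rate and lower time are better)."""
    b, c = summarize(results, baseline), summarize(results, candidate)
    return {k: c[k] - b[k] for k in ("pass_rate", "median_seconds")}


results = [
    TaskResult("baseline", "refactor-auth", True, 40.0),
    TaskResult("baseline", "fix-race", False, 95.0),
    TaskResult("baseline", "write-docs", True, 30.0),
    TaskResult("candidate", "refactor-auth", True, 35.0),
    TaskResult("candidate", "fix-race", True, 70.0),
    TaskResult("candidate", "write-docs", True, 25.0),
]
print(compare(results, "baseline", "candidate"))
```

Recording baseline results now, before V4 is available, lets you compare like-for-like on launch day.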

Conduct Risk Assessment

Security Review

- How does V4 handle sensitive code and proprietary information?

- What data retention and privacy policies apply?

- Are there options for on-premises or private deployment?

- What security certifications or audits are available?

Compliance Check

- Does V4 meet regulatory requirements for your industry?

- Can you maintain necessary audit trails when using the tool?

- Are there geographic or jurisdictional restrictions?

Operational Impact

- How does V4 integrate with your existing development tools?

- What training will developers need to use it effectively?

- What are the cost implications at your anticipated usage scale?

Plan a Structured Pilot

Consider implementing V4 through a structured pilot program:

1. Start Small: Select a small team or specific project for initial evaluation

2. Define Timeline: Establish a clear evaluation period (4-6 weeks)

3. Gather Feedback: Create structured mechanisms for collecting developer experiences

4. Measure Impact: Track relevant metrics throughout the pilot

5. Make Decision: Use pilot data to inform broader adoption decisions

Prepare Infrastructure

For Local Deployment:

- Verify hardware requirements match your available resources

- Plan for quantization options if memory is constrained

- Set up isolated testing environment

- Prepare deployment tooling (Ollama, vLLM, etc.)

For API Access:

- Review API documentation when available

- Plan integration with existing development workflows

- Establish usage monitoring and cost tracking

- Prepare fallback options
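Usage monitoring and cost tracking can start as a thin wrapper that records token counts from each API response. The sketch assumes each response reports prompt and completion token counts. OpenAI-compatible APIs, which DeepSeek’s current API follows, return these in a `usage` object. The per-million prices, the budget, the team names, and the class name are hypothetical placeholders.

```python
from collections import defaultdict


class UsageTracker:
    """Accumulate token usage per team and flag when total spend crosses a budget."""

    def __init__(self, input_price_per_m: float, output_price_per_m: float, monthly_budget: float):
        self.input_price = input_price_per_m
        self.output_price = output_price_per_m
        self.budget = monthly_budget
        self.tokens = defaultdict(lambda: [0, 0])  # team -> [prompt_tokens, completion_tokens]

    def record(self, team: str, prompt_tokens: int, completion_tokens: int) -> None:
        self.tokens[team][0] += prompt_tokens
        self.tokens[team][1] += completion_tokens

    def cost(self, team=None) -> float:
        """Dollar cost for one team, or for all teams when `team` is None."""
        teams = [team] if team is not None else list(self.tokens)
        total = 0.0
        for t in teams:
            prompt, completion = self.tokens[t]
            total += prompt / 1e6 * self.input_price + completion / 1e6 * self.output_price
        return total

    def over_budget(self) -> bool:
        return self.cost() > self.budget


tracker = UsageTracker(input_price_per_m=0.5, output_price_per_m=2.0, monthly_budget=100.0)
tracker.record("platform", prompt_tokens=120_000, completion_tokens=8_000)
tracker.record("mobile", prompt_tokens=40_000, completion_tokens=2_000)
print(f"${tracker.cost():.4f}, over budget: {tracker.over_budget()}")
```

Separate input and output prices matter because most APIs charge more for completion tokens. Long-context prompts can shift spend heavily toward the input side.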

The Broader AI Coding Assistant Landscape

DeepSeek V4 enters a rapidly evolving market where AI coding assistants are becoming standard tools in professional software development.

Current Market Players

GitHub Copilot: Widely adopted with deep IDE integration and Microsoft backing

Amazon Q Developer (formerly CodeWhisperer): Cloud-focused solution with tight AWS integration

Tabnine: Privacy-focused with on-premises deployment options

Cursor: AI-first code editor gaining significant developer traction

ChatGPT/Claude: General-purpose models frequently adapted for coding assistance

Emerging Industry Trends

Agentic AI

Moving beyond code completion to AI agents that execute complex, multi-step development tasks with minimal human intervention.

Specialized Domain Models

Development of AI models trained specifically for particular programming languages, frameworks, or application domains.

Security-First Design

Increased emphasis on AI tools that actively identify and prevent security vulnerabilities during code generation.

Collaborative AI

Tools designed to enhance team productivity through AI-facilitated code review, pair programming, and knowledge sharing.

Testing Integration

AI systems that automatically generate comprehensive test suites alongside implementation code.

Market Impact of V4

DeepSeek V4’s arrival will likely:

- Increase competitive pressure on established providers

- Accelerate price reductions across the AI coding assistant market

- Validate specialized models over general-purpose approaches

- Raise baseline expectations for context window capabilities

- Expand access to frontier AI coding assistance

What DeepSeek V4 Means for Different Stakeholders

For Individual Developers

- V4 represents a potentially powerful alternative with reported superior coding performance

- Long-context capabilities could significantly improve productivity on complex projects

- Cost-effectiveness may make advanced AI assistance more accessible

- Worth evaluating once launched, particularly if current tools feel limited

For Development Teams

- Monitor V4’s performance in independent benchmarks and real-world usage

- Prepare evaluation frameworks to assess team fit

- Consider security and compliance implications before adoption

- View as opportunity to negotiate better terms with current AI tool providers

For Startups and Small Organizations

- Cost efficiency makes frontier AI coding capabilities accessible

- Local deployment options reduce dependency on expensive cloud services

- Open-source nature enables customization for specific needs

- Levels the playing field against competitors with larger budgets

For Enterprise Organizations

- Evaluate security and compliance requirements carefully

- Consider pilot programs before broad deployment

- Assess integration with existing development infrastructure

- Weigh benefits of local deployment against API convenience

For the AI Industry

- Increased competition benefits the entire developer ecosystem

- Specialized models may outperform general-purpose alternatives for specific tasks

- Long-context processing could become an expected baseline feature

- Global AI competition is driving rapid innovation in developer tools

Frequently Asked Questions About DeepSeek V4

When is the DeepSeek V4 release date?

DeepSeek V4 is expected to launch in mid-February 2026, likely around February 17 to coincide with the Lunar New Year celebrations. The company has not officially confirmed the exact date but multiple reports point to this timeframe based on insider information.

What is the DeepSeek V4 paper about?

The paper usually referred to as the DeepSeek V4 paper is the “Engram” paper, published January 13, 2026, which introduces a conditional memory architecture. It describes how Engram separates static knowledge retrieval from dynamic reasoning, enabling more efficient AI processing. A companion paper on mHC (Manifold-Constrained Hyper-Connections) addresses training stability for large-scale models.

How does DeepSeek V4 compare to Claude and GPT-4?

According to internal testing, DeepSeek V4 outperforms Claude 3.5 Sonnet and GPT-4o specifically on coding tasks. While independent verification is pending, V4’s long-context capabilities (1M+ tokens) and specialized coding focus give it advantages for software development workflows compared to general-purpose models.

Can I run DeepSeek V4 locally?

Reportedly, yes. DeepSeek V4 is said to be designed to run on consumer-grade hardware, including dual RTX 4090s or a single RTX 5090. An open-weight release with quantization support would make local deployment accessible to developers who need air-gapped environments or prefer private deployment.

What is Engram memory in DeepSeek V4?

Engram is a conditional memory module that performs O(1) lookups for static patterns like named entities and common phrases. Instead of forcing the model to “remember” facts through expensive neural computation, Engram uses hash-based lookups that retrieve pre-stored information instantly, freeing GPU resources for complex reasoning.

How much will DeepSeek V4 cost to use?

While official pricing hasn’t been announced, DeepSeek V4 is expected to reduce costs by approximately 10x compared to frontier AI models from OpenAI and Anthropic. The combination of efficient architecture, local deployment options, and DeepSeek’s cost-focused strategy should make V4 highly economical.

What programming languages does DeepSeek V4 support?

As a general coding model, DeepSeek V4 should support all major programming languages including Python, JavaScript, TypeScript, Java, C++, Go, Rust, and others. The long-context capability is particularly valuable for polyglot codebases that mix multiple languages.

Is DeepSeek V4 open source?

DeepSeek is expected to release V4 as an open-weight model under permissive licensing, following the pattern established with V3 and R1. This would allow developers to download, modify, and deploy the model locally; the exact license terms will be confirmed at release.

What are the hardware requirements for DeepSeek V4?

Expected requirements include dual NVIDIA RTX 4090s or single RTX 5090 for consumer deployment. With quantization (FP8 or INT4), requirements may be lower. Enterprise deployments can use standard data center GPU configurations. Exact specifications will be confirmed at launch.

How does DeepSeek V4 handle code security and privacy?

For local deployments, DeepSeek V4 operates entirely within your infrastructure with no data transmitted externally. For API usage, security and privacy policies will be detailed at launch. Organizations with strict data governance should prioritize local deployment for maximum control.

Can DeepSeek V4 understand my entire codebase at once?

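For many codebases, yes. At a rough 4 characters per token, a 1-million-token window holds about 4 MB of source text. That is enough for small and mid-sized repositories in a single pass, while very large monorepos may still need to be split. Within that limit, V4 could support repository-level understanding, cross-file dependency tracking, and comprehensive architectural analysis without context fragmentation.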

Will DeepSeek V4 work with my IDE?

Integration details will be revealed at launch. Based on V3’s approach, expect API access that can be integrated with popular IDEs through extensions or plugins. The open-source nature should facilitate community development of IDE integrations for Visual Studio Code, JetBrains products, and others.

How does the long-context capability work technically?

DeepSeek V4’s long-context processing combines Engram’s efficient memory retrieval with optimized sparse attention mechanisms. The Engram architecture offloads static pattern recognition to O(1) lookups, preserving computational capacity for complex reasoning across extensive contexts.

What benchmarks will prove DeepSeek V4’s performance?

Key benchmarks include SWE-bench Verified (currently led by Claude Opus 4.5 at 80.9%), HumanEval for code generation, MBPP for Python programming, CodeContests for competitive programming, and domain-specific tests for reasoning (BBH), math (MATH, GSM8K), and knowledge (MMLU).

Is DeepSeek V4 suitable for production use?

Suitability depends on your security requirements, compliance needs, and risk tolerance. Organizations should conduct thorough evaluation pilots before production deployment. The open-source nature enables security auditing and local deployment, which may satisfy security-conscious organizations.

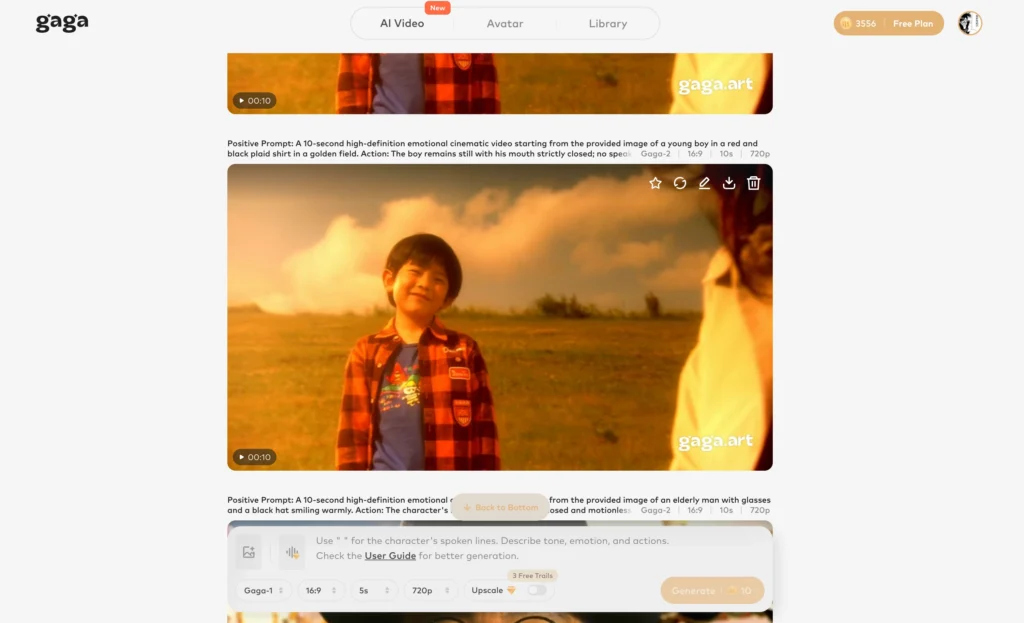

Bonus: Enhance Your AI Workflow with Gaga AI Video Generator

While DeepSeek V4 revolutionizes coding workflows, creating compelling visual content for documentation, presentations, and marketing remains essential for developers and teams. Gaga AI video generator complements your development toolkit with powerful multimedia creation capabilities.

Gaga AI Features for Developers

Image to Video AI

Transform static screenshots, diagrams, and UI mockups into engaging video demonstrations. Perfect for creating:

- Product demo videos from static designs

- Tutorial content showing step-by-step workflows

- Animated explanations of complex algorithms

- Visual documentation that’s more engaging than text

AI Avatar Generator

Create professional presenter avatars for:

- Tutorial videos and online courses

- Product announcement videos

- Team introduction videos

- Developer conference presentations

Voice Clone Technology

Generate consistent narration for:

- Technical documentation videos

- Product walkthroughs

- Tutorial series with unified voice

- Multi-language versions of training content

Text-to-Speech (TTS)

Convert written documentation into:

- Audio versions for accessibility

- Podcast-style technical content

- Voice-guided tutorials

- Hands-free learning materials

Why Developers Need Multimedia Tools

Modern software development extends beyond code. Effective teams need to:

- Document features with video demonstrations

- Create tutorials for onboarding and training

- Present at conferences with engaging visual content

- Market products with professional videos

- Communicate remotely with asynchronous video updates

Gaga AI provides these capabilities without requiring video production expertise, allowing developers to focus on code while still creating professional multimedia content.

Integration with Your Development Workflow

Use Gaga AI to:

1. Document releases: Turn changelogs into video release notes

2. Explain architecture: Animate system diagrams for stakeholder presentations

3. Create demos: Transform Figma designs into working product demonstrations

4. Build courses: Develop training materials for new team members

5. Share updates: Create async video updates for distributed teams

By combining DeepSeek V4’s coding excellence with Gaga AI’s multimedia capabilities, development teams can optimize both their technical implementation and their communication workflows.

Conclusion: The February 2026 AI Coding Milestone

DeepSeek V4’s expected mid-February 2026 launch represents a significant milestone in AI-assisted software engineering. With its revolutionary Engram memory architecture, 1M+ token context windows, and cost-efficient operation, V4 has the potential to reshape expectations for AI coding assistants.

Key Implications

For Technology: The Engram architecture demonstrates that algorithmic innovation can match or exceed brute-force scaling, potentially establishing a new paradigm for AI model design.

For Developers: V4 offers a powerful alternative to existing tools with specialized coding capabilities, long-context processing, and cost-effective pricing that makes frontier AI accessible to broader audiences.

For Industry: Increased competition from DeepSeek accelerates innovation across the entire AI coding assistant ecosystem, benefiting all developers through lower costs, better features, and more options.

Looking Ahead

V4’s success will depend on multiple factors beyond pure technical performance. Security, privacy, compliance considerations, and real-world usability will determine adoption. Independent benchmarks and community feedback will validate or challenge DeepSeek’s internal performance claims.

Regardless of whether V4 becomes the dominant coding AI, its arrival is welcome news for the developer community. More competition drives innovation, reduces costs, and expands options—ultimately benefiting everyone who relies on AI assistance for software development.

As the February launch approaches, developers and organizations should prepare evaluation frameworks, consider pilot programs, and stay informed about V4’s capabilities and limitations. The AI coding landscape is evolving rapidly, and DeepSeek V4 promises to be a transformative force in that evolution.