Key Takeaways

- LongCat video AI generates videos up to 4 minutes using a 13.6B parameter model released under MIT license

- Supports three modes: text-to-video, image-to-video, and video continuation in one unified architecture

- Requires GPU infrastructure (CUDA-compatible) and Python 3.10+ for local deployment

- Scores 3.38/5 in quality benchmarks, comparable to commercial solutions like PixVerse-V5

- Best for developers and researchers who need customization; non-technical users should consider cloud alternatives like Gaga AI

Table of Contents

What Is LongCat AI Video Generator?

Direct Answer: LongCat AI video generator is an open-source, 13.6-billion-parameter deep learning model developed by Meituan that converts text prompts and static images into video sequences up to 4 minutes long.

Released in late 2024, the longcat video AI system addresses a specific technical challenge in AI video generation: maintaining visual consistency across extended durations. Most AI video models degrade in quality after 30-60 seconds due to temporal drift and color inconsistency. The long cat video AI model solves this through native pretraining on Video-Continuation tasks.

Core Technical Specifications

The longcat AI video generator operates with these parameters:

- Model size: 13.6B parameters (dense architecture, all activated)

- Output resolution: 720p at 30 frames per second

- Maximum length: 240 seconds (4 minutes)

- Inference strategy: Coarse-to-fine generation along temporal and spatial axes

- Attention mechanism: Block Sparse Attention for computational efficiency

- License: MIT (permits commercial use without restrictions)

How Does LongCat Video AI Work?

Direct Answer: The longcat video AI model uses a three-stage process:

(1) coarse spatial layout generation,

(2) temporal coherence modeling, and

(3) fine detail refinement, all within a unified transformer architecture.

The Technical Architecture

Unlike mixture-of-experts (MoE) models that route inputs to specialized sub-networks, the longcat ai video generator uses a dense architecture. This design choice results in:

- Simpler deployment (no routing overhead)

- More predictable memory usage

- Competitive performance compared to the 28B parameter MoE alternatives

The model employs Group Relative Policy Optimization (GRPO) with multi-reward signals during training. This approach balances:

1. Text-prompt alignment (semantic accuracy)

2. Motion quality (physical plausibility)

3. Visual fidelity (aesthetic coherence)

Why It Handles Long Videos Better

Traditional video diffusion models generate frames sequentially, accumulating error over time. The longcat video AI system instead:

- Pretrains on continuation tasks (learning to extend existing video segments)

- Uses block sparse attention to maintain long-range temporal dependencies

- Applies temporal smoothing across generated segments

This architecture enables users to generate a base clip, then iteratively extend it with new prompts—similar to writing chapters in a story rather than generating an entire narrative at once.

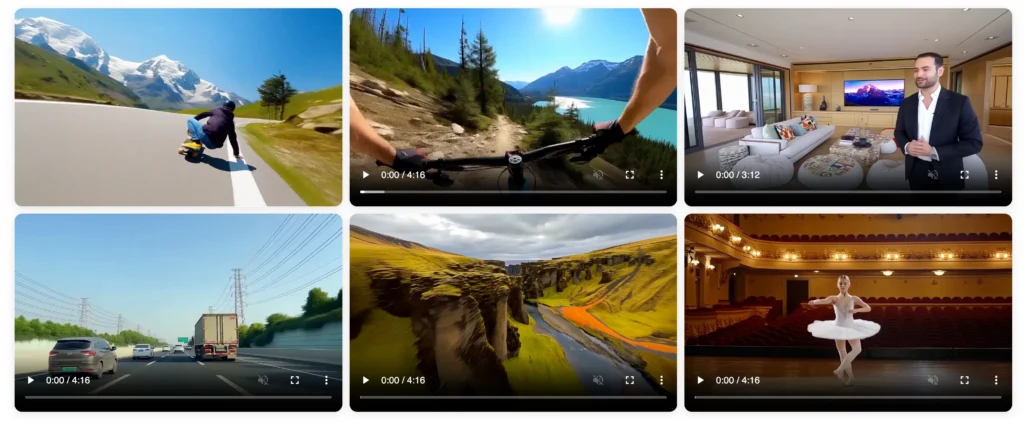

What Are the Three Modes of LongCat AI Video Generator?

Direct Answer: The longcat ai video generator operates in three distinct modes within one model: text-to-video (T2V), image-to-video (I2V), and video continuation.

Mode 1: Text-to-Video (T2V)

Input a natural language prompt, and the longcat video ai generates a complete video sequence.

Example prompt: “A woman in a white dress performs ballet on a frozen lake surface, her reflection visible in the ice, golden hour lighting”

Use cases:

- Concept visualization for storyboards

- Social media content creation

Mode 2: Image-to-Video (I2V)

With the image to video AI feature, upload a static image, and the long cat video ai animates it with realistic motion.

Technical note: The model infers motion patterns from the image composition (e.g., a person mid-jump suggests continuation of that motion arc).

Use cases:

- Product demonstrations (animating product photos)

- E-commerce listings (showing 360° views from a single image)

- Photo enhancement (bringing still images to life)

You may like: The Ultimate Guide to the Best Image to Video AI Generators in 2025: Free Tools & Pro Tips

Mode 3: Video Continuation

Provide an existing video clip, and the longcat video ai extends it with coherent new frames.

Critical advantage: This mode enables narrative storytelling by chaining multiple prompts. Generate a 30-second base clip, then extend it three times to reach 2 minutes of cohesive content.

Use cases:

- Extending stock footage

- Creating serialized content

- Building longer narratives from shorter clips

How Do You Set Up LongCat AI Video Generator?

Direct Answer: Setting up the longcat ai video generator requires a CUDA-compatible GPU, Python 3.10+, PyTorch 2.6.0+, and FlashAttention-2, followed by cloning the GitHub repository and downloading model weights from Hugging Face.

Prerequisites Checklist

Before installing longcat video ai, verify your system meets these requirements:

Hardware:

- NVIDIA GPU with CUDA support (minimum 16GB VRAM recommended)

- For multi-GPU setups: 2-4 GPUs for parallel inference

- 64GB+ system RAM for longer video generation

Software:

- Python 3.10 or later

- PyTorch 2.6.0 or later with CUDA support

- FlashAttention-2 (or FlashAttention-3/xformers as alternatives)

- Git for repository cloning

Step-by-Step Installation

Step 1: Clone the Repository

git clone https://github.com/meituan/LongCat-Video.git

cd LongCat-Video

Step 2: Create Virtual Environment

python3.10 -m venv longcat_env

source longcat_env/bin/activate # On Windows: longcat_env\Scripts\activate

Step 3: Install Dependencies

pip install torch==2.6.0 torchvision torchaudio –index-url https://download.pytorch.org/whl/cu118

pip install -r requirements.txt

pip install flash-attn –no-build-isolation

Step 4: Download Model Weights

The longcat ai video generator weights are hosted on Hugging Face:

# Using huggingface-cli (recommended)

huggingface-cli download meituan/LongCat-Video –local-dir ./models/

# Or using git-lfs

git lfs install

git clone https://huggingface.co/meituan/LongCat-Video ./models/

Step 5: Verify Installation

python -c “import torch; print(torch.cuda.is_available())” # Should return True

python verify_setup.py # Included in the repository

Common Installation Issues

Problem: CUDA out of memory during inference

Solution: Reduce batch size in config.yaml or use gradient checkpointing

Problem: FlashAttention compilation fails

Solution: Use xformers as alternative: pip install xformers

Problem: Model download interrupted

Solution: Resume with huggingface-cli download –resume-download meituan/LongCat-Video

How Do You Generate Videos with LongCat Video AI?

Direct Answer: Run inference scripts from the command line with either text prompts (inference_t2v.py), image files (inference_i2v.py), or existing videos (inference_continuation.py), specifying generation parameters in the command arguments.

Basic Text-to-Video Generation

python inference_t2v.py \

–prompt “A skateboarder performs a kickflip in slow motion, urban skatepark setting” \

–duration 30 \

–resolution 720p \

–fps 30 \

–output ./outputs/skateboard_kickflip.mp4

Key parameters:

- –prompt: Natural language description (be specific about motion, lighting, camera angle)

- –duration: Video length in seconds (maximum 240)

- –seed: Integer for reproducible results

- –guidance_scale: Controls prompt adherence (default 7.5)

Image-to-Video Generation

python inference_i2v.py \

–image_path ./inputs/product_photo.jpg \

–motion_prompt “Rotate 360 degrees, smooth camera movement” \

–duration 15 \

–output ./outputs/product_demo.mp4

Best practices:

- Use high-resolution input images (1024×1024 or larger)

- Specify motion explicitly in the motion_prompt parameter

- Shorter durations (10-20 seconds) yield more stable results for I2V

Creating Long-Form Videos with Continuation

For videos beyond 60 seconds, use the sequential generation approach:

python inference_long_video.py \

–prompts “A woman enters a modern bathroom” \

“She approaches the mirror and adjusts her hair” \

“She washes her hands at the sink” \

“She dries her hands with a towel” \

–segment_duration 15 \

–output ./outputs/bathroom_sequence.mp4

Each prompt generates a segment that connects seamlessly to the previous one.

Using the Web Interface

For users who prefer a graphical interface, the longcat ai video generator includes a Streamlit app:

streamlit run app.py

This launches a local web server (typically at http://localhost:8501) with form fields for prompts, file uploads, and parameter adjustments.

How Does LongCat AI Video Generator Perform Compared to Alternatives?

Direct Answer: LongCat video ai scores 3.38/5 in overall quality evaluations, performing comparably to commercial solutions like PixVerse-V5 and Google’s Veo3 on specific metrics, while outperforming most open-source alternatives in temporal consistency.

Quantitative Benchmark Results

Meituan’s published evaluations tested the longcat ai video generator against both open-source and commercial models:

| Model | Overall Quality | Visual Quality | Motion Quality | Text Alignment |

| LongCat Video AI | 3.38/5 | 3.25/5 | 3.41/5 | 3.48/5 |

| Wan 2.2-T2V-A14B | 3.35/5 | 3.22/5 | 3.39/5 | 3.44/5 |

| PixVerse-V5 | 3.42/5 | 3.28/5 | 3.45/5 | 3.51/5 |

| Google Veo3 | 3.55/5 | 3.40/5 | 3.58/5 | 3.67/5 |

Key findings:

- The longcat video ai excels in text alignment (3.48/5), meaning it follows prompts accurately

- Visual quality (3.25/5) lags slightly behind top commercial models

- Motion quality (3.41/5) is competitive, particularly for extended durations

Qualitative Strengths

Where longcat ai video generator outperforms alternatives:

1. Temporal consistency beyond 60 seconds – Commercial models often show quality degradation after 1 minute; long cat video ai maintains coherence up to 4 minutes

2. Narrative continuity – The continuation mode produces more coherent multi-segment videos than chaining outputs from other models

3. Deployment flexibility – As open-source software, it can be fine-tuned, modified, and integrated into custom pipelines

Where it falls short:

1. Inference speed – Slower than optimized commercial APIs (minutes vs. seconds)

2. Aesthetic refinement – Generated videos sometimes lack the polished look of proprietary models

3. Prompt sensitivity – Requires more specific, detailed prompts than some user-friendly alternatives

What Are the Licensing Terms for LongCat Video AI?

Direct Answer: LongCat ai video generator is released under the MIT License, which permits unlimited commercial use, modification, and distribution without royalties or usage restrictions.

What the MIT License Means in Practice

You can:

- Use the longcat video ai in commercial products and services

- Modify the source code for proprietary applications

- Distribute your modifications under any license

- Integrate it into SaaS platforms without disclosure requirements

You must:

- Include the original MIT license text in distributions

- Attribute the original work to Meituan

You cannot:

- Hold Meituan liable for damages from model outputs

- Use Meituan’s trademarks without permission

Comparison to Other Model Licenses

Many AI models use restrictive licenses:

- Stable Video Diffusion: Requires attribution and non-compete clauses for commercial use

- Runway Gen-2: Proprietary API with usage-based pricing

- Pika Labs: Closed-source with limited commercial licensing

The longcat video ai’s MIT license is unusually permissive for a model of this capability level.

When Should You Use LongCat AI Video Generator vs. Cloud Alternatives?

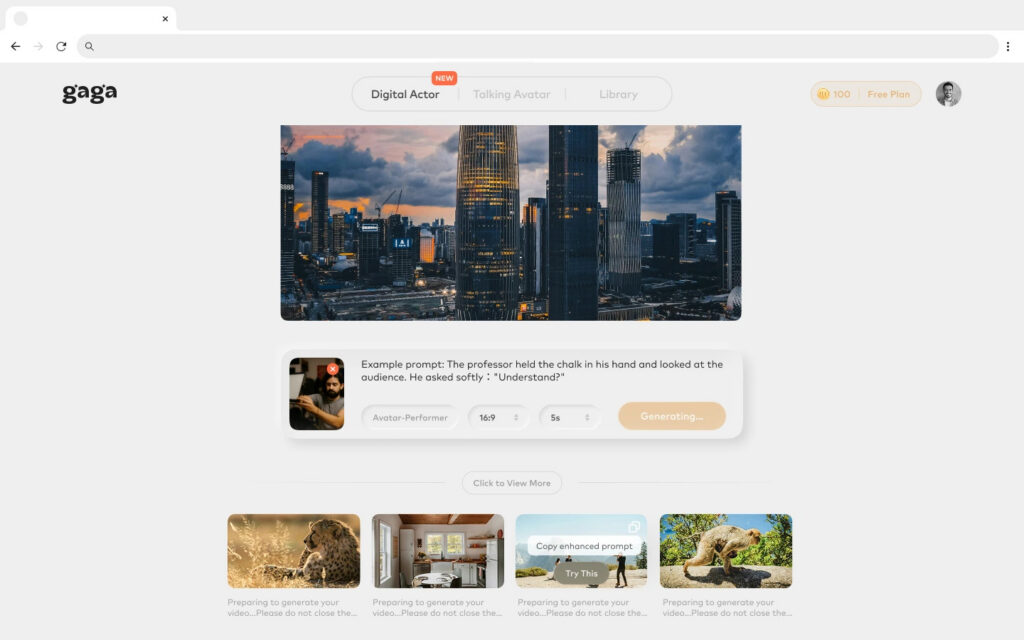

Direct Answer: Use longcat AI video generator when you need maximum customization, data privacy, or batch processing at scale; use cloud services like Gaga AI when you prioritize ease of use, minimal setup, or inconsistent hardware access.

Decision Framework

Choose the longcat AI video generator if:

1. You have GPU infrastructure – Already own CUDA-compatible GPUs or can provision them affordably

2. Data privacy is critical – Need to keep prompts and outputs on-premises (e.g., confidential product demos)

3. Customization required – Plan to fine-tune the model on proprietary data or modify the architecture

4. High-volume production – Generating hundreds of videos monthly (cloud APIs charge per generation)

5. Research or development – Studying video generation techniques or building on top of the model

Choose cloud services (like Gaga AI) if:

1. No GPU access – Don’t own or want to manage GPU infrastructure

2. Occasional use – Generate a few videos weekly rather than daily batches

3. Non-technical team – Team lacks ML engineering expertise for model deployment

4. Instant setup needed – Start generating videos within minutes rather than hours of setup

5. Budget flexibility – Prefer pay-per-use pricing over upfront hardware investment

Cost Analysis Example

Scenario: Generate 100 videos per month (30 seconds each)

Self-hosted longcat video AI:

- GPU rental: $300-500/month (e.g., 1x A100 on cloud providers)

- Setup time: 4-8 hours (one-time)

- Maintenance: 2-3 hours/month

- Total first month: ~$500 + 12 hours labor

- Total subsequent months: ~$400 + 3 hours labor

Cloud API (Gaga AI or similar):

- Per-video cost: ~$0.50-2.00

- Setup time: 5 minutes

- Maintenance: None

- Total per month: ~$50-200

- Total time: <1 hour

Break-even point: Self-hosting becomes cost-effective around 250-500 videos/month, assuming you already have the technical expertise.

What Are the Current Limitations of LongCat Video AI?

Direct Answer: The longcat AI video generator’s primary limitations include high computational requirements (16GB+ VRAM), occasional prompt misinterpretation with complex scenes, and a lack of official support for real-time generation.

Technical Limitations

1. Hardware Barriers

The longcat video AI requires substantial resources:

- Minimum 16GB VRAM for basic generation

- 24GB+ VRAM recommended for 4-minute videos

- Multi-GPU setup is needed for batch processing

Impact: Excludes users with consumer-grade GPUs (e.g., RTX 3060 with 12GB VRAM struggles with longer videos)

2. Inference Speed

Generation times on single GPU:

- 30-second video: 3-5 minutes

- 2-minute video: 12-18 minutes

- 4-minute video: 25-35 minutes

Impact: Not suitable for interactive applications requiring near-instant results

3. Prompt Complexity Ceiling

The longcat AI video generator struggles with:

- Scenes involving 4+ distinct subjects

- Complex physical interactions (e.g., “two dancers lift a third person while spinning”)

- Precise spatial relationships (“place the red cube exactly 2 feet left of the blue sphere”)

Impact: Requires prompt engineering skills and iteration for complex compositions

Content Quality Limitations

1. Photorealism Gaps

While generally coherent, the long cat video ai occasionally produces:

- Unnatural facial expressions in close-ups

- Blurry textures in background elements

- Inconsistent lighting between frames

Severity: Noticeable in professional contexts but acceptable for drafts, storyboards, or social media

2. Motion Artifacts

In fast-motion scenes:

- Object boundaries may blur excessively

- Sudden camera movements cause temporal jitter

- Fine details (hair, fabric texture) can lose definition

Mitigation: Use shorter segment durations (15-30 seconds) and stitch them together

3. Limited Style Control

Unlike models trained on specific artistic styles:

- No built-in anime/cartoon modes

- Difficult to achieve specific cinematographic looks (e.g., “film noir lighting”)

- Style transfer requires fine-tuning the base model

Operational Limitations

1. No Official Web Demo

Users must self-deploy, which creates friction for:

- Content creators evaluating the model

- Stakeholders requiring proof-of-concept demonstrations

- Workshops or educational settings

2. Community Support Gaps

As a recent release:

- Limited third-party tutorials and documentation

- Smaller user community than established models (Stable Diffusion, etc.)

- Fewer pre-built integrations with creative tools (Adobe, DaVinci Resolve)

3. Evaluation Scope

The longcat ai video generator hasn’t been:

- Extensively tested for all content categories (medical visualization, architectural walkthroughs)

- Audited for bias in demographic representation

- Validated for accessibility features (e.g., generating videos with optimized closed captions)

Recommendation: Conduct internal testing for your specific use case before production deployment

How Is the AI Community Extending LongCat Video AI?

Direct Answer: The community is developing acceleration plugins (CacheDiT with 1.7x speedup), fine-tuning datasets for niche domains, and integrating the longcat AI video generator into creative tools like Blender and ComfyUI.

Notable Community Projects

1. CacheDiT Acceleration

Developed by an independent research group, this plugin:

- Implements DBCache and TaylorSeer optimizations

- Achieves 1.7x inference speedup without quality loss

- Reduces VRAM requirements by 15-20%

Installation: Available on GitHub as a drop-in replacement for default attention layers

2. Fine-Tuned Variants

Community members are training domain-specific versions:

- LongCat-Product: Optimized for e-commerce product demos (jewelry, apparel)

- LongCat-Anime: Fine-tuned on anime datasets for stylized content

- LongCat-Architecture: Specialized in architectural visualizations and walkthroughs

Access: Shared on Hugging Face as separate model repositories

3. Creative Tool Integrations

Early adopters are building:

- Blender plugin: Generate video textures and backgrounds directly in 3D scenes

- ComfyUI nodes: Drag-and-drop interface for the long cat video ai in visual workflows

- Automatic1111 extension: Integrate with existing Stable Diffusion pipelines

Contributing to Development

The longcat ai video generator’s MIT license encourages contributions:

Where to contribute:

- Report bugs and request features on GitHub Issues

- Submit pull requests for bug fixes or optimizations

- Share fine-tuned models on Hugging Face (attribute original model)

- Create tutorials, documentation, or video guides

Active areas needing contribution:

- Docker containerization for easier deployment

- Benchmark comparisons with newly released models

- Prompt engineering guides for specific industries

- Fine-tuning scripts for custom datasets

Frequently Asked Questions About LongCat AI Video Generator

Can LongCat AI video generator run on Mac M1/M2/M3 chips?

No, the longcat video AI currently requires NVIDIA GPUs with CUDA support. Apple Silicon (M1/M2/M3) uses Metal for GPU acceleration, which is incompatible with the CUDA-dependent libraries (FlashAttention, PyTorch CUDA extensions) that the long cat video AI requires.

Workaround: Use cloud GPU services (RunPod, Vast.ai) or wait for community ports to Apple Silicon (none confirmed as of December 2025).

How long does it take to generate a 4-minute video with LongCat video AI?

On a single NVIDIA A100 GPU, a 4-minute video takes approximately 25-35 minutes. Generation time scales roughly linearly with duration:

- 30 seconds: 3-5 minutes

- 1 minute: 6-10 minutes

- 2 minutes: 12-18 minutes

- 4 minutes: 25-35 minutes

Optimization tip: Use multi-GPU inference with context parallelization to reduce time by 40-60%.

Is LongCat AI video generator suitable for commercial production?

Yes, with caveats. The MIT license permits commercial use without restrictions. However, assess these factors:

1. Quality consistency: Review all outputs before publication (occasional artifacts may require regeneration)

2. Brand safety: The model hasn’t been specifically fine-tuned to avoid brand-unsafe content

3. Legal compliance: Ensure your prompts don’t infringe on third-party copyrights or trademarks

Recommendation: Use the longcat AI video generator for drafts, previews, and internal content; consider manual review or post-processing for client-facing deliverables.

Can you fine-tune LongCat video AI on custom datasets?

Yes, the model architecture supports fine-tuning, though Meituan hasn’t released official fine-tuning scripts. Community members report success with:

Approach 1: Low-Rank Adaptation (LoRA)

- Train lightweight adapter layers on custom data

- Requires 500-2000 video clips for reasonable results

- VRAM needs: 40GB+ (use gradient checkpointing to reduce)

Approach 2: Full fine-tuning

- Update all 13.6B parameters (requires multi-GPU cluster)

- Achieves better domain specialization, but computationally expensive

Resources: Check the GitHub Discussions tab for community fine-tuning guides and shared configurations.

What video formats does LongCat AI video generator output?

The longcat video AI generates MP4 files by default (H.264 codec, AAC audio track for silence). You can modify the output format in the config:

# In config.yaml

output:

format: ‘mp4’ # Options: mp4, webm, avi

codec: ‘h264’ # Options: h264, h265, vp9

bitrate: ‘5M’ # Higher = better quality, larger file

Note: The model generates a silent video; you must add audio separately in post-production.

Does LongCat video AI support upscaling to 4K resolution?

No, the longcat AI video generator’s maximum native resolution is 720p (1280×720 pixels). Upscaling to 4K would require:

Option 1: Use external video upscaling AI (Topaz Video AI, ESRGAN)

- Generates 4K from 720p but may introduce softness or artifacts

Option 2: Fine-tune the model for higher resolution

- Requires retraining on 4K datasets (computationally prohibitive for most users)

Current limitation: Higher resolutions drastically increase VRAM requirements (4K generation would need 80GB+ VRAM).

Can LongCat AI video generator create videos with audio?

No, the long cat video AI is a visual-only model. It generates video frames without accompanying audio. For audio:

Add audio in post-production:

1. Generate video with the longcat AI video generator

2. Use audio generation AI (MusicGen, AudioCraft) for music/SFX

3. Sync in video editing software (DaVinci Resolve, Adobe Premiere)

Future possibility: Community developers are discussing audio-conditioned variants that sync audio to video, but none have been released as of December 2025.

How does LongCat AI video generator handle copyrighted content in prompts?

The model will attempt to generate content matching any prompt, including those referencing copyrighted characters, brands, or works. You are legally responsible for ensuring your prompts and outputs don’t infringe copyrights.

Meituan’s guidance:

- Do not use the longcat video AI to recreate copyrighted characters or scenes

- Review outputs for unintended trademark appearances

- Follow fair use principles if creating transformative or educational content

Content policy: The model hasn’t been trained with content filters, so users must self-regulate.

What is the difference between LongCat video AI and Stable Video Diffusion?

| Feature | LongCat Video AI | Stable Video Diffusion |

| Max duration | 4 minutes | 4-6 seconds (base); up to 25 seconds (extended) |

| Model size | 13.6B parameters | 1.5B parameters |

| License | MIT (fully open) | CreativeML (restrictions on commercial use) |

| Primary strength | Long-form, narrative videos | Short clips with high visual quality |

| Hardware needs | 16GB+ VRAM | 12GB+ VRAM |

Choose longcat ai video generator for: Multi-minute storytelling, video continuation, commercial projects requiring full rights.

Choose Stable Video Diffusion for: Short social media clips, quick prototypes, lower hardware requirements

Can LongCat AI video generator create videos from voice prompts?

Not natively. The longcat video AI accepts text prompts only. For voice-to-video workflow:

Step 1: Use speech-to-text (Whisper, Google Speech API) to transcribe voice

Step 2: Pass the transcription to the long cat video AI as a text prompt.

Step 3: Generate a video from the text

Alternative: The community is exploring multimodal wrappers that accept audio input, but these add latency and complexity.

How does LongCat video AI ensure temporal consistency across long videos?

The longcat ai video generator uses three mechanisms:

1. Continuation pretraining: Model learns to extend existing video coherently (trained on segmented videos)

2. Block sparse attention: Maintains long-range dependencies between frames hundreds of timesteps apart

3. Temporal smoothing layers: Penalize frame-to-frame variations during generation

Result: Color palettes, lighting conditions, and subject appearance remain consistent even across 200+ second durations—a challenge for models that generate frames independently.

What are the ethical considerations when using LongCat AI video generator?

Key concerns:

1. Deepfakes and misinformation: The longcat video AI can generate realistic scenes that never occurred

- Mitigation: Watermark-generated content, disclose AI origin

2. Bias in outputs: A Model trained on internet data may reflect demographic biases

- Mitigation: Review outputs for stereotyping, diversify prompts

3. Consent and likeness: Generated people resemble real individuals

- Mitigation: Don’t use the long cat video AI to impersonate identifiable people without consent

Meituan’s recommendation: Conduct an internal ethics review before deploying in sensitive domains (journalism, education, legal contexts).

Where can I find example prompts for the LongCat AI video generator?

Official resources:

- GitHub repository includes 50+ example prompts in /examples/prompts.txt

- Meituan’s blog post showcases 10 detailed prompt templates

Community resources:

- Reddit r/AIVideoGeneration: Weekly prompt-sharing thread

- Discord server: #longcat-prompts channel (link in GitHub README)

- Hugging Face model page: Comments section has user-submitted prompts with outputs

Prompt engineering tip: Be specific about:

- Subject (who/what)

- Action (doing what)

- Setting (where)

- Lighting/mood (atmosphere)

- Camera movement (static, pan, zoom)

Example: “A chef flips a pancake in a rustic kitchen, morning sunlight through window, slow-motion, eye-level camera angle”

Resources and Next Steps

Official LongCat AI Video Generator Links:

- Project homepage: https://longcat-video.github.io/

- Model download: https://huggingface.co/meituan/LongCat-Video

- GitHub repository: https://github.com/meituan/LongCat-Video

- Technical paper: arXiv (search “LongCat-Video Meituan”)

Cloud Alternative for Non-Technical Users:

- Gaga AI Video Generator: https://gaga.art (browser-based, no setup required)

Community Support:

- GitHub Issues (bug reports, feature requests)

- Hugging Face Discussions (usage questions, showcase)

- Discord server (real-time help, link in GitHub README)

Next steps:

1. If you have GPU access: Clone the repository and follow the setup guide

2. If testing feasibility: Try cloud GPU rentals (RunPod, Vast.ai) for $0.50-1/hour

3. If non-technical: Evaluate cloud services like Gaga AI before committing to self-hosting