Key Takeaways

- InteractAvatar is an AI avatar generator that creates realistic talking avatars with controllable human-object interactions from text commands

- Uses dual-stream DiT technology to generate videos where avatars interact naturally with objects based on text, audio, or motion inputs

- Supports multi-step actions, long-form video generation (up to 29 seconds), and maintains audio-visual synchronization

- Outperforms current text-to-video AI models in generating complex interaction sequences while preserving natural lip-sync and gestures

- Enables creators to produce professional AI video content without motion capture equipment or complex 3D modeling

What Is InteractAvatar?

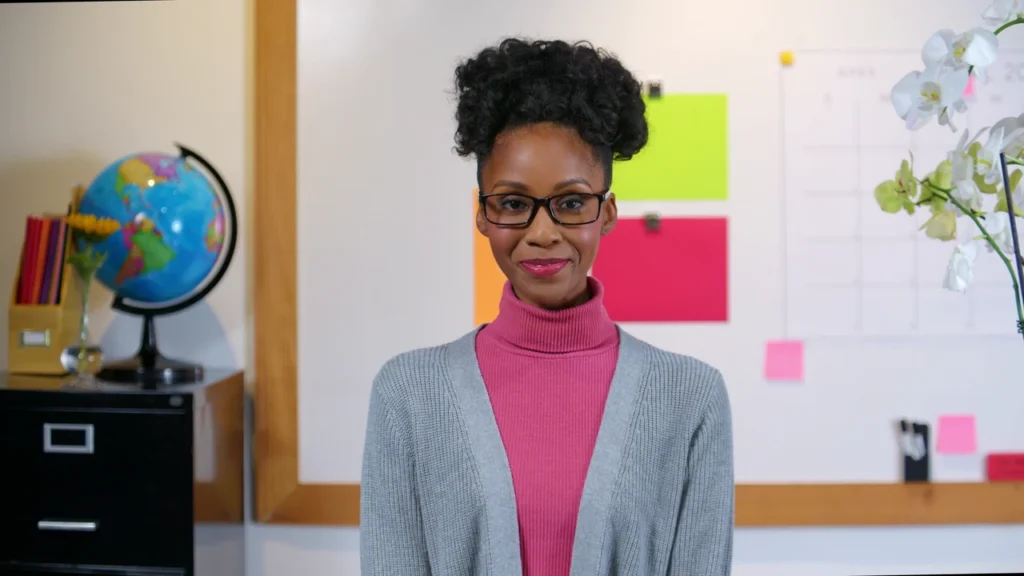

InteractAvatar is a text-driven AI avatar generator developed by Tencent that creates realistic talking avatars capable of interacting with objects in video format. Unlike traditional AI video generators that only animate faces or produce static avatars, InteractAvatar understands and executes complex commands involving physical interactions, multi-step sequences, and coordinated movements.

The technology represents a breakthrough in controllable video generation. Users input text descriptions of desired actions—such as “pick up the coffee cup with one hand, then take a sip”—and the AI generates a video where a digital avatar performs exactly those actions with realistic motion, proper object handling, and synchronized audio.

How InteractAvatar Works

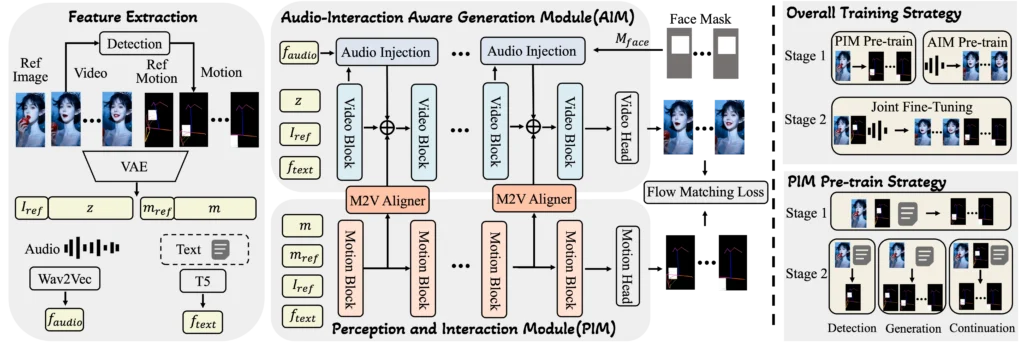

InteractAvatar employs a dual-stream Diffusion Transformer (DiT) architecture that separates perception planning from video synthesis. This approach allows the model to:

- Analyze the scene: Identify objects, their positions, and physical properties from a reference image

- Plan interactions: Determine how the avatar should move to accomplish the requested actions

- Generate motion: Create realistic human movements that respect physics and object constraints

- Synchronize audio: Match lip movements and co-speech gestures to spoken or sung content

The system accepts multimodal inputs—any combination of text instructions, audio files, or motion capture data—making it a unified framework for digital human generation.
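To make the "any combination" idea concrete, here is a minimal Python sketch of a request object for a multimodal avatar generator. The `AvatarRequest` class and its field names are hypothetical and are not InteractAvatar's actual interface. The sketch encodes only two rules, both taken from this article: a reference image is always required, and at least one driving signal (text, audio, or motion) must be present.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AvatarRequest:
    """Hypothetical request schema for a multimodal avatar generator."""
    reference_image: str                # starting frame: avatar plus props (required)
    text: Optional[str] = None          # interaction instructions
    audio_path: Optional[str] = None    # speech or song driving lip-sync
    motion_path: Optional[str] = None   # pose sequence or keyframes

    def active_modalities(self) -> list[str]:
        """Names of the driving signals that were actually supplied."""
        supplied = {"text": self.text, "audio": self.audio_path, "motion": self.motion_path}
        return [name for name, value in supplied.items() if value]

    def validate(self) -> None:
        if not self.reference_image:
            raise ValueError("a reference image is required")
        if not self.active_modalities():
            raise ValueError("supply at least one of text, audio, or motion")


# Text plus audio: an avatar that talks while handling an object.
req = AvatarRequest("scene.png", text="Pick up the coffee cup, then take a sip.", audio_path="line.wav")
req.validate()
print(req.active_modalities())  # ['text', 'audio']
```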

Core Capabilities: What Makes InteractAvatar Different

Multi-Object Scene Understanding

InteractAvatar can identify and interact with multiple objects in a single frame based solely on text commands. When presented with a reference image containing several items (a phone, headphones, and a coffee cup, for example), the model correctly interprets commands like “pick up the headphones and put them on your ears” without requiring object labels or bounding boxes.

This capability stems from the model’s Grounded Human-Object Interaction (GHOI) system, which infers:

- Object locations and dimensions from visual input

- Appropriate grip patterns for different object types

- Physical constraints (weight, fragility, size)

- Natural movement trajectories for pick-up and placement actions

Practical application: Content creators can set up a single scene with props and generate multiple interaction variations without reshooting or manual animation.

Fine-Grained Multi-Step Control

The system excels at sequential action execution. A single text prompt can contain multiple instructions that the avatar performs in correct temporal order:

- “First, gently touch the flower in front of you. Then, extend one hand to pick up the hat on the table.”

- “First, extend both hands and pick up the bag on the table. Then, stand up and hold the bag up in front of your chest.”

Each action completes fully before the next begins, demonstrating temporal reasoning that most image-to-video AI tools lack. The model maintains context across steps, ensuring smooth transitions and logical progression.
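The Python sketch below shows how a multi-step prompt decomposes into ordered actions. It splits the prompt on ordinal connectives. This is a naive regex heuristic for illustration only. It is not InteractAvatar's parser, because the model interprets instructions through learned language understanding. The connective list is an assumption. A bare "and" is deliberately not treated as a boundary, because splitting on it would break single actions such as "extend both hands and pick up the bag". The sketch only absorbs "and" when it directly precedes "then".

```python
import re

# Ordinal connectives treated as step boundaries (an illustrative assumption).
# An optional leading "and" is absorbed so "and then" does not leave a dangling "and".
_BOUNDARY = re.compile(r"(?:\band\s+)?\b(?:first|then|finally|after that)\b,?\s*", re.IGNORECASE)


def split_steps(prompt: str) -> list[str]:
    """Split a multi-step instruction into ordered action phrases."""
    steps = []
    for part in _BOUNDARY.split(prompt):
        step = part.strip().rstrip(".").strip()
        if step:  # skip the empty piece before a leading "First,"
            steps.append(step)
    return steps


prompt = ("First, gently touch the flower in front of you. "
          "Then, extend one hand to pick up the hat on the table.")
for i, step in enumerate(split_steps(prompt), start=1):
    print(i, step)
# 1 gently touch the flower in front of you
# 2 extend one hand to pick up the hat on the table
```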

Long-Form Video Generation

InteractAvatar generates coherent videos up to 29 seconds in length by processing segmented action descriptions with timestamps:

0-4s: Touch the apple with your hand

12-16s: Move the apple on the table forward

16-20s: Pick up the apple with one hand

The system maintains:

- Temporal coherence: Actions flow naturally across segments

- ID consistency: The avatar’s appearance remains stable throughout

- Spatial continuity: Object positions track correctly across time

This addresses a major limitation of many current text-to-video AI generators, which often cap output at 4-6 second clips and struggle to keep characters consistent.
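You can validate the timestamped format shown above before submitting a job. The following Python sketch parses lines of the form `0-4s: action`. It checks each range against the 29-second maximum stated above and rejects overlapping segments. This article does not document the exact syntax InteractAvatar accepts, so the regex and the validation rules are assumptions. Gaps between segments, such as 4-12s in the example, are allowed.

```python
import re
from typing import NamedTuple

MAX_SECONDS = 29.0  # maximum video length stated in this article


class Segment(NamedTuple):
    start: float
    end: float
    action: str


# "<start>-<end>s: <action>", e.g. "12-16s: Move the apple on the table forward"
_SEGMENT = re.compile(r"^\s*(\d+(?:\.\d+)?)\s*-\s*(\d+(?:\.\d+)?)\s*s:\s*(.+?)\s*$")


def parse_segments(script: str, max_seconds: float = MAX_SECONDS) -> list[Segment]:
    segments = []
    for line in script.splitlines():
        if not line.strip():
            continue  # blank lines between segments are fine
        m = _SEGMENT.match(line)
        if m is None:
            raise ValueError(f"unrecognized segment line: {line!r}")
        start, end = float(m.group(1)), float(m.group(2))
        if not 0 <= start < end <= max_seconds:
            raise ValueError(f"invalid time range in {line!r}")
        segments.append(Segment(start, end, m.group(3)))
    segments.sort(key=lambda s: s.start)
    # Gaps are allowed; overlapping segments are not.
    for prev, cur in zip(segments, segments[1:]):
        if cur.start < prev.end:
            raise ValueError(f"overlapping segments: {prev.action!r} and {cur.action!r}")
    return segments


script = """0-4s: Touch the apple with your hand
12-16s: Move the apple on the table forward
16-20s: Pick up the apple with one hand"""
for seg in parse_segments(script):
    print(f"{seg.start:g}-{seg.end:g}s -> {seg.action}")
```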

Audio-Driven Performance

Beyond interaction control, InteractAvatar functions as a high-quality talking avatar generator. The audio-driven mode produces:

- Realistic lip-sync: Mouth movements precisely match spoken phonemes

- Co-speech gestures: Natural hand movements and head nods that accompany speech

- Emotional expression: Facial micro-expressions that align with vocal tone

The model handles challenging scenarios like singing, where rapid syllable changes and sustained notes require precise audio-visual alignment.
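Audio-driven animation systems commonly pair each video frame with the slice of audio it covers. Mouth shapes can then be conditioned on the sounds playing at that moment. The sketch below computes that frame-to-audio mapping. The 25 fps and 16 kHz values in the usage example are assumed typical settings, not InteractAvatar's documented configuration.

```python
def audio_window_for_frame(frame_idx: int, fps: float, sample_rate: int) -> tuple[int, int]:
    """Return the [start, end) audio sample range that one video frame spans.

    Boundaries are computed from the absolute frame index (not by adding a
    fixed per-frame length), so rounding never accumulates drift and
    consecutive windows tile the audio with no gaps or overlaps.
    """
    start = round(frame_idx * sample_rate / fps)
    end = round((frame_idx + 1) * sample_rate / fps)
    return start, end


# Assumed settings: 25 fps video, 16 kHz mono speech audio.
start, end = audio_window_for_frame(10, fps=25, sample_rate=16_000)
print(start, end)  # 6400 7040 (640 samples = 40 ms per frame)
```

Computing both edges from the frame index matters when the sample rate is not an exact multiple of the frame rate, for example 44.1 kHz audio at 24 fps. In that case, adding a rounded per-frame length would slowly drift the lips out of sync.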

InteractAvatar vs. Traditional AI Video Generators

Comparison with Current SOTA Models

When tested against leading AI avatar and text-to-video models (HY-Avatar, HuMo, Fantasy, Wan-S2V, OmniAvatar), InteractAvatar demonstrates:

| Capability | InteractAvatar | Traditional Models |
| --- | --- | --- |
| Object interaction accuracy | Correctly executes “pick up vase with both hands and move forward” | Generate lip-sync but ignore interaction instructions |
| Multi-step adherence | Follows sequential commands in order | Often blend actions or skip steps |
| Audio preservation | Maintains high-quality lip-sync during interactions | Interaction attempts degrade audio synchronization |
| Object generalization | Works with 32+ object categories from training | Limited to specific object types or requires fine-tuning |

Key differentiator: Most audio-driven talking avatar tools excel at facial animation but treat the body as static. Conversely, text-to-video AI tools generate motion but struggle with precise object manipulation. InteractAvatar bridges this gap.

Advanced Features and Use Cases

Song-Driven Generation with Choreography

InteractAvatar handles synchronized singing and gesture performance:

- Input: Music audio file + segmented gesture commands (“0-4s: Make a heart shape. 12-16s: Clap hands”)

- Output: Avatar singing with accurate lip-sync plus choreographed movements timed to music beats

This enables creation of AI-generated music videos, virtual idol performances, or educational songs with demonstrative gestures.
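One way to time gestures to the music is to snap segment boundaries to a beat grid before writing the commands. The Python sketch below assumes a constant, known tempo (in BPM) and a first-beat offset. Real songs with tempo changes would need a beat tracker instead. This is hypothetical preprocessing on the user's side. It does not describe how InteractAvatar aligns choreography internally.

```python
def beat_grid(bpm: float, offset: float, until: float) -> list[float]:
    """Beat timestamps (seconds) from `offset` up to `until` at a constant tempo."""
    period = 60.0 / bpm
    count = int((until - offset) // period) + 1
    # Multiply instead of accumulating to avoid floating-point drift.
    return [offset + i * period for i in range(count)]


def snap(t: float, beats: list[float]) -> float:
    """Nearest beat to time t (ties go to the earlier beat)."""
    return min(beats, key=lambda b: abs(b - t))


def snap_segments(segments, bpm: float, offset: float = 0.0):
    """Snap (start, end, action) segment boundaries to the nearest beats."""
    period = 60.0 / bpm
    last_end = max(end for _, end, _ in segments)
    beats = beat_grid(bpm, offset, last_end + period)
    snapped = []
    for start, end, action in segments:
        s, e = snap(start, beats), snap(end, beats)
        if e <= s:  # keep every gesture at least one beat long
            e = s + period
        snapped.append((s, e, action))
    return snapped


print(snap_segments([(0.3, 4.2, "Make a heart shape"), (12.2, 15.9, "Clap hands")], bpm=120))
# [(0.5, 4.0, 'Make a heart shape'), (12.0, 16.0, 'Clap hands')]
```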

Multilingual Support

The model processes commands in English and Chinese, making it accessible for:

- International content teams

- Localized video production

- Cross-cultural training materials

Motion-Driven Control

For users with motion capture data, InteractAvatar accepts:

- Skeletal pose sequences

- Keyframe animations

- Blended motion-text inputs

This allows professional animators to combine hand-crafted motion with AI-generated refinement and facial animation.

Technical Implementation: Under the Hood

Grounded Human-Object Interaction (GHOI)

The GHOI system is InteractAvatar’s core innovation. It:

- Grounds objects spatially: Maps text references (“the camera on the table”) to pixel regions in the reference image

- Plans grasp trajectories: Calculates hand paths that avoid collisions and achieve stable grips

- Models physics constraints: Ensures movements respect object weight, size, and stability

- Maintains temporal consistency: Tracks object states across frames (is it being held? placed? moved?)

This explicit decoupling of perception from generation allows the model to handle novel objects and scenarios not seen during training.
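The article does not say how GHOI represents object states internally. The Python sketch below is a hand-written state machine meant only to illustrate the held / placed / moved distinction; it is not the model's mechanism. Its per-frame inputs are hypothetical: whether a hand is grasping the object, and the object's pixel position.

```python
import math
from enum import Enum


class ObjState(Enum):
    RESTING = "resting"
    MOVED = "moved"     # displaced without being held (e.g. pushed along the table)
    HELD = "held"
    PLACED = "placed"   # released after being held


def track_states(observations, tol: float = 1.0) -> list[ObjState]:
    """Label each frame from (in_hand, (x, y)) observations.

    `in_hand` means a hand is grasping the object; `tol` is the displacement
    in pixels below which the object counts as stationary.
    """
    states = []
    prev_state, prev_pos = ObjState.RESTING, None
    for in_hand, pos in observations:
        if in_hand:
            state = ObjState.HELD
        elif prev_state is ObjState.HELD:
            state = ObjState.PLACED
        elif prev_pos is not None and math.dist(pos, prev_pos) > tol:
            state = ObjState.MOVED
        elif prev_state is ObjState.MOVED:
            state = ObjState.RESTING
        else:
            state = prev_state
        states.append(state)
        prev_state, prev_pos = state, pos
    return states


# Push the apple forward, grab it, lift it, then set it down.
frames = [(False, (0, 0)), (False, (5, 0)), (False, (5, 0)),
          (True, (5, 0)), (True, (5, 10)), (False, (6, 10)), (False, (6, 10))]
print([s.value for s in track_states(frames)])
# ['resting', 'moved', 'resting', 'held', 'held', 'placed', 'placed']
```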

Dual-Stream Diffusion Transformer Architecture

The technical architecture comprises:

- Perception stream: Processes reference images and text to create action plans

- Synthesis stream: Generates video frames conditioned on action plans and audio

- Cross-attention mechanisms: Link object positions, text semantics, and motion trajectories

This design enables flexible multimodal control while maintaining high output quality.
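Cross-attention is the standard transformer mechanism that lets one token sequence read from another. The NumPy sketch below shows single-head scaled dot-product cross-attention. Stand-in synthesis-stream tokens act as queries, and stand-in perception-stream tokens supply the keys and values. The shapes and random weights are placeholders. The article does not specify InteractAvatar's dimensions, head counts, or exactly which features each stream carries.

```python
import numpy as np


def cross_attention(queries, context, w_q, w_k, w_v):
    """Single-head scaled dot-product cross-attention.

    queries: (n_q, d_model) tokens from the stream being generated
    context: (n_c, d_model) tokens the queries read from
    Returns the attended values (n_q, d_head) and the weights (n_q, n_c).
    """
    q, k, v = queries @ w_q, context @ w_k, context @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # stabilize the softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


rng = np.random.default_rng(0)
d_model, d_head = 16, 8
video_tokens = rng.standard_normal((6, d_model))  # stand-in synthesis-stream tokens
plan_tokens = rng.standard_normal((3, d_model))   # stand-in perception-stream tokens
w_q, w_k, w_v = (rng.standard_normal((d_model, d_head)) for _ in range(3))

out, attn = cross_attention(video_tokens, plan_tokens, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (6, 8) (6, 3)
```

Each row of `attn` shows how strongly one video token draws on each plan token. This is the kind of link between object positions, text semantics, and motion that the cross-attention bullet above describes.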

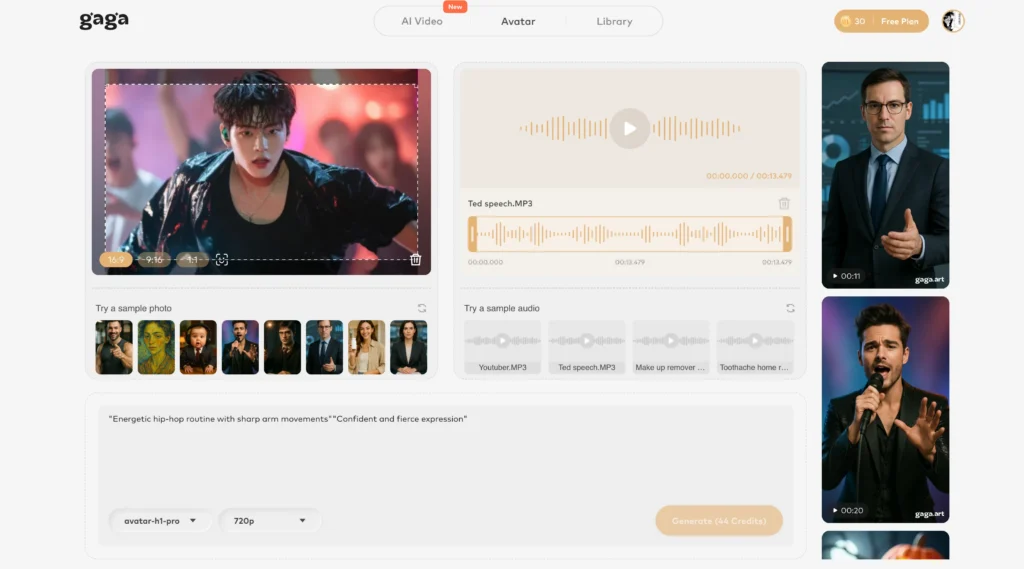

Bonus: Gaga AI Video Generator Features

For users seeking additional AI video creation tools, the Gaga AI video generator offers complementary capabilities:

Image to Video AI

Transform static images into dynamic video content with camera movements, subtle animations, and scene transitions. Ideal for bringing photos to life or creating establishing shots for video projects.

Video and Audio Infusion

Combine multiple video sources with custom audio tracks, enabling:

- Background music synchronization

- Voiceover integration

- Sound effect layering

AI Avatar Creation

Generate custom digital avatars from photos with style transfer, age progression, and appearance customization options.

AI Voice Cloning

Create synthetic voices that match specific speakers for:

- Consistent narration across video series

- Multilingual dubbing with voice preservation

- Text-to-speech with personality

Advanced TTS (Text-to-Speech)

Convert written scripts to natural-sounding speech with:

- Emotion control (happy, serious, empathetic)

- Pacing adjustments

- Multiple voice profiles

Integration workflow: Use Gaga AI for initial avatar design and voice creation, then import into InteractAvatar for advanced interaction and gesture control.

Frequently Asked Questions

What is the difference between InteractAvatar and other AI avatar generators?

InteractAvatar uniquely combines talking avatar generation with controllable object interaction. While tools like D-ID or Synthesia excel at creating talking-head videos, and Runway or Pika focus on general text-to-video generation, InteractAvatar specializes in avatars that physically interact with their environment based on text commands. It is built to handle a prompt like “pick up the coffee cup and take a sip” with realistic hand-object contact while keeping the lip-sync intact, a combination the other tool categories do not target.

Can InteractAvatar create videos from just text without a reference image?

No. InteractAvatar requires a reference image showing the avatar and any objects for interaction. This image serves as the starting point and provides visual context for object positions and avatar appearance. However, users can combine it with other AI image generators to create the reference frame first.

How accurate is the lip-sync in InteractAvatar compared to dedicated talking head tools?

InteractAvatar maintains professional-grade lip-sync quality comparable to specialized tools like Wav2Lip or other audio-driven models. The key advantage is that it preserves this accuracy even while generating complex body movements and object interactions. Most talking-head tools cannot do this, because they typically keep the body static.

What file formats does InteractAvatar accept for audio input?

Based on typical AI video generator standards, InteractAvatar likely accepts common audio formats including MP3, WAV, and AAC. For best results, use high-quality audio files (at least 44.1 kHz sample rate) with clear speech or music.
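Before uploading, you can check a WAV file against the 44.1 kHz recommendation above using Python's standard-library `wave` module. This covers uncompressed WAV only. MP3 and AAC files need a separate decoder such as FFmpeg. The threshold mirrors this article's advice, not a documented InteractAvatar requirement.

```python
import wave

RECOMMENDED_RATE = 44_100  # this article's suggestion, not a documented limit


def inspect_wav(path: str, min_rate: int = RECOMMENDED_RATE) -> dict:
    """Read basic properties of an uncompressed WAV file."""
    with wave.open(path, "rb") as wav:
        rate = wav.getframerate()
        info = {
            "sample_rate": rate,
            "channels": wav.getnchannels(),
            "bit_depth": 8 * wav.getsampwidth(),
            "duration_s": wav.getnframes() / rate,
        }
    info["meets_recommendation"] = rate >= min_rate
    return info
```

For a hypothetical `voiceover.wav`, `inspect_wav("voiceover.wav")["meets_recommendation"]` reports whether the file reaches the suggested sample rate. The other fields help you spot mono/stereo or bit-depth surprises before generation.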

Can I use InteractAvatar for commercial projects like advertisements or product demos?

Usage rights depend on Tencent’s licensing terms for InteractAvatar. The technology is currently showcased as a research project with a “Try It Now” demo available. For commercial applications, users should contact Tencent HY directly regarding licensing, API access, and usage restrictions.

How does InteractAvatar handle multiple actions in one command?

InteractAvatar processes sequential actions in temporal order using natural language understanding. Connective words such as “first,” “then,” and “and” signal action boundaries. The model completes each action fully before starting the next, maintaining logical flow. For precise timing, use timestamp notation (0-4s: action one, 8-12s: action two).

What objects can InteractAvatar recognize and interact with?

The model has demonstrated capability with 32+ common object categories including: phones, cups, bags, cameras, hats, flowers, basketballs, headphones, books, cosmetics, toys, and kitchen items. It shows strong generalization to objects within these categories (different cup styles, various phone models) without requiring specific training on each variant.

Does InteractAvatar work with real human videos or only AI-generated avatars?

InteractAvatar is designed for AI-generated digital humans, not real human video editing. The reference image should be an illustrated or rendered avatar rather than a photograph of a real person. For editing real human videos with interaction changes, users would need different tools like video inpainting or motion transfer models.

How long does it take to generate a video with InteractAvatar?

Generation time is not publicly specified but likely ranges from 30 seconds to several minutes depending on video length, complexity, and server load. Simple 4-second single-action videos process faster than 20-second multi-step sequences with audio. As a research-stage model, it’s not yet optimized for real-time generation.

Can InteractAvatar generate videos in different languages besides English and Chinese?

Currently documented support is for English and Chinese text commands. Audio input can be in any language for lip-sync generation, but text-driven action control is limited to these two languages. Multilingual expansion may come in future versions as the technology develops.

In the End

InteractAvatar represents a significant advancement in AI avatar technology by solving the interaction problem that has limited previous talking avatar and text-to-video AI tools. Its ability to understand object contexts, execute multi-step commands, and maintain audio-visual synchronization opens new possibilities for automated video content creation across education, entertainment, and commercial applications.

While currently in research demonstration phase, the technology points toward a future where content creators can produce professional avatar-hosted videos through text descriptions alone—no animation expertise, motion capture, or complex 3D software required.

For creators ready to explore AI-driven video production today, InteractAvatar’s demo offers a glimpse of controllable avatar generation, while complementary tools like Gaga AI provide immediate access to image-to-video conversion, voice cloning, and TTS capabilities that can integrate into comprehensive video workflows.