Key Takeaways

- GLM-5 is a 744B-parameter open-source AI model (40B active) that achieves state-of-the-art results among open-source models in coding, reasoning, and agentic tasks

- Performance rivals Claude Opus 4.5 on benchmarks like SWE-bench Verified (77.8), Terminal-Bench 2.0 (56.2), and BrowseComp (75.9 with context management)

- Pricing is roughly one-seventh of Claude’s: approximately $0.71/$3.57 per million tokens (input/output) versus $5/$25

- Open-source under MIT License with weights available on HuggingFace and ModelScope

- Integrated with popular coding tools including Claude Code, OpenCode, and other agentic frameworks

- DeepSeek Sparse Attention reduces deployment costs while maintaining 200K context window capacity
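
As a rough check on the pricing takeaway above, the sketch below computes the cost of a single request from the per-million-token prices quoted in this article. The workload (200,000 input tokens and 20,000 output tokens) is a made-up illustration, not a measurement, and `request_cost` is a helper name invented for this example.

```python
# Prices in USD per million tokens (input, output), as quoted in this article.
GLM5_PRICE = (0.71, 3.57)
CLAUDE_PRICE = (5.00, 25.00)


def request_cost(input_tokens, output_tokens, price):
    """Return the USD cost of one request at the given (input, output) price."""
    in_price, out_price = price
    return (input_tokens / 1_000_000) * in_price + (output_tokens / 1_000_000) * out_price


# Hypothetical workload: 200K input tokens and 20K output tokens.
glm5 = request_cost(200_000, 20_000, GLM5_PRICE)
claude = request_cost(200_000, 20_000, CLAUDE_PRICE)
print(f"GLM-5: ${glm5:.4f}  Claude: ${claude:.4f}  ratio: {claude / glm5:.2f}x")
```

The ratio stays close to 7 for any input/output mix, because the input and output prices differ by nearly the same factor.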

What is GLM-5?

GLM-5 is Zhipu AI’s flagship foundation model released in February 2026, designed specifically for complex systems engineering and long-horizon agentic tasks. The model represents a significant leap from its predecessor GLM-4.7, scaling from 355B parameters (32B active) to 744B parameters (40B active) and increasing pre-training data from 23T to 28.5T tokens.

The model achieves best-in-class performance among open-source models on reasoning, coding, and agentic benchmarks, narrowing the gap with frontier proprietary models like Claude Opus 4.5 and GPT-5.2.

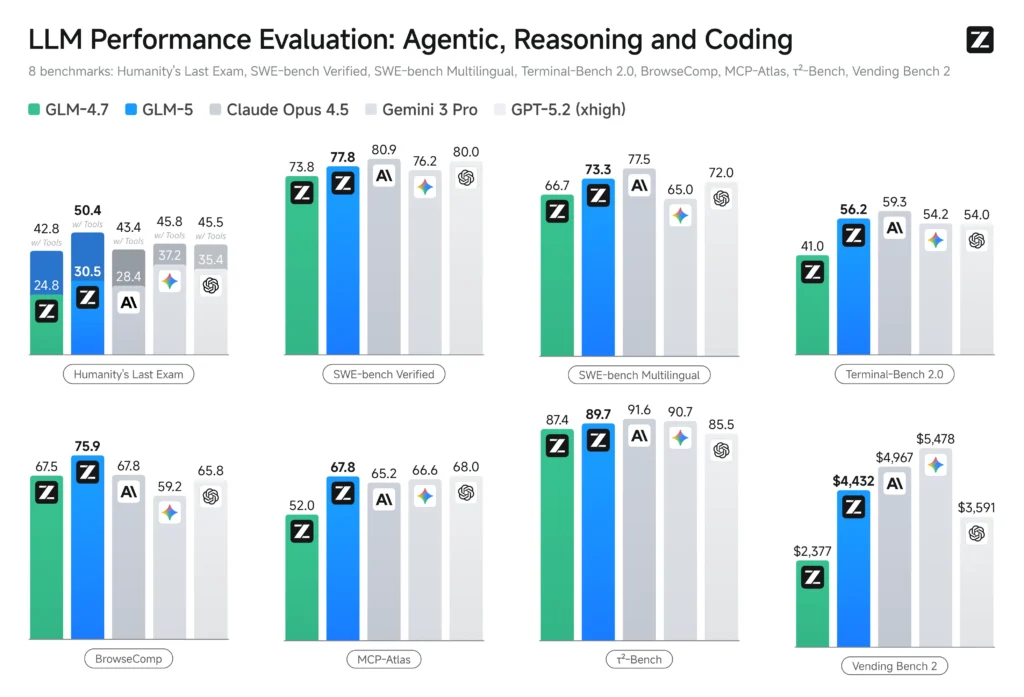

How Does GLM-5 Compare to Other AI Models?

Performance Benchmarks

GLM-5 delivers competitive results across industry-standard benchmarks:

Coding Capabilities:

- SWE-bench Verified: 77.8 (vs Claude Opus 4.5: 80.9, GPT-5.2: 80.0)

- SWE-bench Multilingual: 73.3 (vs Claude Opus 4.5: 77.5)

- Terminal-Bench 2.0: 56.2 (vs Claude Opus 4.5: 59.3, GPT-5.2: 54.0)

Agentic Performance:

- BrowseComp: 62.0 basic, 75.9 with context management (surpassing GPT-5.2’s 65.8)

- Vending Bench 2: $4,432 final balance, ranking #1 among open-source models

- MCP-Atlas Public Set: 67.8 (ahead of Claude Opus 4.5’s 65.2)

Reasoning Tasks:

- Humanity’s Last Exam: 30.5 (with tools: 50.4)

- AIME 2026: 92.7

- GPQA-Diamond: 86.0

Pony Alpha Connection

The mysterious “Pony Alpha” model that appeared on OpenRouter in early February 2026 has been confirmed to be GLM-5. This anonymous model garnered significant attention for its exceptional coding performance before its official reveal, with users speculating it was either DeepSeek V4 or GLM-5.

What Makes GLM-5 Different?

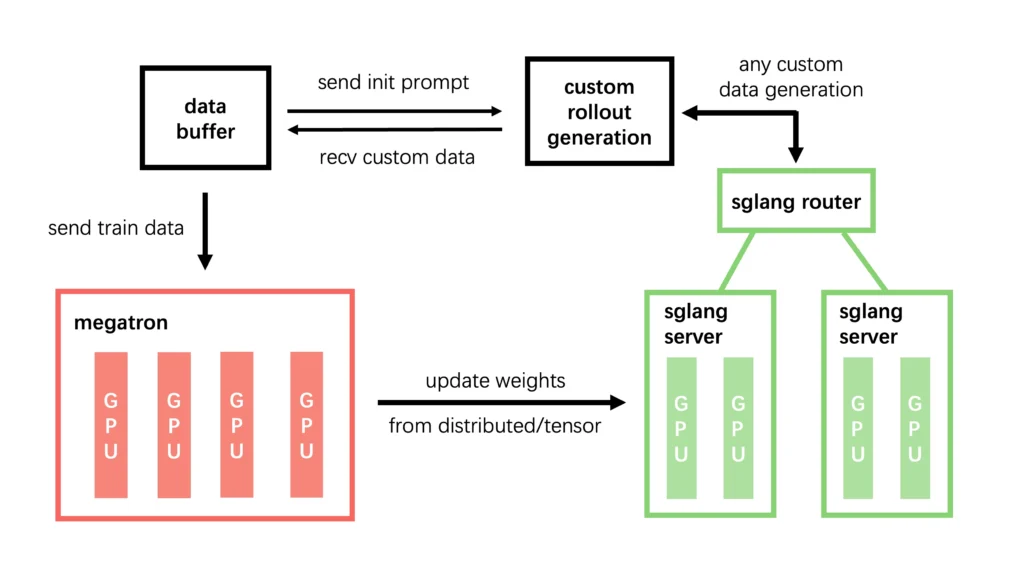

1. Asynchronous Reinforcement Learning (Slime Framework)

GLM-5 introduces a novel “Slime” infrastructure that substantially improves RL training throughput and efficiency. Traditional reinforcement learning for large language models faces significant scalability challenges due to the computational overhead of policy optimization and reward modeling.

The Slime framework addresses these limitations through:

Asynchronous Training Architecture: By decoupling data generation from policy updates, Slime achieves up to 3x higher throughput compared to conventional synchronous RL methods. This allows GLM-5 to iterate through more diverse training scenarios without bottlenecks.

Fine-Grained Iteration Cycles: Unlike models that undergo monolithic RL phases, GLM-5 benefits from continuous micro-adjustments. This approach prevents reward hacking and maintains model stability across extended training runs.

Agent-Centric Reward Modeling: The framework specifically optimizes for long-horizon agentic behaviors, rewarding task completion consistency over superficial metric optimization. This explains GLM-5’s exceptional performance on benchmarks like Vending Bench 2.

This breakthrough enables more fine-grained post-training iterations, bridging the gap between base model competence and production-ready excellence in ways that benefit real-world coding and problem-solving scenarios.
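
The decoupling of data generation from policy updates can be illustrated with a toy producer/consumer loop. This is only a sketch of the general asynchronous pattern, not Slime’s actual API: `rollout_worker`, `trainer`, `run`, and the constant reward are hypothetical names and values invented here.

```python
import queue
import threading


def rollout_worker(worker_id, policy_version, out_queue, num_rollouts):
    """Generate toy trajectories without waiting for the trainer."""
    for step in range(num_rollouts):
        out_queue.put({
            "worker": worker_id,
            "step": step,
            # Record which policy version produced the sample, so a real
            # trainer could correct for stale (off-policy) data.
            "policy_version": policy_version["v"],
            "reward": 1.0,  # placeholder reward
        })


def trainer(in_queue, policy_version, total, batch_size):
    """Consume rollouts as they arrive; bump the policy version once per batch."""
    seen = 0
    batch = []
    while seen < total:
        batch.append(in_queue.get())
        seen += 1
        if len(batch) == batch_size:
            policy_version["v"] += 1  # stand-in for a gradient update
            batch.clear()
    return seen


def run(num_workers=4, rollouts_per_worker=8, batch_size=4):
    """Start the workers, train on their output, and return (samples, updates)."""
    q = queue.Queue()
    version = {"v": 0}
    workers = [
        threading.Thread(target=rollout_worker,
                         args=(w, version, q, rollouts_per_worker))
        for w in range(num_workers)
    ]
    for t in workers:
        t.start()
    consumed = trainer(q, version, num_workers * rollouts_per_worker, batch_size)
    for t in workers:
        t.join()
    return consumed, version["v"]
```

Calling `run()` consumes 32 samples and performs 8 toy updates. The point of the pattern is that workers never block on the trainer, which is where the throughput gain over a synchronous generate-then-train loop would come from.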

2. DeepSeek Sparse Attention Integration

For the first time in the GLM series, GLM-5 integrates DeepSeek Sparse Attention (DSA), representing a fundamental shift in how the model processes long contexts. Traditional transformer attention scales quadratically: doubling the context length roughly quadruples the attention cost. DSA reduces this by having each token attend to a selected subset of the context rather than to every earlier token.
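
The scaling difference can be made concrete with a back-of-the-envelope count of query-key score computations. This is a simplified sketch: the `top_k=2048` budget is an illustrative assumption rather than a confirmed GLM-5 setting, and the count ignores the cost of choosing which tokens to attend to.

```python
def dense_pairs(context_len):
    """Score computations for full attention: every token attends to every token."""
    return context_len * context_len


def sparse_pairs(context_len, top_k=2048):
    """Score computations when each query attends to at most top_k selected tokens."""
    return context_len * min(context_len, top_k)


for n in (50_000, 100_000, 200_000):
    ratio = dense_pairs(n) // sparse_pairs(n)
    print(f"{n:>7} tokens  dense={dense_pairs(n):.2e}  sparse={sparse_pairs(n):.2e}  ~{ratio}x fewer")
```

Under these assumptions, doubling the context quadruples the dense count but only doubles the sparse one, which is why sparse attention matters most at the long end of the context window.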

3. Complex Systems Engineering Focus

Unlike general-purpose chat models optimized for conversational coherence, GLM-5 is purpose-built for deterministic, multi-step engineering workflows:

Multi-Step Software Development: GLM-5 doesn’t just write functions—it architects entire systems. When tasked with building a web application, it:

- Analyzes requirements and proposes technology stacks

- Scaffolds project structure with appropriate separation of concerns

- Implements frontend, backend, and database layers cohesively

- Integrates error handling, logging, and deployment configurations

- Produces production-ready code, not proof-of-concept snippets

Long-Horizon Agentic Task Execution: The model maintains goal coherence across hundreds of sequential actions. In the Vending Bench 2 evaluation (simulating a year-long business operation), GLM-5 demonstrated:

- Resource allocation over 365 simulated days

- Dynamic strategy adjustment based on market conditions

- Risk management and contingency planning

- Consistent profitability without catastrophic failures

This capability translates directly to real-world scenarios like sustained debugging sessions, iterative refactoring projects, or complex data pipeline construction.

Structured Document Generation: GLM-5 treats documents as first-class engineering artifacts. When generating .docx, .pdf, or .xlsx files, it:

- Applies professional formatting standards automatically

- Structures information hierarchically (headers, sections, subsections)

- Embeds tables, charts, and images with proper alignment

- Ensures consistency across multi-page documents

- Outputs immediately usable deliverables, not markdown approximations

The Z.ai Agent Mode leverages these capabilities through built-in skills, allowing users to request “Create a financial report from this dataset” and receive a polished Excel spreadsheet with formulas, pivot tables, and visualizations—no manual formatting required.

How to Use GLM-5

Option 1: Cloud API Access

Option 2: GLM Coding Plan

Subscribe to GLM Coding Plan for integrated access with popular coding agents:

- Claude Code

- OpenCode

- Cline, Droid, Roo Code, and more

Setup with Claude Code:

# Install Coding Tool Helper

npx @z_ai/coding-helper

# Follow prompts to configure GLM-5

# Update model name to "glm-5" in ~/.claude/settings.json

Subscription Tiers:

- Max Plan: Immediate GLM-5 access

- Other tiers: Progressive rollout

- Note: GLM-5 requests consume more quota than GLM-4.7

Option 3: Local Deployment

Download Model:

- HuggingFace: zai-org/GLM-5-FP8

- ModelScope: Available in BF16 and FP8 precision

Deployment with vLLM:

vllm serve zai-org/GLM-5-FP8 \
--tensor-parallel-size 8 \
--gpu-memory-utilization 0.85 \
--speculative-config.method mtp \
--served-model-name glm-5-fp8
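
Once the server is running, vLLM exposes an OpenAI-compatible HTTP API. The sketch below builds and sends a chat request using only the Python standard library. It assumes vLLM’s default port 8000 on localhost and the `glm-5-fp8` served model name from the command above; `build_chat_request` and `ask` are helper names made up for this example.

```python
import json
import urllib.request


def build_chat_request(prompt, base_url="http://localhost:8000/v1", model="glm-5-fp8"):
    """Build a POST request for the OpenAI-compatible chat completions route."""
    payload = {
        "model": model,  # must match --served-model-name
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the request and return the reply text (needs a running server)."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Example, with the server from the command above running:
#   print(ask("Summarize what tensor parallelism does in one sentence."))
```

If the server runs on another host or port, pass a different `base_url` to `build_chat_request`.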

Deployment with SGLang:

python3 -m sglang.launch_server \
--model-path zai-org/GLM-5-FP8 \
--tp-size 8 \
--tool-call-parser glm47 \
--reasoning-parser glm45

Hardware Support:

- NVIDIA GPUs (recommended: 8x for FP8 version)

- Huawei Ascend, Moore Threads, Cambricon, Kunlun Chip, MetaX, Enflame, Hygon

Real-World GLM-5 Use Cases

1. Full-Stack Application Development

GLM-5 can autonomously build complete applications from natural language descriptions. Here’s a detailed breakdown of a real production case:

Project: Cross-Platform Content Distribution Chrome Extension

Initial Prompt: “Develop a Chrome extension for cross-platform content distribution that extracts articles from WeChat public accounts and syncs to Xiaohongshu, Zhihu, and other platforms”

GLM-5 Development Process:

Phase 1 – Requirements Clarification (Turn 1-2): The model began by asking intelligent questions about:

- Extraction method preferences (manual input vs. automated scraping)

- Target platform priorities (which platforms to support first)

- Content format preservation (images, formatting, embedded media)

- User interaction model (popup vs. full-page interface)

This mirrors how senior developers approach vague requirements—seeking clarity before writing code.

Phase 2 – Architecture Design (Turn 3-4): GLM-5 proposed a comprehensive technical architecture:

- Manifest V3 Chrome Extension structure with proper permissions

- Content script injection for WeChat article extraction

- Background service worker for cross-platform API coordination

- Rich text editor integration using Quill.js for content editing

- Platform-specific adapters to handle different publishing APIs

Critically, it presented multiple implementation strategies, explaining trade-offs between complexity and functionality. This allowed for informed decision-making rather than arbitrary defaults.

Phase 3 – Implementation (Turn 5-15): The model generated:

- HTML/CSS interface with responsive design and user-friendly controls

- JavaScript logic for DOM parsing and content extraction

- API integration code for each target platform (Xiaohongshu, Zhihu, etc.)

- Error handling for network failures and invalid content

- State management to track distribution status

Phase 4 – Debugging and Refinement (Turn 16-20): When initial tests revealed content extraction issues (incomplete text, missing images), GLM-5:

- Diagnosed the problem as CORS restrictions and dynamic content loading

- Proposed alternative extraction strategies using content scripts

- Implemented retry logic with exponential backoff

- Added user feedback mechanisms (loading indicators, success/error messages)
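
The retry logic mentioned in this phase follows a common pattern. The extension itself is JavaScript and its actual code is not shown in this article, so the Python sketch below only illustrates the general technique; the function name, parameters, and default delays are assumptions.

```python
import random
import time


def retry_with_backoff(operation, max_attempts=5, base_delay=0.5,
                       max_delay=8.0, sleep=time.sleep):
    """Call operation() until it succeeds or the attempts run out.

    The wait doubles after each failure (base_delay, 2 * base_delay, ...),
    is capped at max_delay, and gets up to 10% random jitter so that many
    clients do not retry in lockstep.
    """
    for attempt in range(max_attempts):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay + random.uniform(0, delay * 0.1))
```

The `sleep` parameter is injectable so the function can be exercised without real waiting. In production code, it would usually be better to catch only the specific network errors that are worth retrying.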

Final Deliverable: A production-ready Chrome extension with 2,500+ lines of code across 8 files, deployed and functional within a 2-hour development session. Token consumption: approximately 130,000 tokens—remarkably efficient for the scope.

Key Insight: GLM-5 didn’t just write code; it engaged in software engineering. The iterative dialogue, architectural thinking, and systematic debugging mirror human developer workflows.

2. Intelligent Debugging Assistant

Traditional AI models struggle with complex, multi-file bugs requiring deep context understanding. GLM-5 excels here through persistent reasoning and contextual analysis.

Case Study: OCR Recognition Bug in Game Automation Tool

Scenario: Building a card-counting assistant for a poker game running in a PC emulator. The tool needed to:

- Capture a designated screen region

- Recognize playing cards via OCR

- Update card counts in real-time

Initial Bug: OCR consistently failed to recognize cards, despite correct region capture.

GLM-5 Debugging Process:

Diagnostic Phase: Without being told the root cause, GLM-5:

- Added debug logging to visualize captured screenshots

- Implemented step-by-step verification (capture → preprocessing → recognition)

- Isolated the failure point: OCR was receiving correct images but returning empty results

Analysis Phase: Recognizing the OCR limitation, GLM-5:

- Proposed template matching as an alternative approach

- Explained why grayscale conversion and binary thresholding would improve accuracy

- Recommended creating card templates for pattern matching instead of generic OCR

Implementation Phase: The model autonomously:

- Generated Python code for image preprocessing (cv2.cvtColor, cv2.threshold)

- Created a template matching algorithm using normalized cross-correlation

- Implemented multi-template matching to handle perspective variations

- Added confidence scoring to filter false positives
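
The matching step can be sketched without any dependencies. The tool’s actual code is not shown in this article, so the version below is a pure-Python illustration of normalized cross-correlation with a confidence threshold, operating on small grayscale grids (lists of lists). The names `ncc` and `best_match` and the 0.8 threshold are assumptions. With OpenCV, the same idea corresponds to `cv2.matchTemplate` with `cv2.TM_CCOEFF_NORMED`.

```python
from math import sqrt


def ncc(patch, template):
    """Normalized cross-correlation of two equal-sized grayscale grids, in [-1, 1]."""
    a = [v for row in patch for v in row]
    b = [v for row in template for v in row]
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = sqrt(sum((x - mean_a) ** 2 for x in a) * sum((y - mean_b) ** 2 for y in b))
    return num / den if den else 0.0  # flat regions carry no signal


def best_match(image, templates, threshold=0.8):
    """Slide every template over the image; return (label, score, (row, col)).

    Returns None when no position scores above the confidence threshold,
    which filters out false positives.
    """
    best = None
    best_score = threshold
    for label, tpl in templates.items():
        th, tw = len(tpl), len(tpl[0])
        for r in range(len(image) - th + 1):
            for c in range(len(image[0]) - tw + 1):
                patch = [row[c:c + tw] for row in image[r:r + th]]
                score = ncc(patch, tpl)
                if score > best_score:
                    best, best_score = (label, score, (r, c)), score
    return best
```

Keeping several templates per card in the `templates` dictionary is one simple way to approximate the multi-template matching described above.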

Validation: To check that the solution wasn’t suboptimal, the same problem was presented to Claude Opus 4.6 and GPT-5.3-codex. Both proposed the same template-matching approach, which supports GLM-5’s choice.

Result: 95%+ recognition accuracy on standard cards, with sub-50ms latency per frame. The only limitation (King/Queen confusion due to identical grayscale patterns) was acceptable for the use case.

Why This Matters: This wasn’t trial-and-error coding—it was methodical engineering. GLM-5 diagnosed, proposed, implemented, and validated a solution autonomously, demonstrating genuine problem-solving ability.

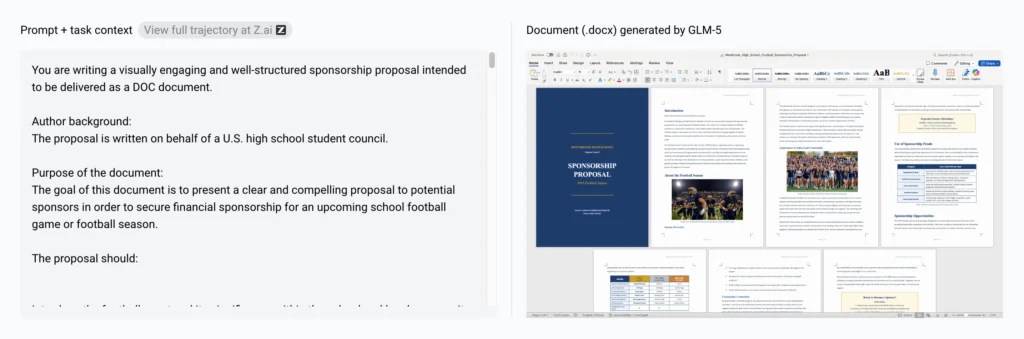

3. Document Generation as Code

GLM-5’s document generation capabilities transform abstract requests into polished, production-ready files—a game-changer for business users.

Example: High School Football Sponsorship Proposal

Input Prompt: “Create a visually engaging sponsorship proposal for a high school football team, targeting local businesses, delivered as a .docx file with images, tables, and professional formatting”

GLM-5 Output:

- 12-page Word document with custom styling and school branding

- Header sections: Cover page, introduction, event details, sponsorship tiers

- Embedded tables: Comparing Gold/Silver/Bronze sponsorship levels with benefits

- Image placeholders: Captioned with “Image: School football team during home game”

- Formatting: Consistent fonts, color scheme aligned with school colors, proper margins

- Call-to-action: Contact information and next steps for sponsors

Process Flow:

- GLM-5 used Python’s python-docx library to programmatically construct the document

- Applied styles (heading levels, font families, colors) consistent with professional standards

- Inserted tables with merged cells and formatted borders

- Added placeholder images with center alignment and captions

- Generated a ready-to-send .docx file in a single execution

Business Impact: Tasks that previously required Microsoft Word expertise and 2+ hours of manual formatting now complete in under 5 minutes. Non-technical users can request deliverables in natural language and receive publication-ready documents.

Other Document Examples:

- Excel financial models with formulas, pivot tables, and conditional formatting

- PDF reports with vector graphics, charts, and multi-column layouts

- Lesson plans with structured activities, timing, and resource lists

4. Custom Tool Development

GLM-5 bridges the gap between “I wish this tool existed” and “Here’s a working implementation.”

Case: YouTube Video Downloader Skill

Prompt: “Package the yt-dlp tool into a reusable skill where I provide a video link and you download it”

GLM-5 Response:

- Analyzed the yt-dlp GitHub repository to understand CLI options

- Created a Python wrapper script with argument parsing

- Implemented error handling for invalid URLs, network failures, and geoblocked content

- Proactively identified authentication requirements: “For YouTube videos, you’ll need to provide cookies if the content is restricted”

- Generated usage documentation and example commands
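
A wrapper along these lines might look like the sketch below. It is not the code GLM-5 produced (which the article does not show). It assumes `yt-dlp` is installed and on the PATH, and uses its `-o` output-template and `--cookies` options. The function names and defaults are invented for illustration.

```python
import argparse
import subprocess
from urllib.parse import urlparse


def build_command(url, output_dir=".", cookies=None):
    """Validate the URL and return the yt-dlp argument list."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"not a valid http(s) URL: {url!r}")
    cmd = ["yt-dlp", "-o", f"{output_dir}/%(title)s.%(ext)s"]
    if cookies:
        # Restricted videos may need a cookies file exported from a browser.
        cmd += ["--cookies", cookies]
    cmd.append(url)
    return cmd


def download(url, output_dir=".", cookies=None):
    """Run yt-dlp and return its exit code (non-zero means the download failed)."""
    try:
        return subprocess.run(build_command(url, output_dir, cookies), check=False).returncode
    except FileNotFoundError:
        raise RuntimeError("yt-dlp is not installed or not on PATH") from None


def main(argv=None):
    """Command-line entry point: parse arguments and start the download."""
    parser = argparse.ArgumentParser(description="Download a video with yt-dlp")
    parser.add_argument("url")
    parser.add_argument("--output-dir", default=".")
    parser.add_argument("--cookies", default=None, help="path to a cookies file")
    args = parser.parse_args(argv)
    return download(args.url, args.output_dir, args.cookies)
```

Passing the command as an argument list rather than a shell string avoids quoting problems with URLs that contain `&` or `?`.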

Contrast with Claude Opus 4.5: When given the same task, Opus 4.5 required 6-7 rounds of debugging, repeatedly claiming the skill was functional when it wasn’t. It never mentioned cookie requirements, leading to frustrating trial-and-error.

GLM-5’s precision—identifying prerequisites upfront—demonstrates superior task understanding.

Other Tool Examples:

- QQ Farm clone: Fully functional browser game with crop growth, harvesting, and localStorage persistence (built in <2 hours)

- Web scraper for e-commerce price monitoring with proxy rotation

- Markdown to HTML converter with custom CSS themes

- Database migration scripts with transaction safety and rollback logic

BONUS: Gaga AI Video Generator Integration

While GLM-5 excels at text and code, you can enhance your AI workflow by combining it with Gaga AI, a cutting-edge video generation platform that offers:

Key Features:

- Image-to-Video AI: Transform static images into dynamic video content

- Video and Audio Infusion: Seamlessly merge custom audio tracks with generated videos

- AI Avatar Creation: Generate realistic talking avatars for presentations

- AI Voice Clone: Clone voices with high fidelity for personalized content

- Text-to-Speech (TTS): Convert written content into natural-sounding narration

Workflow Example:

- Use GLM-5 to generate a marketing script

- Feed the script to Gaga AI’s TTS engine

- Combine with AI avatar for a complete video presentation

- Export and distribute across platforms

This combination enables end-to-end content creation from concept to polished video deliverable.

Common Questions About GLM-5

Is GLM-5 truly open-source?

Yes. GLM-5 is released under the MIT License, with model weights publicly available on HuggingFace and ModelScope. You can download, modify, and deploy the model locally without restrictions.

What is the context window size for GLM-5?

GLM-5 supports a 200K token context window for input and 128K tokens for output, matching GLM-4.7’s capabilities while improving efficiency through DeepSeek Sparse Attention.

Can GLM-5 replace GPT-5 or Claude for coding?

For most development tasks, GLM-5 delivers results comparable to Claude Opus 4.5 and approaches GPT-5.2 performance. However, GPT-5.3-codex still leads on extremely complex debugging scenarios. For many users, especially those without ChatGPT subscriptions, GLM-5 is among the strongest coding options available.

How does GLM-5 handle long-horizon agent tasks?

GLM-5 excels at tasks requiring sustained goal alignment over multiple steps. On Vending Bench 2 (a year-long business simulation), GLM-5 achieved a $4,432 final balance, demonstrating superior resource management and long-term planning compared to competing open-source models.

What languages does GLM-5 support?

GLM-5 supports multilingual coding and conversation, with strong performance on Chinese and English tasks. The SWE-bench Multilingual score of 73.3 indicates robust support for code repositories in multiple languages.

Does GLM-5 support function calling?

Yes. GLM-5 includes powerful tool invocation capabilities, supporting function calling, MCP (Model Context Protocol) integration, and structured output formats like JSON.
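
On the application side, function calling usually means routing the model’s structured request to real code. The sketch below assumes the widely used convention in which a tool call carries a function name and a JSON-encoded argument string. That message shape, the `get_weather` tool, and the schema are all illustrative assumptions, so check the Z.ai API documentation for the exact format.

```python
import json


def get_weather(city):
    """Hypothetical tool; a real one would call a weather service."""
    return {"city": city, "forecast": "sunny"}


# Registry mapping the tool names the model may request to Python callables.
TOOLS = {"get_weather": get_weather}

# JSON Schema description of the tool, sent to the model with the request.
TOOL_SCHEMAS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the forecast for a city",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]


def dispatch(tool_call):
    """Run the requested tool and return its result as a JSON string."""
    name = tool_call["name"]
    if name not in TOOLS:
        raise KeyError(f"model requested unknown tool: {name}")
    arguments = json.loads(tool_call["arguments"])  # arguments arrive as JSON text
    return json.dumps(TOOLS[name](**arguments))
```

For example, `dispatch({"name": "get_weather", "arguments": '{"city": "Beijing"}'})` returns a JSON string that would be sent back to the model as the tool result.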

How much does it cost to run GLM-5 locally?

Local deployment requires significant GPU resources (recommended: 8x GPUs for the FP8 version). However, GLM-5 also supports non-NVIDIA accelerators like Huawei Ascend and Moore Threads, potentially reducing hardware costs. Cloud API access at $0.71/$3.57 per million tokens is often more economical for most users.

Can I use GLM-5 with OpenClaw?

Yes. GLM-5 supports OpenClaw, a framework that transforms the model into a personal assistant capable of operating across applications and devices. OpenClaw access is included in the GLM Coding Plan.

What is the “Slime” framework mentioned in GLM-5?

Slime is GLM-5’s novel asynchronous reinforcement learning infrastructure that dramatically improves training efficiency. It enables the model to continuously learn from long-range interactions, bridging the gap between pre-trained competence and production-ready performance.

How does GLM-5 compare on token efficiency?

Users report GLM-5 is exceptionally token-efficient, generating concise, actionable code without excessive verbosity. This contrasts with models like Claude Opus 4.6, which can consume significantly more tokens for equivalent tasks.

Conclusion

GLM-5 represents a watershed moment in open-source AI development. By coming within a few points of Claude Opus 4.5 on critical benchmarks while maintaining a roughly 7x cost advantage and full open-source availability, Zhipu AI has democratized access to frontier-class coding and agentic capabilities.

Whether you’re a developer seeking to integrate AI into your workflow through Claude Code, a researcher needing local deployment flexibility, or an enterprise balancing performance with budget constraints, GLM-5 offers a compelling alternative to proprietary models.

Get started with GLM-5:

- Try it free at Z.ai

- Access API at api.z.ai

- Download weights from HuggingFace

- Subscribe to GLM Coding Plan

The era of Vibe Coding—where natural language becomes the primary interface for software development—has arrived. And with GLM-5, it’s more accessible than ever before.