Key Takeaways

- LingBot-World is a free, open-source world model that generates interactive, real-time environments from user inputs

- It rivals Google Genie 3 in quality while being fully accessible to developers

- Three model versions are planned: Base (Cam) for camera control, Base (Act) for action control, and Fast for real-time interaction. Only Base (Cam) is available so far

- The model maintains long-term memory, preventing the “ghost wall” effect common in other world models

- It supports diverse visual styles from photorealistic to cartoon and game aesthetics

- Real-time deployment achieves sub-1-second latency at 16 frames per second

What Is LingBot-World?

LingBot-World is an open-source world simulation framework developed by Robbyant, Ant Group’s embodied intelligence division. It generates interactive, explorable virtual environments in real-time based on user inputs like keyboard commands or text prompts.

Unlike traditional video generation models such as Sora or Kling that produce pre-rendered content, LingBot-World creates worlds dynamically as you explore them. Press W to move forward, and the model generates what lies ahead. Type “make it rain,” and storm clouds gather overhead. Every frame is computed on-the-fly, not retrieved from pre-made footage.

The model was released in January 2026 with full open-source access, including code, weights, and technical documentation. This positions it as the first publicly available world model that approaches the quality of Google’s closed Genie 3.

Three Core Features That Set LingBot-World Apart

1. Stable Long-Term Memory

The most critical capability of any world model is memory consistency. Without it, turning around in a virtual space might reveal an entirely different environment than what you just left. This “ghost wall” effect breaks immersion and renders the simulation useless for practical applications.

LingBot-World largely addresses this problem. In demonstrated cases, users navigated ancient architectural complexes for over ten minutes without environmental collapse. Buildings remained where they should be. Spatial relationships between objects stayed consistent. Looking away and looking back revealed the same scene.

By contrast, some other world models break down completely within about a minute of exploration. The difference is fundamental to usability.

2. Strong Style Generalization

Many world models only handle photorealistic environments well. When asked to generate stylized content like anime, pixel art, or game aesthetics, they fail.

LingBot-World maintains quality across visual styles because of its training approach. The model learned from three data sources simultaneously:

- Real-world video teaches physical world appearance and behavior

- Game recordings teach how humans interact with virtual environments

- Unreal Engine synthetic data covers extreme camera angles and movement patterns that are difficult to capture naturally

This mixed training approach, similar to domain randomization techniques in robotics, produces a model that generalizes across visual styles rather than memorizing one aesthetic.
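As a rough illustration of mixing the three data sources during training, the sketch below picks a source for each sample in a batch. The mixing weights and the function name `sample_batch_sources` are assumptions made for this example; the source does not state the actual ratios or how the training pipeline samples data.

```python
import random
from collections import Counter

# Hypothetical mixing weights: the real ratios are not published in the source.
SOURCE_WEIGHTS = {
    "real_world_video": 0.5,
    "game_recordings": 0.3,
    "unreal_engine_synthetic": 0.2,
}


def sample_batch_sources(batch_size, weights=SOURCE_WEIGHTS, seed=None):
    """Choose which data source each sample in a batch is drawn from."""
    rng = random.Random(seed)
    names = list(weights)
    probs = [weights[name] for name in names]
    return rng.choices(names, weights=probs, k=batch_size)


if __name__ == "__main__":
    batch = sample_batch_sources(batch_size=16, seed=0)
    # Shows how many samples in this batch came from each source.
    print(Counter(batch))
```

Because every batch can contain samples from all three sources, no single visual style dominates a training step, which is the intuition behind the generalization claim above.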

3. Intelligent Action Agent

LingBot-World includes an AI agent that can autonomously navigate and interact with generated worlds. This is not just automated wandering. The agent demonstrates:

- Collision awareness and avoidance

- Contextual speed changes including stops and direction shifts

- Goal-oriented movement planning

The agent uses a fine-tuned vision-language model that observes frames and outputs action commands. This creates a complete loop where AI generates the world and another AI explores it, enabling emergent behaviors and discoveries.
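The observe-and-act loop described above can be sketched with two stand-ins. `StubWorldModel` and `stub_policy` are hypothetical placeholders, not LingBot-World APIs: the real system generates image frames and uses a fine-tuned vision-language model, whereas this toy version passes short strings so the control flow is easy to follow.

```python
from dataclasses import dataclass


@dataclass
class StubWorldModel:
    """Stand-in for a world model: returns a text 'frame' for each action."""
    step_count: int = 0

    def reset(self) -> str:
        self.step_count = 0
        return "frame_0:clear"

    def step(self, action: str) -> str:
        self.step_count += 1
        # An obstacle appears on every 4th frame so the policy has something to react to.
        state = "wall_ahead" if self.step_count % 4 == 0 else "clear"
        return f"frame_{self.step_count}:{state}"


def stub_policy(frame: str) -> str:
    """Stand-in for the vision-language model: turn at obstacles, else move forward."""
    return "left" if frame.endswith("wall_ahead") else "forward"


def run_episode(world, policy, num_steps):
    """Alternate between observing a frame and sending an action back to the world."""
    frame = world.reset()
    actions = []
    for _ in range(num_steps):
        action = policy(frame)      # the agent observes the current frame
        actions.append(action)
        frame = world.step(action)  # the world generates the next frame
    return actions


if __name__ == "__main__":
    print(run_episode(StubWorldModel(), stub_policy, num_steps=6))
```

Swapping the stubs for a real generator and a real policy would keep the same loop structure: one component produces frames, the other consumes them and emits the next action.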

LingBot-World Model Versions Explained

Robbyant has announced three distinct versions of LingBot-World, each optimized for different use cases. Only Base (Cam) is available now; the other two are pending release.

LingBot-World-Base (Cam)

This version provides camera pose control for cinematographic applications.

| Specification | Details |
| --- | --- |
| Control Type | Camera poses and trajectories |
| Resolutions | 480P and 720P |
| Best For | Controlled camera movements, cinematic shots |
| Status | Available now |

Use Base (Cam) when you need precise control over camera movements like tracking shots, orbital movements, tilts, and pans.

LingBot-World-Base (Act)

This version accepts structured action commands for character and agent control.

| Specification | Details |
| --- | --- |
| Control Type | Action instructions and behavior commands |
| Best For | Character animation, agent behavior simulation |
| Status | Pending release |

Use Base (Act) when your application requires control over subject movement, gestures, and behavioral sequences.

LingBot-World-Fast

Optimized for real-time interaction with minimal latency.

| Specification | Details |
| --- | --- |
| Latency | Under 1 second |
| Frame Rate | 16 FPS |
| Best For | Interactive applications, real-time simulation |
| Status | Pending release |

Use Fast when building interactive experiences where responsiveness matters more than maximum visual quality.

How to Install LingBot-World

Follow these steps to set up LingBot-World on your system.

Prerequisites

- CUDA-capable GPU (enterprise-grade recommended for full resolution)

- PyTorch 2.4.0 or higher

- Python 3.8+
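Before installing, it can help to confirm the prerequisites programmatically. The following is a minimal sketch; the helper names `parse_version` and `check_environment` are made up for this example and are not part of the LingBot-World repository. It only checks the Python version, the PyTorch version, and whether CUDA is visible to PyTorch.

```python
import sys
from itertools import takewhile


def parse_version(text):
    """Turn a version string such as '2.4.0+cu121' into a tuple like (2, 4, 0)."""
    core = text.split("+")[0]
    parts = []
    for piece in core.split(".")[:3]:
        digits = "".join(takewhile(str.isdigit, piece))
        parts.append(int(digits) if digits else 0)
    return tuple(parts)


def check_environment():
    """Report whether the listed prerequisites appear to be met."""
    report = {"python_ok": sys.version_info >= (3, 8)}
    try:
        import torch
    except ImportError:
        # PyTorch is not installed, so neither check below can pass.
        report["torch_ok"] = False
        report["cuda_ok"] = False
        return report
    report["torch_ok"] = parse_version(torch.__version__) >= (2, 4, 0)
    report["cuda_ok"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'yes' if ok else 'no'}")
```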

Step 1: Clone the Repository

| git clone https://github.com/robbyant/lingbot-world.git && cd lingbot-world |

Step 2: Install Dependencies

| pip install -r requirements.txt |

Step 3: Install Flash Attention

| pip install flash-attn --no-build-isolation |

Step 4: Download Model Weights

| pip install "huggingface_hub[cli]" && huggingface-cli download robbyant/lingbot-world-base-cam --local-dir ./lingbot-world-base-cam |

Alternative download sources include ModelScope for users in regions with limited HuggingFace access.
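The Step 4 download can also be scripted from Python using the `snapshot_download` function in the `huggingface_hub` package. This is a sketch under the assumption that the repository ID and target directory match the CLI command in Step 4; the wrapper name `download_weights` is introduced here for illustration only.

```python
def download_weights(
    repo_id="robbyant/lingbot-world-base-cam",
    local_dir="./lingbot-world-base-cam",
):
    """Download a model repository from HuggingFace into local_dir."""
    # Imported lazily so that defining this helper does not require the package.
    from huggingface_hub import snapshot_download

    # Returns the local path of the downloaded snapshot.
    return snapshot_download(repo_id=repo_id, local_dir=local_dir)
```

Calling `download_weights()` starts the download, so run it only when you have enough disk space and a stable connection.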

How to Generate Videos with LingBot-World

Basic 480P Generation

Run this command for standard resolution output with camera control:

| torchrun --nproc_per_node=8 generate.py --task i2v-A14B --size 480*832 --ckpt_dir lingbot-world-base-cam --image examples/00/image.jpg --action_path examples/00 --dit_fsdp --t5_fsdp --ulysses_size 8 --frame_num 161 --prompt "Your scene description here" |

Higher Quality 720P Generation

For better visual fidelity:

| torchrun --nproc_per_node=8 generate.py --task i2v-A14B --size 720*1280 --ckpt_dir lingbot-world-base-cam --image examples/00/image.jpg --action_path examples/00 --dit_fsdp --t5_fsdp --ulysses_size 8 --frame_num 161 --prompt "Your scene description here" |

Extended Video Generation

Increase the frame_num parameter for longer videos. Setting it to 961 produces approximately one minute of footage at 16 FPS, assuming sufficient GPU memory.
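The relationship between `frame_num` and clip length is simple arithmetic, sketched below. The 16 FPS rate comes from the text. The `+ 1` in `frames_for_duration` is an assumption inferred from the two values used in this article (161 and 961, both of the form seconds × 16 + 1); the source does not say whether other values are accepted.

```python
FPS = 16  # frame rate stated in the article


def duration_seconds(frame_num, fps=FPS):
    """Length of a clip in seconds for a given frame count."""
    return frame_num / fps


def frames_for_duration(seconds, fps=FPS):
    """Frame count for a target duration, following the seconds * fps + 1 pattern (assumed)."""
    return int(round(seconds * fps)) + 1


if __name__ == "__main__":
    print(duration_seconds(161))    # 10.0625 seconds (the default examples)
    print(duration_seconds(961))    # 60.0625 seconds (about one minute)
    print(frames_for_duration(30))  # 481 frames for roughly 30 seconds
```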

Generation Without Control Actions

Remove the --action_path parameter to let the model generate autonomously:

| torchrun --nproc_per_node=8 generate.py --task i2v-A14B --size 480*832 --ckpt_dir lingbot-world-base-cam --image examples/00/image.jpg --dit_fsdp --t5_fsdp --ulysses_size 8 --frame_num 161 --prompt "Your scene description here" |
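The three commands above differ only in resolution and in whether an action path is passed, so they can be assembled by a small helper. This is a sketch: `build_generate_command` is a hypothetical function, it uses only the flags that appear in the commands above, and tying `--ulysses_size` to the GPU count is an assumption based on both being 8 in every example.

```python
import shlex


def build_generate_command(
    prompt,
    size="480*832",
    frame_num=161,
    action_path="examples/00",
    image="examples/00/image.jpg",
    ckpt_dir="lingbot-world-base-cam",
    num_gpus=8,
):
    """Assemble the torchrun argument list for generate.py."""
    cmd = [
        "torchrun", f"--nproc_per_node={num_gpus}", "generate.py",
        "--task", "i2v-A14B",
        "--size", size,
        "--ckpt_dir", ckpt_dir,
        "--image", image,
    ]
    if action_path is not None:
        # Omit this flag to let the model generate without control actions.
        cmd += ["--action_path", action_path]
    cmd += [
        "--dit_fsdp", "--t5_fsdp",
        "--ulysses_size", str(num_gpus),  # assumed to match the GPU count
        "--frame_num", str(frame_num),
        "--prompt", prompt,
    ]
    return cmd


if __name__ == "__main__":
    cmd = build_generate_command("A quiet mountain village", size="720*1280")
    # shlex.join quotes arguments such as the prompt and the size safely.
    print(shlex.join(cmd))
```

Passing the resulting list to `subprocess.run(cmd, check=True)` avoids shell quoting problems with the prompt text and with the `*` in the size argument.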

LingBot-World vs Google Genie 3: Key Differences

| Feature | LingBot-World | Google Genie 3 |
| --- | --- | --- |
| Access | Open-source, free | Closed, no public access |
| Code Available | Yes | No |
| Model Weights | Downloadable | Not available |
| Real-time Mode | Yes (Fast version, pending release) | Yes (24 FPS at 720p, per Google DeepMind) |
| Documentation | Full technical report | Limited demos only |
| Commercial Use | Permitted | Not applicable |

The primary advantage of LingBot-World is accessibility. While Genie 3 demonstrated impressive capabilities when it was announced in August 2025, it remains unavailable for public use. LingBot-World delivers comparable quality with complete transparency.

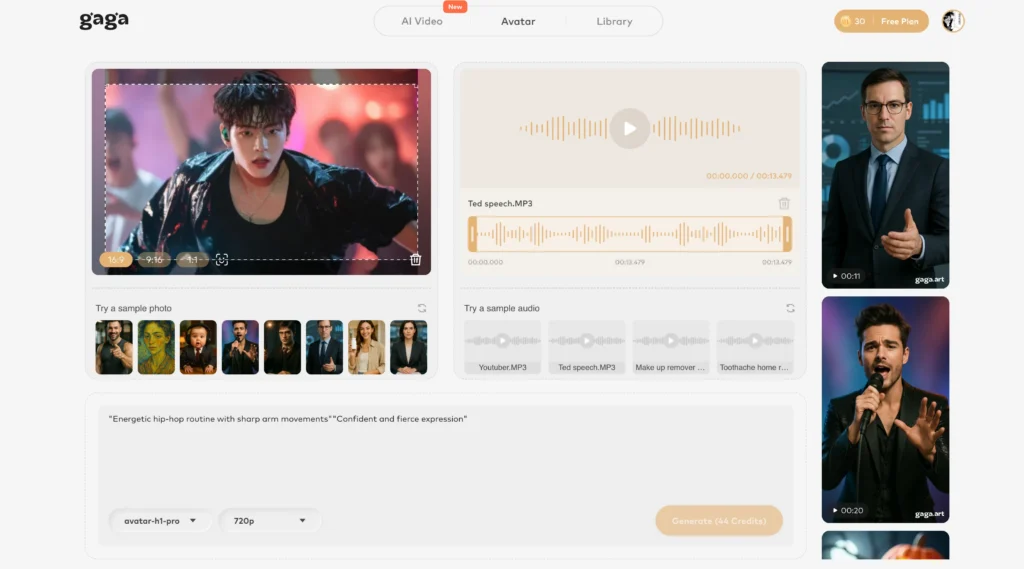

Bonus: Enhance Your AI Video Projects with Gaga AI

While LingBot-World excels at world simulation, content creators often need complementary tools for complete video production workflows. Gaga AI offers several capabilities that pair well with world model outputs.

Image to Video Generation

Transform static images into dynamic video sequences. This works well for creating establishing shots or adding motion to LingBot-World-generated stills.

AI Avatar Creation

Generate realistic digital humans for populating your world model environments or creating presenter-style content without live filming.

Voice Cloning

Replicate specific voices for consistent character dialogue across your generated content. Useful for narration or character voices in world model explorations.

Text-to-Speech

Convert written scripts to natural-sounding audio. Combine with world model footage for documentary-style content or guided virtual tours.

These tools address production needs that world models alone cannot fulfill, creating a more complete content creation pipeline.

Why LingBot-World Matters for AI Development

World models represent a fundamental shift in how AI systems understand and interact with environments. Here is why LingBot-World is significant:

For Game Development

Developers can prototype entire game worlds without traditional asset creation pipelines. The model generates consistent environments that respond to player actions naturally.

For Embodied AI and Robotics

Robots need to understand how the physical world works before operating in it. World models provide low-cost, high-fidelity simulation environments where robotic systems can safely learn and fail.

For Content Creation

Filmmakers and content creators gain access to infinite, controllable virtual sets that respond to direction in real-time.

For AI Research

The open-source release democratizes access to world model technology, enabling researchers without enterprise resources to advance the field.

Current Limitations and Roadmap

Known Constraints

Hardware Requirements: Full-resolution inference requires enterprise GPUs. Consumer hardware cannot run the model at intended quality levels.

Memory Architecture: Long-term consistency emerges from context windows rather than explicit memory modules. Extended sessions may experience environmental drift.

Control Granularity: Current control is limited to basic navigation. Fine manipulation of specific objects is not yet supported.

Quality Trade-offs: The Fast version sacrifices some visual fidelity for real-time performance.
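To see why a context-window approach to memory can drift over long sessions, consider the toy model below. It is only an illustration of a fixed-size window, not LingBot-World's actual mechanism; the class name `ContextWindow` and the window size are assumptions for this sketch.

```python
from collections import deque


class ContextWindow:
    """Keeps only the most recent frames, like a fixed-size context."""

    def __init__(self, max_frames):
        # A deque with maxlen silently drops the oldest item when full.
        self.frames = deque(maxlen=max_frames)

    def add(self, frame_id):
        self.frames.append(frame_id)

    def remembers(self, frame_id):
        return frame_id in self.frames


if __name__ == "__main__":
    window = ContextWindow(max_frames=4)
    for frame_id in range(6):
        window.add(frame_id)
    print(window.remembers(5))  # True: a recent frame is still in context
    print(window.remembers(0))  # False: the earliest frame has been dropped
```

Once an early view falls out of the window, nothing forces a later revisit to match it, which is one way to understand the drift noted under Memory Architecture and the motivation for explicit memory modules.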

Planned Improvements

The development team has outlined these priorities:

1. Expanded action space supporting complex interactions

2. Explicit memory modules for infinite-duration stability

3. Elimination of generation drift

4. Broader hardware compatibility

Frequently Asked Questions

What exactly is a world model?

A world model is an AI system that simulates interactive environments in real-time. Unlike video generators that output pre-computed footage, world models create content dynamically based on user actions, similar to how a video game engine works but without pre-built assets.

Is LingBot-World free to use?

Yes. LingBot-World is fully open-source with code and model weights available on GitHub, HuggingFace, and ModelScope. Commercial use is permitted.

What hardware do I need to run LingBot-World?

The model requires enterprise-grade GPUs for full resolution inference. Eight GPUs are recommended for the standard multi-GPU inference setup. Consumer hardware will experience significant limitations.

How long can LingBot-World generate videos?

The Base model can generate minute-long videos while maintaining environmental consistency. Setting frame_num to 961 produces approximately 60 seconds at 16 FPS.

Can LingBot-World generate game-style graphics?

Yes. The model handles diverse visual styles including photorealistic, cartoon, anime, and game aesthetics because it was trained on mixed data from real videos, game recordings, and synthetic renders.

What is the difference between LingBot-World and Sora?

Sora generates pre-rendered video content that plays back linearly. LingBot-World creates interactive environments that respond to user input in real-time. Sora is a video generator; LingBot-World is an interactive simulator.

How does LingBot-World maintain consistency when I turn around?

The model uses enhanced contextual memory to track environmental state across frames. This prevents the “ghost wall” effect where turning around reveals different scenery than what you left.

Can I control characters in LingBot-World?

The Base (Act) version, which is pending release, is designed to accept action commands for character control. The Base (Cam) version, which is available now, focuses on camera movement control.

Is LingBot-World better than Google Genie 3?

Quality is comparable based on available demonstrations. The key difference is accessibility. LingBot-World is open-source and usable today, while Genie 3 remains closed.

What applications can I build with LingBot-World?

Practical applications include game prototyping, virtual production for film, robotics simulation, architectural visualization, and interactive entertainment experiences.

Final Words

LingBot-World represents a meaningful advancement in accessible AI technology. By open-sourcing a world model that rivals closed alternatives, Robbyant has enabled researchers, developers, and creators to explore interactive world generation without enterprise budgets or special access.

The technology has immediate applications in gaming, content creation, and robotics simulation. Its limitations around hardware requirements and control granularity are acknowledged and targeted for improvement.

For those working at the intersection of AI and interactive media, LingBot-World provides a practical foundation to build upon today.

Resources:

- GitHub: github.com/robbyant/lingbot-world

- Project Page: technology.robbyant.com/lingbot-world

- Models: HuggingFace and ModelScope