There’s a test in the AI image generation world that has stumped every major model—until now. Type “11:15 on the clock and a wine glass filled to the top,” and watch as even the most sophisticated AI systems fail spectacularly. Clock faces morph into abstract art. Wine glasses hover half-empty or impossibly overfilled. But Nano Banana 2 did something remarkable: it nailed both elements perfectly.

This breakthrough moment signals more than just incremental progress. Google Nano Banana has evolved from an experimental model that appeared mysteriously in LM Arena to a powerhouse that’s redefining what’s possible in AI-powered image creation. With Nano Banana 2, Google has delivered cleaner edges, up to 3× better instruction accuracy in early testing, revolutionary text rendering capabilities, and a multi-step generation workflow that thinks like a professional designer.

Whether you’re a content creator tired of endless re-rolls, a product designer demanding brand-color precision, or a digital marketer seeking faster iteration cycles, understanding what makes Nano Banana AI different isn’t optional anymore—it’s essential.


What is Nano Banana 2?

Nano Banana 2 represents Google’s most advanced AI image editor and generator, built on the Gemini 2.5 Flash foundation with anticipated upgrades to Gemini 3.0 Pro. Unlike traditional image generators that simply pattern-match keywords, Google Nano Banana employs deep reasoning capabilities to understand context, spatial relationships, and nuanced creative intent.

The model first appeared in LM Arena’s “battle mode,” where users unknowingly tested it alongside other AI systems. Its performance was so striking that it quickly gained attention across developer communities. The preview version later surfaced briefly on Media.io, generating massive buzz when users discovered its unprecedented text rendering accuracy and instruction-following precision.

Nano Banana AI excels at both text-to-image generation and image-to-image editing through natural language commands. What sets it apart is its ability to “think through” visual tasks—planning output, generating initial images, self-reviewing through built-in analysis, correcting errors, and iterating before final delivery. This multi-step approach mimics how professional designers work, resulting in outputs that require minimal post-processing.

The Nano Banana model supports comprehensive aspect ratios (1:1, 2:3, 3:2, 3:4, 4:3, 9:16, 16:9, 21:9) with native 2K output and optional 4K upscaling. This versatility makes it ideal for Instagram posts, YouTube thumbnails, website mockups, and print materials—all from a single platform. As part of Google’s broader Gemini ecosystem, it represents a significant leap in AI’s ability to understand and execute complex visual tasks with human-level contextual awareness.
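To make the aspect-ratio and resolution options concrete, here is a minimal Python sketch that converts each listed ratio into pixel dimensions. It assumes that "1K", "2K", and "4K" refer to a long edge of 1024, 2048, and 4096 pixels; the model's actual output sizes have not been published and may differ. The `dimensions` helper is hypothetical and not part of any Google API.

```python
# Aspect ratios listed in the article, written as width:height.
ASPECT_RATIOS = ["1:1", "2:3", "3:2", "3:4", "4:3", "9:16", "16:9", "21:9"]

# Assumption: each tier names the long edge in pixels. Not an official spec.
LONG_EDGE = {"1K": 1024, "2K": 2048, "4K": 4096}


def dimensions(ratio: str, tier: str = "2K") -> tuple[int, int]:
    """Return (width, height) with the long edge fixed by the chosen tier."""
    w, h = (int(part) for part in ratio.split(":"))
    long_edge = LONG_EDGE[tier]
    if w >= h:
        # Landscape or square: width is the long edge.
        return long_edge, round(long_edge * h / w)
    # Portrait: height is the long edge.
    return round(long_edge * w / h), long_edge


if __name__ == "__main__":
    for ratio in ASPECT_RATIOS:
        print(ratio, dimensions(ratio, "2K"))
```

Under these assumptions a 16:9 thumbnail at the 2K tier comes out at 2048×1152, and the same ratio flipped to 9:16 gives 1152×2048.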

Nano Banana 2 vs Nano Banana 1: Key Improvements

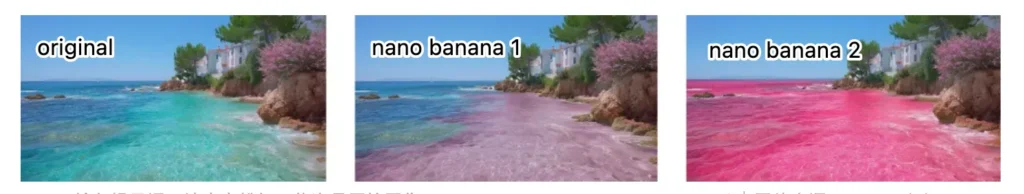

Visual Quality Enhancements

The quality leap from version 1 to Nano Banana 2 is immediately visible in side-by-side comparisons. Early test images from the Media.io preview reveal noticeably cleaner edges and dramatically fewer artifacts. The same prompts that caused version 1 to produce morphing earrings or extra teeth now render flawlessly in Nano Banana 2.

Testing groups report up to 3× better instruction-following accuracy compared to the original model. Edge fidelity has improved substantially—fabric weave appears with realistic texture, hair strands maintain individual definition, and metal reflections capture environmental lighting with precision. Native 2K output with optional 4K upscaling means tiny text on product packaging and micro-details on watch faces finally render legibly without external enhancement tools.

One particularly revealing test involved a moody product photograph: “matte-black thermos on wet concrete, blue rim light, 35mm, shallow DOF.” Version 1 produced decent results but muddied rim lights and frequently forgot surface wetness entirely. Nano Banana 2 nailed reflections, maintained believable bokeh, and preserved specific lighting cues with remarkable consistency. Users report finding keeper images on the first or second generation instead of hunting through ten attempts.

Color accuracy has also seen major upgrades. Brand-color precision was a persistent pain point with version 1, but Nano Banana 2 follows color prompts more faithfully, landing closer to target HEX values and maintaining consistency across successive runs. Skin tones appear less waxy, especially under mixed lighting scenarios where version 1 often flattened out tonal variation.
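One way to check how close an output lands to a target HEX value is to sample a pixel from the generated image and measure its distance from the brand color. The sketch below is an illustration only: it uses plain Euclidean distance in RGB, which is simple but not perceptually uniform, and the helper names and the default tolerance of 10 are assumptions rather than anything the model or Google prescribes.

```python
import math


def hex_to_rgb(hex_color: str) -> tuple[int, int, int]:
    """Convert '#RRGGBB' (leading '#' optional) to an (r, g, b) tuple."""
    value = hex_color.lstrip("#")
    if len(value) != 6:
        raise ValueError(f"expected 6 hex digits, got {hex_color!r}")
    return int(value[0:2], 16), int(value[2:4], 16), int(value[4:6], 16)


def rgb_distance(target_hex: str, sampled_hex: str) -> float:
    """Euclidean distance between two colors in RGB space (0 means identical)."""
    target = hex_to_rgb(target_hex)
    sampled = hex_to_rgb(sampled_hex)
    return math.sqrt(sum((t - s) ** 2 for t, s in zip(target, sampled)))


def within_tolerance(target_hex: str, sampled_hex: str, tolerance: float = 10.0) -> bool:
    """True when the sampled color is within the (assumed) tolerance of the target."""
    return rgb_distance(target_hex, sampled_hex) <= tolerance


if __name__ == "__main__":
    # Hypothetical brand color and a color sampled from a generated image.
    print(rgb_distance("#1A73E8", "#1C75E6"))
    print(within_tolerance("#1A73E8", "#1C75E6"))
```

For stricter brand work, a perceptual metric such as a Delta E calculation in a Lab color space would be a better fit than raw RGB distance.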

Technical Architecture Upgrades

Under the hood, Nano Banana 2 leverages Gemini 2.5 Flash as its foundation, with potential future upgrades to Gemini 3.0 Pro as the base model matures. The most intriguing architectural change is a revolutionary multi-step generation workflow that fundamentally changes how the AI approaches image creation.

Instead of generating images in a single pass, Nano Banana 2 follows a five-stage process:

1. Planning phase: The model spends considerable time analyzing the prompt and planning the optimal output approach

2. Initial generation: Creates a preliminary image based on the planned approach

3. Self-review: Uses built-in image analysis capabilities to evaluate the generated result

4. Error correction: Identifies specific issues like incorrect text, misplaced objects, or lighting inconsistencies

5. Iterative refinement: Makes targeted improvements before delivering the final result
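The five stages above can be sketched as a simple control loop. This is a conceptual illustration, not Google's implementation: the real pipeline is not public, and every name here (`generate_with_review`, the toy stage functions, the `max_rounds` cap) is hypothetical. The "image" is a plain dictionary standing in for pixel data.

```python
from typing import Callable, Dict, List

Image = Dict[str, object]  # toy stand-in for real image data


def generate_with_review(
    prompt: str,
    plan: Callable[[str], str],
    generate: Callable[[str], Image],
    review: Callable[[Image], List[str]],
    correct: Callable[[Image, List[str]], Image],
    max_rounds: int = 3,
) -> Image:
    """Plan, generate, then review and correct until no issues remain."""
    brief = plan(prompt)                # 1. planning phase
    image = generate(brief)             # 2. initial generation
    for _ in range(max_rounds):
        issues = review(image)          # 3. self-review
        if not issues:                  # nothing left to fix
            break
        image = correct(image, issues)  # 4-5. error correction and refinement
    return image


# Toy stages, for illustration only.
def toy_plan(prompt: str) -> str:
    return prompt.strip().lower()


def toy_generate(brief: str) -> Image:
    return {"brief": brief, "caption": "Gemini 31"}  # deliberate typo


def toy_review(image: Image) -> List[str]:
    return [] if image["caption"] == "Gemini 3" else ["caption typo"]


def toy_correct(image: Image, issues: List[str]) -> Image:
    fixed = dict(image)
    fixed["caption"] = "Gemini 3"
    return fixed


if __name__ == "__main__":
    print(generate_with_review("  News Desk ", toy_plan, toy_generate, toy_review, toy_correct))
```

The cap on review rounds is a design choice in this sketch: it bounds latency if the review step keeps finding issues that the correction step cannot resolve.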

This iterative correction loop explains the dramatically cleaner results in preview samples. The practical effect is better prompt adherence without the brittle “over-literal” look that plagued earlier models. Nano Banana AI also recovers hands and small props more reliably—likely from improved fine-grain supervision in the training loop.

The architecture improvements enable the model to handle complex scenarios that stumped version 1: precise coloring tasks, advanced viewpoint control, and accurate text element correction. It’s the difference between an AI that guesses at your intent and one that actually understands it.

Resolution & Format Support

Version 1 often defaulted to 768×768 resolution to avoid edge quality degradation. Nano Banana 2 maintains stable native 2K output without the mushiness that affected lower-resolution generations. The expanded aspect ratio support transforms workflow efficiency—creators can now generate for Instagram (1:1, 4:3), YouTube thumbnails (16:9), Pinterest (2:3), and ultra-wide displays (21:9) without format conversion hacks.

Multiple output modes (1K, 2K, 4K) provide flexibility for different use cases. Social media posts might only need 1K resolution, while print materials benefit from 4K output. Importantly, upscaled Nano Banana 2 output shows significantly fewer artifacts than version 1: lines stay crisp after 2× magnification, and fine details don’t dissolve into blur.

This resolution consistency matters enormously for professional workflows. Product photographers can generate catalog images that hold up under close inspection. UI designers can create mockups with readable interface text. Marketing teams can produce ad creatives that work across digital and print channels without separate generation runs.

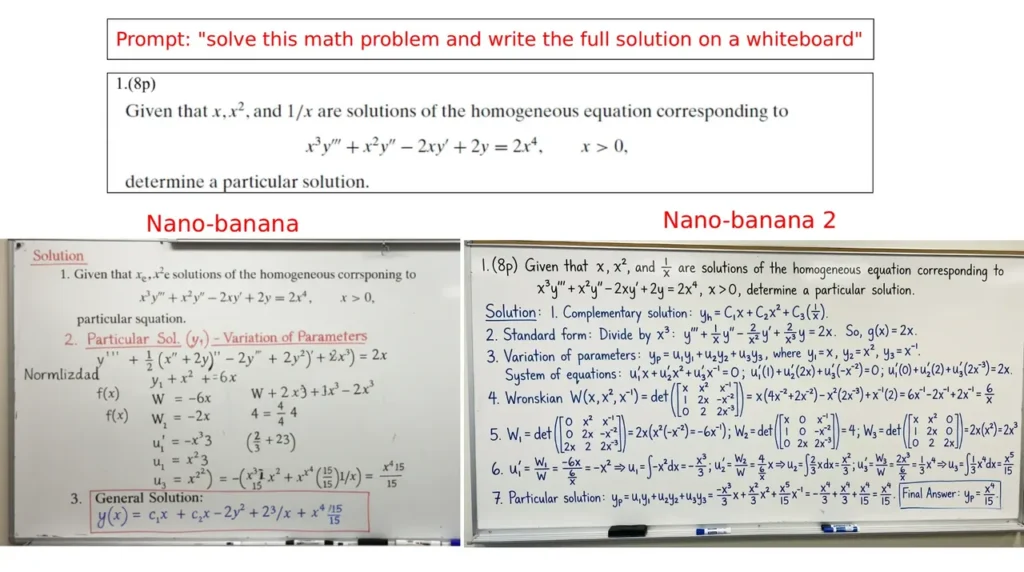

Text Rendering Breakthrough

Perhaps the most dramatic improvement in Nano Banana 2 is its text rendering capability. The model can now accurately generate complex text scenarios that were impossible for version 1 and remain challenging for most competitors.

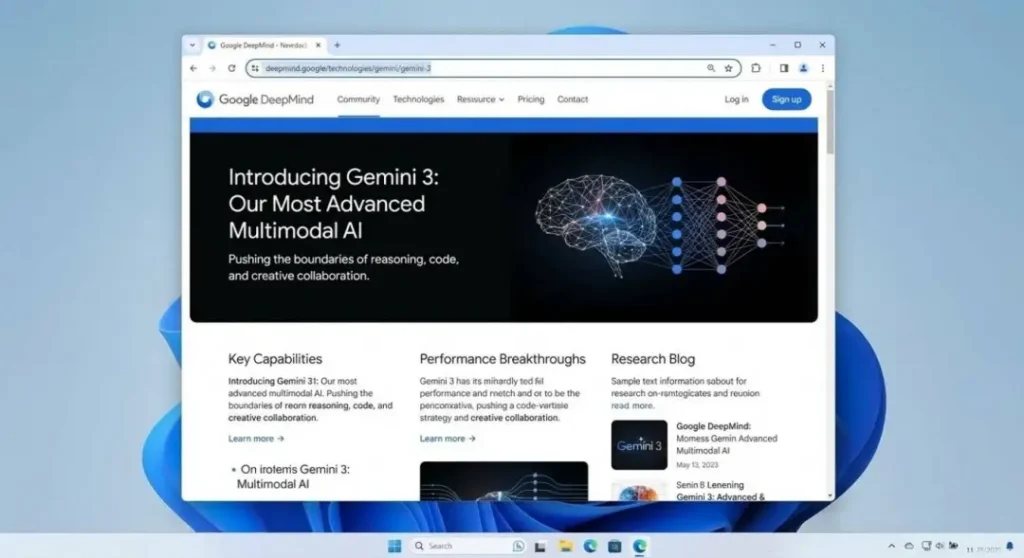

Windows desktop screenshots with readable browser content? Check. News broadcast graphics with accurate chyron text and proper reflections on studio floors? Done. Website mockups with dense paragraphs that avoid gibberish? Absolutely. One impressive example showed a Google DeepMind webpage screenshot with correctly formatted text throughout—though eagle-eyed observers noted occasional minor typos like “Gemini 31” instead of “Gemini 3.”

The model handles multi-language text across different font styles, from handwritten chalk equations on blackboards to polished broadcast graphics. When text content is focused rather than overwhelming, Nano Banana 2’s error rate drops dramatically. A test involving a news broadcast showed perfect text rendering on screen, on the lower-third chyron, and even in floor reflections—all while maintaining natural lighting and composition.
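Text accuracy like this can be quantified by comparing the text you asked for with the text that actually appears in the image. The sketch below computes a character error rate from Levenshtein edit distance. It assumes the rendered string has already been read off the image, by OCR or by hand; that step is not shown, and the function names are illustrative.

```python
def edit_distance(expected: str, rendered: str) -> int:
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    previous = list(range(len(rendered) + 1))
    for i, exp_char in enumerate(expected, start=1):
        current = [i]
        for j, ren_char in enumerate(rendered, start=1):
            cost = 0 if exp_char == ren_char else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution or match
            ))
        previous = current
    return previous[-1]


def character_error_rate(expected: str, rendered: str) -> float:
    """Edit distance divided by the length of the expected text."""
    if not expected:
        raise ValueError("expected text must not be empty")
    return edit_distance(expected, rendered) / len(expected)


if __name__ == "__main__":
    # The typo mentioned earlier: "Gemini 31" rendered instead of "Gemini 3".
    print(edit_distance("Gemini 3", "Gemini 31"))
    print(character_error_rate("Gemini 3", "Gemini 31"))
```

For the "Gemini 31" typo the distance is a single inserted character, which is why such errors are easy to miss at a glance but trivial to flag automatically.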

This breakthrough in text accuracy eliminates one of the most frustrating bottlenecks in AI image generation. Designers no longer need to generate blank templates and add text in post-production. Marketing teams can create complete ad mockups in a single pass. Educational content creators can generate explanatory diagrams with legible annotations.

Advanced Features of Nano Banana 2

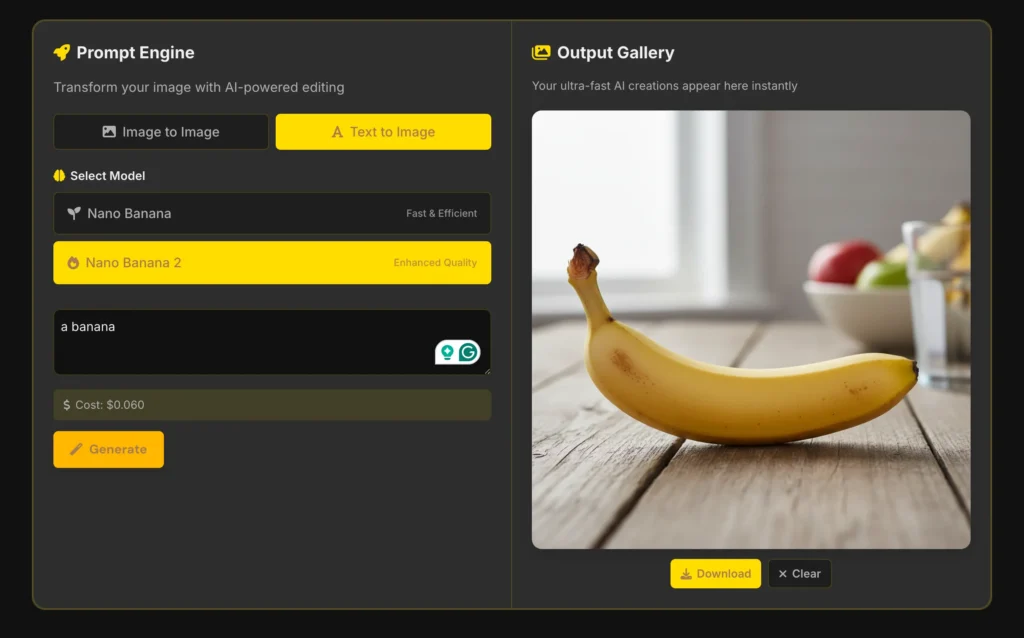

Image to Image Capabilities

Image to image editing with Nano Banana 2 transforms how creators manipulate existing visuals. The one-shot editing approach allows natural language commands to make precise changes while preserving overall scene integrity. Instead of masking, layering, and extensive manual adjustments, you can simply describe the desired change.

The model’s advanced neural networks comprehend 3D relationships within 2D images, enabling sophisticated object manipulation. Change an old man’s clothes to a denim cowboy jacket, and the system understands how fabric drapes, where shadows fall, and how the new garment interacts with the body beneath. Replace David with Iron Man on a magazine cover, and the lighting, perspective, and composition all adjust intelligently.

Context-aware editing represents a significant leap over traditional image editors. Nano Banana AI doesn’t just swap pixels—it understands spatial relationships, lighting conditions, and visual logic. Make water pink in a landscape photo, and the reflections, transparency, and environmental lighting all shift appropriately. This contextual understanding eliminates the uncanny “pasted-on” look that plagues simpler editing tools.

Background replacement, object addition and removal, texture adjustments, and lighting modifications all benefit from this sophisticated spatial awareness. The image to image workflow maintains consistency across edits, meaning character faces stay recognizable across carousel posts, product colors remain accurate through multiple variations, and brand aesthetics persist throughout batch generations.

Text to Image Generation

Text to image generation with Nano Banana 2 creates stunningly realistic images from written descriptions with unprecedented accuracy and detail. The model’s enhanced world knowledge means it understands cultural references, brand identities, and stylistic conventions that version 1 missed entirely.

Request a GTA 6 trailer screenshot, and Nano Banana 2 generates an authentic-looking YouTube page complete with the correct logo styling. Ask for a Netflix live-action adaptation scene, and it recognizes appropriate branding and even relevant actors. This world knowledge extends to anime characters, cyberpunk aesthetics, architectural styles, and countless other domains.

Style versatility separates professional-grade text to image generation from novelty tools. Nano Banana 2 handles photorealistic portraits, anime illustrations, cyberpunk cityscapes, film-grain vintage photography, and countless other aesthetic approaches. The model doesn’t just apply filters—it understands the underlying principles that make each style distinctive.

A test prompt for a cyberpunk hacker robot working among monitors yielded images with appropriate neon accents, gritty textures, holographic displays, and atmospheric lighting that felt genuinely cinematic. Another request for a film-grain group photo produced images with authentic analog photography characteristics: natural color grading, realistic depth of field, and the subtle imperfections that make analog images feel real.

The Nano Banana model also follows technical specifications that photographers and cinematographers care about. Specify “35mm lens look” and get a natural, moderately wide perspective. Request “macro” and see extremely shallow depth of field. Ask for “telephoto look” and receive natural background compression. This technical accuracy matters enormously for professional creators who need specific visual characteristics.

Deep Reasoning & Prompt Understanding

One of version 1’s most frustrating limitations was prompt drift—asking for specific attributes and getting generic interpretations instead. Nano Banana 2 addresses this through genuinely improved reasoning capabilities that interpret nuanced instructions accurately.

The model now reliably follows lens specifications (35mm, macro, telephoto) with appropriate perspective and depth-of-field characteristics. Lighting cues (rim light, overcast, golden hour) translate into accurate illumination patterns rather than vague approximations. Color temperature requests (warm tungsten, cool daylight, mixed sources) produce believable lighting scenarios.

This deep prompt understanding extends to complex, multi-attribute requests. A prompt combining specific camera angle, lighting setup, color palette, subject positioning, and stylistic treatment no longer requires multiple attempts to capture all elements. Nano Banana AI processes the entire request holistically, understanding how each element interacts with the others.
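When a prompt carries several attributes at once, it helps to keep them as separate fields and assemble the final string in a fixed order, so that nothing is dropped while iterating. The following is a minimal sketch of that idea; `ShotSpec` and its field names are hypothetical conveniences, not a format the model requires.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ShotSpec:
    """Structured description of a shot; only the subject is required."""
    subject: str
    lighting: Optional[str] = None
    lens: Optional[str] = None
    depth_of_field: Optional[str] = None
    style: Optional[str] = None

    def to_prompt(self) -> str:
        """Join the filled-in attributes into one comma-separated prompt."""
        parts = [self.subject, self.lighting, self.lens, self.depth_of_field, self.style]
        return ", ".join(part for part in parts if part)


if __name__ == "__main__":
    spec = ShotSpec(
        subject="matte-black thermos on wet concrete",
        lighting="blue rim light",
        lens="35mm",
        depth_of_field="shallow DOF",
    )
    print(spec.to_prompt())
```

Filled in with the thermos example from earlier in the article, this reproduces the original prompt string, and changing a single field (say, the lighting) yields a controlled variant for A/B comparison.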

The reduction in prompt drift means creators can trust their instructions will be followed. This reliability transforms creative workflows—less time wasted on re-rolls, fewer compromises accepting “close enough” results, and more predictable outcomes when iterating on successful prompts. For professional work where client specifications must be met precisely, this consistency is invaluable.

Real-World Use Cases & Applications

Product Design & Marketing

Brand color accuracy and edge precision make Nano Banana 2 invaluable for product visualization. An e-commerce company reportedly cut ad-visual generation time from 8 hours to 45 minutes using early access builds. Product mockups land close to target HEX color values, packaging text stays legible, and material textures render realistically.

Marketing teams can generate A/B test variants maintaining consistent product positioning while varying backgrounds, lighting, and compositional elements. The higher keeper-per-batch ratio (7-8 out of 10 versus 4-5 with version 1) means fewer rejected images and faster approval cycles.

Content Creation

Social media managers, YouTubers, and digital publishers benefit from format versatility and rapid generation speeds. Create Instagram carousel posts with consistent character appearances across all slides. Generate YouTube thumbnails with eye-catching compositions and readable text overlays. Produce blog header images matching specific aspect ratios without cropping compromises.

Sub-10-second generation times enable real-time creative direction during team meetings. Instead of describing concepts verbally and waiting hours for design mockups, stakeholders can iterate visually during active discussions.

Digital Art & Illustration

Artists use Nano Banana 2 for concept exploration, reference generation, and base image creation. The style versatility allows quick exploration across aesthetic approaches—test cyberpunk versus steampunk interpretations, compare warm versus cool color palettes, evaluate different compositional arrangements.

The image to image capabilities enable artists to refine AI-generated bases with traditional tools, combining computational efficiency with human artistic judgment. This hybrid workflow accelerates production while maintaining creative control.

Photo Restoration & Enhancement

Historical photo restoration benefits from Nano Banana AI’s understanding of period-appropriate aesthetics. Colorize black-and-white images with believable skin tones and environmental colors. Repair damage while maintaining authentic film-grain characteristics. Enhance low-resolution images without introducing the artificial sharpness that betrays digital enhancement.

E-commerce Catalog Generation

Retailers generating hundreds of product images benefit enormously from batch consistency and speed improvements. A 10-image product catalog that previously required 2+ minutes now completes in under 90 seconds. More importantly, quality remains stable throughout the batch—no late-run degradation requiring manual quality checks.

Educational Content

The breakthrough text rendering enables educational applications that were previously impossible. Generate mathematical explanations on blackboards with accurate notation. Create anatomical diagrams with properly labeled structures. Produce historical infographics with legible dates, names, and statistical information.

Performance & Speed Improvements

Nano Banana 2 generates complex images in under 10 seconds compared to version 1’s typical 12-15 second window. While a few seconds may seem minor, this improvement transforms iterative creative workflows. The psychological difference between “instant” and “wait a moment” is substantial—faster feedback loops maintain creative momentum and enable more experimental exploration.

Batch processing efficiency compounds these single-image gains. Ten images dropping from 2+ minutes to under 90 seconds changes what’s feasible in time-constrained scenarios. Real-time client presentations become viable. Same-day campaign launches from concept to execution become realistic.

Memory-efficient attention mechanisms in preview builds suggest stable performance even on moderate hardware. The multi-step workflow sounds computationally intensive, but optimized pipeline implementation keeps generation times competitive. This efficiency means creators don’t need cutting-edge GPUs to access advanced capabilities.

The higher keeper-per-batch ratio deserves emphasis beyond raw speed metrics. If accepted outputs rise from 40-50% to 70-80%, effective generation time drops even further. Seven usable images from ten generations is dramatically more efficient than five usable images, even if individual generation times were identical. Combined with faster generation, workflow acceleration is substantial—potentially 3-4× faster end-to-end for real projects.
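The combined effect of faster generation and a higher acceptance rate can be worked out directly: the expected time per usable image is the generation time divided by the acceptance rate. The sketch below uses the article's own figures as inputs; the function names are illustrative, and the calculation ignores review and retouching time.

```python
def seconds_per_keeper(seconds_per_generation: float, acceptance_rate: float) -> float:
    """Expected generation time spent per accepted image."""
    if not 0 < acceptance_rate <= 1:
        raise ValueError("acceptance_rate must be in (0, 1]")
    return seconds_per_generation / acceptance_rate


def speedup(old_seconds: float, old_rate: float, new_seconds: float, new_rate: float) -> float:
    """How many times faster the new setup produces one accepted image."""
    return seconds_per_keeper(old_seconds, old_rate) / seconds_per_keeper(new_seconds, new_rate)


if __name__ == "__main__":
    # Midpoints of the quoted ranges: 13.5 s at 45% versus 10 s at 75%.
    print(speedup(13.5, 0.45, 10.0, 0.75))
    # Favorable ends of the ranges: 15 s at 40% versus 10 s at 80%.
    print(speedup(15.0, 0.40, 10.0, 0.80))
```

With midpoint values the gain is about 2.25×, and at the favorable ends of the ranges it reaches roughly 3×. Figures in the 3-4× range therefore correspond to best-case inputs, such as generation times well under 10 seconds, or to savings in retouching that this arithmetic does not capture.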

Consistency improvements also reduce quality-check overhead. When successive generations with the same seed stay tighter, batch work becomes more predictable. Character faces remain recognizable across series. Product angles stay consistent through variations. This reliability enables confident batch launches without reviewing every single output.

Beyond Images: Exploring AI Video Generation with Gaga AI, Sora, and Veo 3.1

Gaga AI – Your Complete AI Video Solution

While Nano Banana 2 dominates static image generation, many creative workflows require motion. Gaga AI fills this gap as an advanced AI video generator that brings the same breakthrough quality to animated content.

Gaga AI offers comprehensive video generation capabilities including text-to-video creation from written descriptions, image-to-video animation that brings static visuals to life, and intelligent video editing through natural language commands. The platform handles marketing videos, social media content, product demonstrations, explainer animations, and countless other motion-based applications.

The synergy between Nano Banana 2 and Gaga AI creates a complete visual content production pipeline. Generate product images with precise brand colors and sharp details using Nano Banana AI, then animate those images into dynamic product reveals with Gaga AI. Create character portraits with consistent features using image to image editing, then bring those characters to life in narrative sequences with video generation.

Gaga AI excels in scenarios where motion amplifies impact: product demos showing functionality, before-and-after transformations with smooth transitions, testimonial videos with engaging visual backing, and social content where movement captures attention in crowded feeds. The platform’s competitive advantages include faster rendering times than comparable tools, better motion consistency across frames, and intelligent scene understanding that maintains spatial relationships during camera movements.

For content creators managing both static and motion assets, the combination delivers unmatched efficiency. Design thumbnail images with Nano Banana 2, create the actual video content with Gaga AI, and maintain consistent visual branding across all assets without switching between disconnected tools or mismatched aesthetic outputs.

OpenAI Sora

OpenAI’s Sora represents another frontier in AI video generation with impressive capabilities for generating realistic video sequences from text prompts. Sora excels at understanding physical dynamics, creating believable character movements, and maintaining consistency across longer video durations.

The model’s strengths include sophisticated scene composition, natural motion physics, and the ability to generate multiple shots maintaining consistent characters and environments. However, Sora currently faces limitations in generation speed, availability constraints, and pricing that may restrict accessibility for smaller creators and rapid-iteration workflows.

Best use cases for Sora include cinematic short films where quality trumps speed, conceptual demonstrations requiring physically accurate simulations, and creative exploration where generation time isn’t critical. For production environments requiring fast turnaround, Gaga AI offers more practical accessibility.

Google Veo 3.1

Google’s Veo 3.1 brings the company’s AI expertise into video generation, complementing the image capabilities demonstrated by Google Nano Banana. Veo emphasizes controllability, allowing creators to specify camera movements, editing styles, and visual effects with precision.

The integration potential between Nano Banana 2 and Veo 3.1 within Google’s ecosystem could enable seamless workflows where static image generation and video animation share consistent styling, character representations, and brand aesthetics. As both technologies mature, expect tighter integration that leverages shared understanding of visual concepts.

Compared to Gaga AI, Veo 3.1 offers deeper integration with Google’s broader suite of creative tools but may have steeper learning curves for creators unfamiliar with Google’s platforms. Gaga AI’s focused approach on accessible video generation provides a more streamlined experience for creators who prioritize speed and simplicity.

Connecting Back to Image Foundation

These video generation tools build upon the same foundational capabilities that make Nano Banana 2 revolutionary: deep understanding of visual logic, accurate instruction following, and consistent quality across outputs. Whether generating static images or animated sequences, the underlying AI reasoning principles remain constant. For creators building comprehensive content strategies, combining Nano Banana 2’s image precision with Gaga AI’s video capabilities creates a powerful end-to-end production pipeline that maintains quality and consistency from concept through final delivery.

Nano Banana 2 vs Competitors

| Capability | Nano Banana 2 | Flux Kontext | Gemini 2.0 Flash | DALL-E 3 |
| --- | --- | --- | --- | --- |
| Reasoning Ability | Superior multi-step workflow | Basic pattern matching | Moderate contextual understanding | Good concept interpretation |
| Text Preservation | Near-perfect, minimal errors | Poor, frequent gibberish | Moderate accuracy | Limited complex text |
| Spatial Understanding | Comprehensive 3D awareness | Surface-level composition | Moderate depth perception | Good but occasional failures |
| Edit Consistency | Perfect across iterations | Inconsistent variations | Good within sessions | Moderate drift |
| Prompt Following | Up to 3× better accuracy than version 1 | Standard interpretation | Above average | Strong but literal |
| Resolution Options | Native 2K, optional 4K | Typically 1K | 1K-2K variable | Up to 1K standard |
| Generation Speed | Under 10 seconds | 10-15 seconds | 8-12 seconds | 15-20 seconds |

Performance testing reveals Nano Banana AI consistently outperforms competitors in critical areas that matter for professional workflows. The text preservation advantage alone eliminates hours of post-production editing for projects requiring readable in-scene typography. Superior spatial understanding means fewer uncanny composition failures where objects float impossibly or perspective breaks down.

Flux Kontext offers impressive stylistic range but struggles with precise instruction following: great for artistic exploration where unexpected variations are welcome, less suitable for brand-accurate product visualization. Gemini 2.0 Flash demonstrates Google’s broader AI capabilities but lacks the specialized image-generation optimizations present in Nano Banana 2.

DALL-E 3 from OpenAI remains a strong competitor with excellent concept interpretation and artistic capabilities. However, its tendency toward literal prompt interpretation can feel rigid, and text rendering limitations persist. For creators needing accurate text in complex scenes—news broadcasts, website mockups, educational diagrams—Nano Banana 2 holds decisive advantages.

Pricing considerations remain uncertain as Nano Banana 2 hasn’t announced final commercial terms. Preview access suggests tiered options with free limited-generation plans and premium tiers for higher resolution, faster processing, and commercial licensing. If pricing lands within 10-20% of comparable tools, the quality and speed advantages likely justify any premium for professional users.

Who Should Use Nano Banana 2?

Beginners and Casual Users

If you’re exploring AI image generation for personal projects, social media posts, or creative experimentation, Nano Banana 1 remains perfectly adequate. The original version handles quick ideation sketches, heavily stylized art, and content where pixel-perfect accuracy doesn’t matter.

Stick with version 1 if you’re working on older hardware, operating under tight budgets, or generating throwaway content with short lifespans. For meme creation, rough concept exploration, or content that will be heavily post-processed anyway, version 1’s lower costs make financial sense.

Consider upgrading to Nano Banana 2 when you consistently encounter color accuracy frustrations, spend excessive time hunting through generations for acceptable results, or need text that actually reads correctly. If your rejection rate exceeds 30-40% with version 1, the upgrade will likely save time and frustration.

Professional and Power Users

Professional designers, marketers, product photographers, and content creators working on client projects or commercial applications should strongly consider Nano Banana 2 when it launches publicly. The quality improvements directly translate to reduced retouching time, faster client approval cycles, and more predictable project timelines.

Upgrade immediately if you require accurate brand colors and crisp in-scene text, generate batch catalogs where consistency matters, run rapid A/B testing requiring character continuity, or face iteration speed bottlenecks during reviews. The compound time savings across real production workflows justify premium pricing for professional contexts.

Decision Checklist

Choose Nano Banana 2 if you answer “yes” to these questions:

- Do projects require brand-accurate colors matching specific HEX values?

- Is legible small text essential for your outputs (product packaging, infographics, mockups)?

- Are you currently rejecting more than 30% of generations due to quality issues?

- Does iteration speed directly impact project deadlines or client satisfaction?

- Will you generate batches where consistency across outputs matters?

- Do your use cases justify spending 10-20% more per image for better results?

Stick with version 1 if:

- You primarily generate content for rapid consumption (24-hour social posts)

- Heavy post-processing will occur regardless of initial quality

- Hardware limitations or budget constraints are primary concerns

- Stylistic exploration matters more than precise execution

Cost-Benefit Framework

Calculate your effective cost per usable image, not just generation cost. If version 1 costs $0.10 per generation but only 40% are acceptable, your effective cost is $0.25 per keeper. If Nano Banana 2 costs $0.12 per generation with 75% acceptance, your effective cost drops to $0.16 per keeper—cheaper despite higher nominal pricing.
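The arithmetic above is easy to reuse with your own numbers. This short sketch computes the effective cost per accepted image and, as an extra, the break-even price at which a higher-acceptance model costs the same per keeper. The prices and acceptance rates are the article's illustrative figures, not announced pricing, and the function names are hypothetical.

```python
def cost_per_keeper(cost_per_generation: float, acceptance_rate: float) -> float:
    """Effective cost of one accepted image."""
    if not 0 < acceptance_rate <= 1:
        raise ValueError("acceptance_rate must be in (0, 1]")
    return cost_per_generation / acceptance_rate


def break_even_price(old_cost: float, old_rate: float, new_rate: float) -> float:
    """Per-generation price at which the new model matches the old cost per keeper."""
    return cost_per_keeper(old_cost, old_rate) * new_rate


if __name__ == "__main__":
    print(round(cost_per_keeper(0.10, 0.40), 4))         # version 1 example
    print(round(cost_per_keeper(0.12, 0.75), 4))         # Nano Banana 2 example
    print(round(break_even_price(0.10, 0.40, 0.75), 4))  # price ceiling for parity
```

With these example figures, the newer model would stay cheaper per keeper at any per-generation price below about $0.19.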

Factor in time savings beyond raw generation costs. If each approved asset saves an hour of retouching, and Nano Banana 2 doubles your acceptance rate, the labor savings dwarf any subscription premium. For agencies billing hourly, faster iteration directly increases profit margins.

How to Access Nano Banana 2

Current Access Methods

Nano Banana 2 currently appears through preview access on select platforms. Mixboard and https://banananano.ai/ have offered limited testing opportunities, though availability fluctuates as Google manages rollout phases. The original Nano Banana model remains accessible through LM Arena’s battle mode, where it appears randomly alongside other models.

Preview platforms like Gempix2.ai have announced plans to offer free access immediately upon official release. These platforms typically provide limited daily generations for free accounts with premium tiers for higher resolution, faster processing, and commercial licensing.

Expected Release Timeline

Based on UI leaks and developer activity patterns, Nano Banana 2 was initially expected around November 11, 2025, though some sources suggest slight delays to November 18-20, 2025. Announcement cards appearing in the Gemini interface typically signal public rollout within days.

Google hasn’t issued official announcements regarding pricing structure, API access, or enterprise licensing. Monitor Google’s AI blog and Gemini platform announcements for official launch details.

Integration Possibilities

For creators combining static and motion content, Gaga AI offers complementary video generation capabilities that work seamlessly alongside Nano Banana 2 image outputs. Generate brand-accurate product images with precise color matching, then animate those images into marketing videos maintaining visual consistency.

The workflow integration allows maintaining consistent character designs across image and video assets, matching color grading between static and motion content, and coordinating release timing for multimedia campaigns. As both tools continue developing, expect deeper integration enabling one-click transitions from image generation to video animation.

Free Tier vs Premium Options

While official pricing remains unannounced, expect tiered structures similar to other Google AI products. Free tiers will likely include limited daily generations (perhaps 50-100), standard resolution output (1K-2K), and basic commercial usage rights. Premium tiers should offer unlimited or high-volume generation, 4K output options, priority processing, advanced editing features, and full commercial licensing.

For professional workflows, premium subscriptions will likely justify costs through time savings, higher output quality, and reduced post-production requirements. Evaluate tiers based on your monthly generation volume, resolution requirements, and commercial licensing needs.

Conclusion

Nano Banana 2 represents more than incremental improvement; it’s a fundamental leap in AI image generation capability. Finally passing the notoriously difficult clock-and-wine test signals deeper reasoning abilities that permeate every aspect of the model’s performance. From up to 3× better instruction accuracy to native 2K output with 4K upscaling, from sub-10-second generation times to closer brand-color matching, Google Nano Banana has addressed the pain points that limited professional adoption of AI image tools.

The multi-step generation workflow (planning, creating, reviewing, correcting, and iterating) mimics how skilled designers approach visual challenges. This architectural sophistication delivers practical benefits: fewer failed generations, more predictable results, faster approval cycles, and dramatically reduced post-production requirements. For professionals whose time translates directly into money, these improvements justify premium pricing many times over.

The image-to-image and text-to-image capabilities open creative possibilities previously constrained by technological limitations. Edit photographs with natural language precision. Generate product mockups with brand-accurate colors. Create educational content with legible text annotations. Produce marketing materials that maintain character consistency across dozens of variations. These workflows were theoretically possible with earlier tools but frustrating in practice; Nano Banana 2 makes them reliably executable.

Looking forward, the integration between Nano Banana AI’s image capabilities and Gaga AI’s video generation creates comprehensive visual content pipelines. Generate static assets with precision, animate them with consistent quality, and maintain brand aesthetics across mixed-media campaigns—all without switching between disconnected tools or compromising on quality.

Whether you’re a content creator seeking faster iteration, a product designer demanding color accuracy, or a marketing professional managing complex campaigns, Nano Banana 2 deserves serious evaluation. The compound time savings, quality improvements, and workflow efficiencies represent genuine competitive advantages in increasingly visual-first digital landscapes.

Ready to transform your visual content workflow? Experience Gaga AI for professional video generation, and stay tuned for Nano Banana 2’s public release to complete your end-to-end creative production pipeline. The future of AI-powered visual content isn’t coming—it’s already here.

Frequently Asked Questions

What’s the difference between Nano Banana 2 and Nano Banana 1?

Nano Banana 2 delivers 3× better instruction-following accuracy, native 2K output with 4K upscaling, breakthrough text rendering with minimal errors, and sub-10-second generation times. The revolutionary multi-step workflow—planning, generating, reviewing, correcting, and iterating—produces cleaner edges, truer colors, and more consistent results. Version 1 remains suitable for casual use, but version 2’s quality improvements justify upgrades for professional workflows where brand accuracy, legible text, and rapid iteration matter.

Is Nano Banana 2 free to use?

Nano Banana 2 will likely offer free limited access similar to other Google AI products, with daily generation caps and standard resolution outputs. Premium tiers should provide unlimited generations, 4K resolution, priority processing, and full commercial licensing. Preview platforms like Gempix2.ai have announced plans to offer free access as soon as the model is officially released. Exact pricing awaits Google’s official announcement, which is expected around November 18-20, 2025.

What does “image to image” mean in Nano Banana 2?

Image-to-image editing transforms existing photos through natural language commands while preserving scene integrity. Upload an image and describe the desired changes: “change the water to pink,” “replace the shirt with a denim jacket,” “add Iron Man to the magazine cover.” Nano Banana AI understands 3D spatial relationships, adjusts lighting appropriately, and maintains consistent style. This context-aware approach eliminates the artificial “pasted-on” look from simpler editors, enabling professional-quality modifications through simple text instructions.

How does text to image work in Nano Banana 2?

Text-to-image generation creates complete images from written descriptions. Describe a scene, such as “cyberpunk hacker robot among monitors with neon lighting,” and Nano Banana 2 generates photorealistic or stylized visuals matching your specifications. Enhanced world knowledge means the model understands brand logos, architectural styles, cultural references, and technical photography terms (35mm, macro, rim light). The deep reasoning capabilities interpret nuanced prompts accurately, following composition, lighting, color, and style instructions reliably without the prompt drift that plagued earlier models.

Can Nano Banana 2 generate accurate text in images?

Yes—this represents Nano Banana 2’s most dramatic breakthrough. The model accurately renders complex text scenarios that stump competitors: Windows desktop screenshots with readable browser content, news broadcasts with correct chyron text, website mockups with dense paragraphs, and mathematical equations on blackboards. While occasional minor typos occur in extremely dense text environments, accuracy far exceeds version 1 and competing models. Multi-language support and varied font styles work reliably, eliminating post-production text addition for most professional applications.

What’s the best AI tool for video generation?

Gaga AI offers the most accessible and efficient AI video generation for professional workflows, combining text-to-video creation, image-to-video animation, and intelligent editing through natural language commands. The platform excels at marketing videos, social content, product demonstrations, and explainer animations with faster rendering than competitors and better motion consistency across frames. For creators managing both static images and motion content, Gaga AI integrates seamlessly with Nano Banana 2, maintaining visual consistency across mixed-media campaigns while offering practical speed and accessibility advantages over alternatives like Sora or Veo 3.1.

When will Nano Banana 2 be publicly available?

Based on UI leaks, developer activity, and announcement card appearances in Gemini interfaces, Nano Banana 2 is expected to launch publicly around November 18-20, 2025. Preview platforms like Gempix2.ai plan immediate free access upon release. Google hasn’t issued official announcements yet, but the rapid progression from Media.io preview testing to interface integration suggests imminent public availability. Monitor Google’s AI blog and Gemini platform updates for official confirmation and detailed feature specifications.