While AI has mastered the art of the short clip, the dream of AI-generated cinematic narratives has remained elusive… until now. We have seen countless AI tools generate stunning 5-second visuals, but ask them to tell a story with consistent characters across multiple cuts, and the illusion breaks.

Enter HoloCine, a groundbreaking open-source text-to-video model from researchers at HKUST and Ant Group. Unlike its predecessors, HoloCine isn’t just a clip generator; it is a coherent, multi-shot video narrative engine.

The “narrative gap” (the inability of AI to maintain character persistence and story flow between shots) has been the biggest hurdle in AI filmmaking. HoloCine marks a pivotal shift, moving us beyond simple clip synthesis toward automated cinematic storytelling. Here is why this model is a game-changer for filmmakers and creators alike.


What is HoloCine? Bridging the Narrative Gap

At its core, HoloCine is a holistic text-to-video model. Instead of generating one shot and then trying to “stitch” the next shot onto it (which often introduces inconsistencies), HoloCine generates an entire multi-shot sequence in a single pass. This is the source of its “global consistency”: every shot is produced with the whole scene in view, so the beginning, middle, and end of your scene are shaped together rather than one shot at a time.

To achieve this, HoloCine utilizes two key architectural innovations:

- Window Cross-Attention (The Director): This mechanism allows for precise directorial control. It localizes your text prompts to specific shots. If you ask for a “close-up” in shot 1 and a “wide shot” in shot 2, HoloCine ensures those instructions don’t bleed into each other.

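- Sparse Inter-Shot Self-Attention (The Editor): Generating long videos is computationally expensive. This mechanism keeps the model efficient by making attention “dense” within a shot (every part of the shot can attend to every other part, preserving detail) but “sparse” between shots (only a limited set of connections links different shots), so the cost does not explode as more shots are added.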
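To make the two mechanisms more concrete, here is a toy sketch that builds boolean attention masks for a tiny multi-shot video. It is not HoloCine’s code: the function names are hypothetical, the text layout (global caption first, then one caption per shot) is an assumption, and treating the first few tokens of each shot as its cross-shot “summary” is one plausible sparse scheme chosen for illustration. Real models apply such patterns inside fused attention kernels rather than Python lists.

```python
"""Toy boolean masks for window cross-attention and sparse inter-shot attention."""


def shot_index(lengths: list[int]) -> list[int]:
    """Shot number of every token when shots are laid end to end."""
    return [shot for shot, n in enumerate(lengths) for _ in range(n)]


def window_cross_attention_mask(
    video_lengths: list[int], caption_lengths: list[int], global_len: int
) -> list[list[bool]]:
    """mask[q][k] is True if video token q may attend to text token k.

    Assumed text layout: [global caption][shot 0 caption][shot 1 caption]...
    Global tokens (owner -1) are visible to every shot; each shot caption
    is visible only to the video tokens of its own shot.
    """
    if len(video_lengths) != len(caption_lengths):
        raise ValueError("need exactly one caption per shot")
    text_owner = [-1] * global_len + shot_index(caption_lengths)
    return [
        [owner in (-1, shot) for owner in text_owner]
        for shot in shot_index(video_lengths)
    ]


def sparse_inter_shot_mask(
    video_lengths: list[int], summary_per_shot: int = 1
) -> list[list[bool]]:
    """mask[q][k] is True if video token q may attend to video token k.

    Dense inside a shot; across shots, only the first `summary_per_shot`
    tokens of each shot (an assumed stand-in for a per-shot summary) are visible.
    """
    shot = shot_index(video_lengths)
    pos = [i for n in video_lengths for i in range(n)]  # position inside its own shot
    n_tokens = len(shot)
    return [
        [shot[q] == shot[k] or pos[k] < summary_per_shot for k in range(n_tokens)]
        for q in range(n_tokens)
    ]


if __name__ == "__main__":
    # Two shots of 2 and 3 video tokens; a 1-token global caption and
    # shot captions of 2 and 1 tokens. "x" = allowed, "." = masked out.
    for row in window_cross_attention_mask([2, 3], [2, 1], global_len=1):
        print("".join("x" if ok else "." for ok in row))
    print()
    for row in sparse_inter_shot_mask([2, 3], summary_per_shot=1):
        print("".join("x" if ok else "." for ok in row))
```

In the first mask, shot 0’s tokens see the global caption and their own two caption tokens but not shot 1’s caption, which is the “no bleeding between shots” property. In the second, each shot is fully connected internally and reaches the other shot only through its single summary token.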

The Result: The “narrative gap” is effectively bridged. HoloCine maintains character identity, environmental details, and lighting consistency across multiple cuts, something that was previously nearly impossible without complex manual workflows.

HoloCine’s Remarkable Emergent Abilities

HoloCine isn’t just following instructions; it appears to be learning the language of cinema.

Persistent Memory

One of the most frustrating aspects of AI video has been “morphing”—where a character’s shirt changes color or a prop disappears after a cut. HoloCine possesses persistent memory, allowing it to “remember” that the protagonist is wearing a red scarf in the first shot and ensure they are still wearing it in the third shot, even after a change in camera angle.

Intuitive Grasp of Cinematic Techniques

HoloCine understands standard cinematographic commands without needing complex workarounds.

- Shot-Reverse-Shot: It can handle dialogue scenes by switching angles between characters.

- Camera Angles: It distinguishes between close-ups, medium shots, and wide shots with high fidelity.

- Camera Movements: Pans, zooms, and tracking shots are executed with smooth motion.

This turns the AI into a collaborative “director’s assistant” rather than just a random clip generator.

Minute-Level Generation

Because its sparse inter-shot attention keeps compute in check as shots are added, HoloCine can generate minute-scale narratives. This is a significant leap from the few-second generation windows typical of most models, opening the door for complex story arcs and longer scenes to be generated in a single pass.
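A back-of-the-envelope count shows why sparsity matters for long videos. The sketch below counts query-key pairs for full attention over the whole video versus a scheme that is dense within each shot and exposes only a few summary tokens across shots. The token counts are made-up illustrative numbers, not HoloCine’s actual configuration, and the summary-token scheme is an assumption.

```python
def dense_pairs(num_shots: int, tokens_per_shot: int) -> int:
    """Every token attends to every token in the whole video."""
    total = num_shots * tokens_per_shot
    return total * total


def sparse_pairs(num_shots: int, tokens_per_shot: int, summary_per_shot: int) -> int:
    """Each token sees its own shot fully plus a few summary tokens per other shot."""
    per_query = tokens_per_shot + (num_shots - 1) * summary_per_shot
    return num_shots * tokens_per_shot * per_query


if __name__ == "__main__":
    for shots in (8, 16):
        d = dense_pairs(shots, 1000)
        s = sparse_pairs(shots, 1000, 50)
        print(f"{shots} shots: dense={d:,} sparse={s:,} ratio={d / s:.1f}x")
```

This prints `8 shots: dense=64,000,000 sparse=10,800,000 ratio=5.9x` and `16 shots: dense=256,000,000 sparse=28,000,000 ratio=9.1x`. Doubling the number of shots quadruples the dense cost but less than triples the sparse one, and the gap keeps widening as videos get longer.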

HoloCine vs. The Competition: A New State-of-the-Art

How does HoloCine stack up against the giants of the industry?

| Feature | HoloCine | Commercial Models (e.g., Kling) | Sora 2 / Veo 3 |
| --- | --- | --- | --- |
| Primary Focus | Multi-shot Narratives | Single Clip Quality | High-Fidelity Physics |
| Consistency | High (Global Attention) | Varies across clips | Very High |
| Accessibility | Open Source | Paid / Closed API | Closed / Waitlist |
| Control | Precise Shot-Level | Prompt Dependent | Natural Language |

- Open-Source vs. Commercial: While models like Kling produce beautiful individual clips, they often struggle to link them into a story.

- The Sora 2 Comparison: For an open-source initiative, HoloCine punches well above its weight. Its narrative coherence rivals that of industry leaders like Sora 2, putting high-end storytelling tools within reach of developers and independent creators.

How You Can Experience HoloCine

For Developers & AI Enthusiasts

The true power of HoloCine lies in its open availability. The team has released the HoloCine-14B model (built on the Wan 2.2 base) in both “full” and “sparse” versions.

- Get Started: You can clone the repository from GitHub. You will likely need an environment with FlashAttention enabled to handle the sparse attention mechanisms efficiently.

- Prompting: You can use “Structured Prompts” (a global caption + specific shot captions) to exert maximum directorial control.

Example Structured Prompt:

Global: A detective investigates a rainy alleyway.

Shot 1: Close-up of boots stepping into a puddle.

Shot 2: Wide shot of the detective looking up at a neon sign.

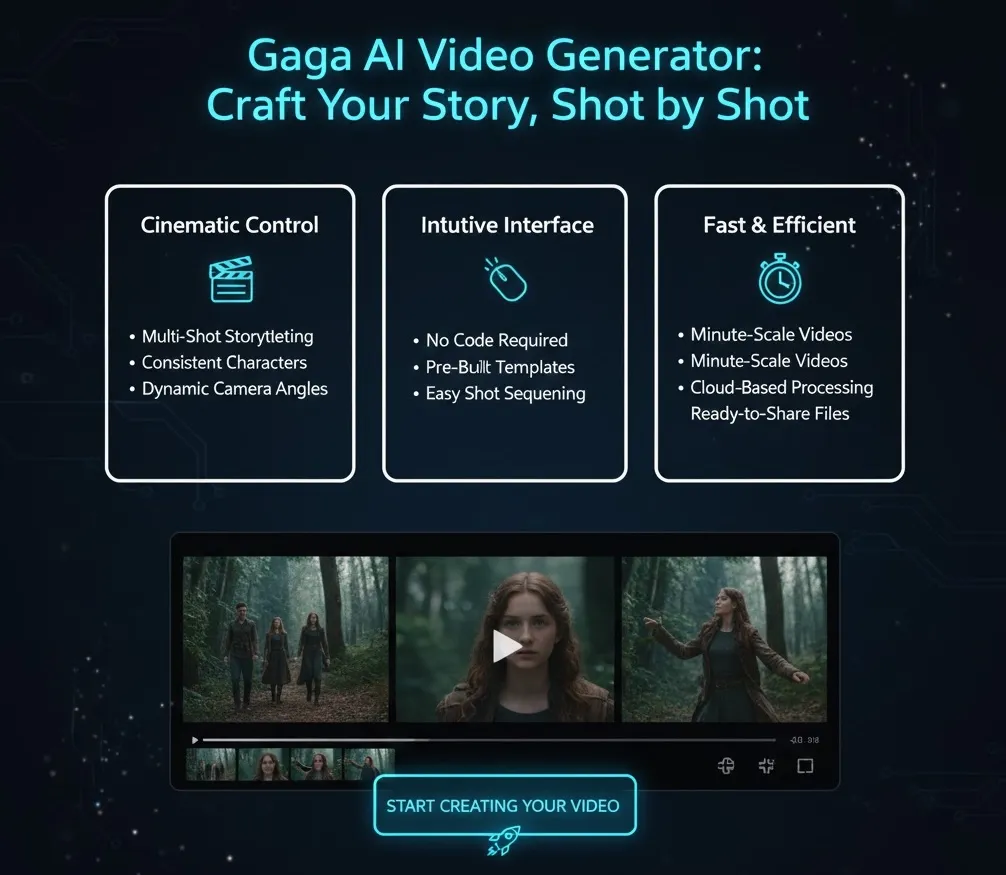
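If you generate many prompts programmatically, it helps to keep the global caption and shot captions as structured data and flatten them only at the end. The sketch below does that for the detective example. The tag strings are hypothetical placeholders; check the HoloCine repository’s README for the exact delimiters and spacing its inference scripts expect before relying on this format.

```python
from dataclasses import dataclass, field

# Hypothetical delimiters: verify against the HoloCine repository's README.
GLOBAL_TAG = "[global caption]"
SHOT_TAG = "[per shot caption]"
CUT_TAG = "[shot cut]"


@dataclass
class StructuredPrompt:
    global_caption: str
    shot_captions: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Flatten the global caption and per-shot captions into one string."""
        if not self.shot_captions:
            raise ValueError("a structured prompt needs at least one shot caption")
        shots = f" {CUT_TAG} ".join(c.strip() for c in self.shot_captions)
        return f"{GLOBAL_TAG} {self.global_caption.strip()} {SHOT_TAG} {shots}"


if __name__ == "__main__":
    prompt = StructuredPrompt(
        global_caption="A detective investigates a rainy alleyway.",
        shot_captions=[
            "Close-up of boots stepping into a puddle.",
            "Wide shot of the detective looking up at a neon sign.",
        ],
    )
    print(prompt.render())
```

Keeping shots as a list makes it easy to reorder, add, or drop shots without hand-editing delimiters, and the empty-list check catches a prompt that would otherwise carry no shot-level direction at all.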

For Creators: The Gaga AI Video Generator

Not a coder? No problem. You don’t need to set up a Python environment to use cinematic AI.

Gaga AI has integrated the principles of advanced narrative generation into our platform. For creators, marketers, and businesses looking for an immediate, hassle-free way to generate cinematic videos, the Gaga AI Video Generator is your go-to solution. We handle the complex compute so you can focus on the story.

Final Words

HoloCine represents more than just a new model; it is a paradigm shift. We are moving away from the era of disjointed “clip synthesis” and entering the age of true cinematic storytelling. For independent filmmakers and content creators, this means the barrier to entry for high-quality production is lowering every day.

At Gaga AI, we are committed to bringing these cutting-edge innovations from the research lab directly to your creative toolkit.

What’s your take? Is multi-shot consistency the missing link you’ve been waiting for? Let us know, or try generating your first narrative on Gaga AI now.

FAQ

What is HoloCine?

HoloCine is an open-source AI video generation model developed by researchers at HKUST and Ant Group. It specializes in creating coherent, multi-shot video narratives rather than just single clips.

How does HoloCine differ from other AI video generators?

Unlike most models that generate one clip at a time, HoloCine uses a “holistic” approach, generating multiple shots simultaneously to ensure characters and backgrounds remain consistent throughout the video.

Can HoloCine maintain character consistency across shots?

Yes. Thanks to its global consistency architecture and “persistent memory,” HoloCine excels at keeping character features (like clothing and faces) consistent across different camera angles and cuts.

Is HoloCine free to use?

The code and model weights are open-source and free for developers to use (requires GPU hardware). For non-technical users, platforms like Gaga AI offer accessible interfaces to similar technology.

What are the system requirements for HoloCine?

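Running HoloCine-14B locally generally requires a high-end GPU with significant VRAM (e.g., NVIDIA A100 or H100). The “sparse” version reduces the compute and memory spent on attention, but it has the same 14B parameters, so running it on consumer hardware still depends on further optimizations such as offloading or quantization.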
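A quick calculation shows where the VRAM goes. The sketch estimates the memory needed just to hold 14 billion parameters at common precisions; it assumes the nominal parameter count from the model name and ignores activations, the text encoder, and the VAE, all of which add to the real footprint.

```python
def weight_memory_gib(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the weights, in GiB (2**30 bytes)."""
    return num_params * bytes_per_param / 2**30


if __name__ == "__main__":
    params = 14e9  # nominal size taken from the model name (assumption)
    for dtype, nbytes in (("fp32", 4), ("bf16", 2), ("int8", 1)):
        print(f"{dtype}: {weight_memory_gib(params, nbytes):.1f} GiB")
```

This prints `fp32: 52.2 GiB`, `bf16: 26.1 GiB`, and `int8: 13.0 GiB`. Even at bf16 the weights alone exceed a 24 GB consumer card, which is why sparse attention by itself is not enough and offloading or quantization comes into play.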

How long can HoloCine videos be?

HoloCine is designed for “minute-scale” generation, meaning it can generate coherent narratives that last significantly longer than the standard 5-10 second clips seen in other models.