AI video generation models have become one of the most transformative innovations of the 2020s. From short, GIF-like animated clips to today’s realistic, cinematic-quality productions, the technology is redefining content creation across industries.

With OpenAI’s Sora 2, Google’s Veo 3, and Alibaba’s open-source Wan 2.5, the competition is fierce. Each new release pushes the boundaries of realism, motion dynamics, and accessibility. Yet a new challenger, Gaga AI with its GAGA-1 model, is quickly earning a reputation for making AI video generation accessible, character-driven, and affordable for creators worldwide.

In this article, we’ll explore:

- The evolution of the AI video generation model.

- The 2025 landscape of top models, from Sora 2 to Kling 2.1.

- A comparative analysis of Sora 2, Veo 3, and Gaga AI.

- The role of open source vs. proprietary approaches.

- Why Gaga AI may be the best AI video generation model for creators today.

The Evolution of the AI Video Generation Model

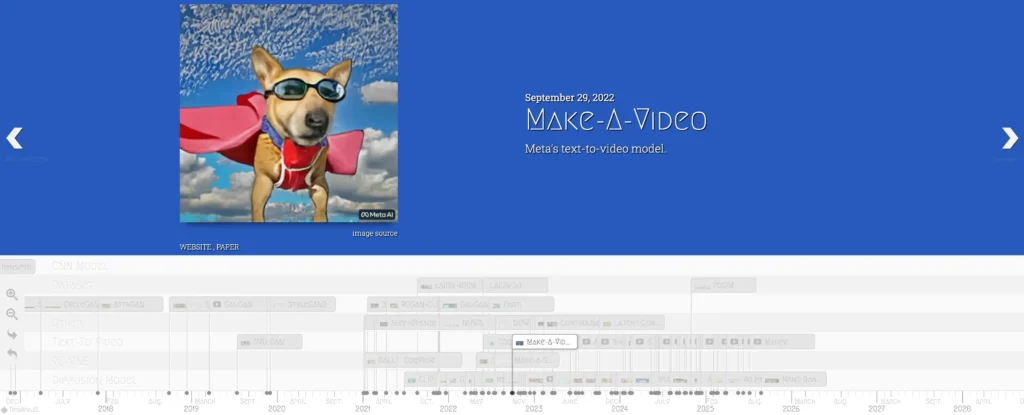

AI video generation has evolved quickly, with groundbreaking releases arriving every few months. The timeline below traces how the field progressed from early experiments to today’s advanced, multimodal video AI systems.

2022 – Early Foundations

- CogVideo (2022) → One of the first large-scale text-to-video models, laying the foundation for later generations.

- Make-A-Video by Meta (2022) → Meta’s early entry into text-to-video, capable of generating short animated clips from text prompts.

- Phenaki (2022) → Introduced the ability to generate longer, coherent video sequences from text descriptions.

- Imagen Video by Google (2022) → Showed Google’s early experiments with high-quality text-to-video outputs.

Early 2023 – From Research to Public Tools

- Gen-1 by RunwayML – March 27, 2023 → A video-to-video model allowing users to edit videos with generative visuals using text or image prompts.

- Gen-2 by RunwayML – March 20, 2023 (announced just before Gen-1’s public launch) → A text-to-video model built on the same research as Gen-1, marking Runway’s shift to text-first video generation.

- ModelScope Text2Video – Early 2023 → Released by Alibaba, this model generated short 2-second clips from English prompts, becoming a popular open-source baseline.

- NUWA-XL – March 22, 2023 → Microsoft’s multimodal model capable of generating longer, high-quality videos using diffusion architectures.

Mid–Late 2023 – Open Source Acceleration

- Zeroscope – June 3, 2023 → Open-source text-to-video model based on ModelScope, with different versions for quality improvements.

- Potat1 – June 5, 2023 → The first open-source model generating 1024×576 resolution videos, by Camenduru.

- Pika Labs – June 28, 2023 → Gained traction on Discord as an accessible text-to-video generator. Later announced Pika 1.0 in November 2023.

- AnimateDiff – July 10, 2023 → Added animation capabilities by adapting Stable Diffusion models to motion.

- Show-1 – September 27, 2023 → Released by NUS ShowLab, improving GPU efficiency for video generation.

- MagicAnimate – November 27, 2023 → Allowed subject transfer from still images into motion sequences.

2024 – The Year of Breakthroughs

- Pixverse – January 15, 2024 → Became popular for its ease of use, growing into a large creator platform.

- Lumiere by Google – January 23, 2024 → A diffusion-based video generator with advanced temporal consistency.

- Boximator – February 13, 2024 → ByteDance plug-in allowing motion control with bounding boxes.

- Sora by OpenAI – February 15, 2024 → Major milestone: generated up to one minute of hyper-realistic video. Access was initially limited until the Sora Turbo release on December 9, 2024.

- Snap Video – February 22, 2024 → Snapchat’s entry into generative video.

- Veo – May 14, 2024 → Google’s powerful text-to-video model, supporting text, image, and video input.

- ToonCrafter – May 28, 2024 → Specialized in cartoon interpolation and sketch colorization.

- KLING – June 6, 2024 → By Kuaishou; first serious competitor to Sora, generating up to 2 minutes of video.

- Dream Machine by Luma Labs – June 13, 2024 → An accessible text- and image-to-video model, released to the public.

- Gen-3 Alpha by Runway – June 17, 2024 → More stylistic control compared to Gen-1/Gen-2, limited to paying users.

- Vidu – July 31, 2024 → By Shengshu Technology & Tsinghua University.

- CogVideoX – August 6, 2024 → Open-source follow-up to CogVideo, capable of 6-second clips.

- Hailuo AI – September 1, 2024 → By MiniMax, improved prompt adherence and flexibility.

- Adobe Firefly Video – September 11, 2024 → Adobe’s safe, commercial-ready video model (waitlist only).

- Meta Movie Gen – October 4, 2024 → Meta’s tool for editing, face integration, and text-to-video.

- Pyramid Flow – October 10, 2024 → Open-source autoregressive method using Flow Matching.

- Oasis – October 31, 2024 → Interactive generative video with real-time user input, first of its kind.

- LTX-Video – November 22, 2024 → Open-source model producing smooth 24FPS video.

- Hunyuan by Tencent – December 3, 2024 → Tencent’s first generative video model, praised for open-source quality.

- Sora (Turbo Release) – December 9, 2024 → OpenAI’s long-awaited public release. Introduced a storyboard interface for sequential video creation.

- Veo 2 – December 16, 2024 → Google DeepMind’s upgrade, with stronger causality and prompt adherence.

2025 – Toward Next-Gen AI Video

- OmniHuman-1 – February 3, 2025 → By ByteDance, specializing in realistic lip-sync and human motion.

- VideoJAM – February 4, 2025 → Meta framework to improve motion realism in video generation.

- SkyReels V1 – February 18, 2025 → Fine-tuned on film/TV clips for cinematic quality.

- Wan (Wan 2.1) – February 22, 2025 → Open-source Alibaba model, highly customizable with LoRA fine-tuning.

- Runway Gen-4 – March 31, 2025 → Improved motion flexibility and reference-image integration.

- Veo 3 – May 20, 2025 → First major model to natively generate video + sound/voice in one pipeline.

- Seedance 1.0 – June 12, 2025 → By ByteDance, positioned as a cost-efficient Veo 3 competitor.

- Marey – July 8, 2025 → Closed model by Moonvalley & Asteria Film, trained only on licensed data.

- Sora 2 – September 30, 2025 → OpenAI’s second-generation model, pushing realism, consistency, and long-form storytelling beyond its predecessor.

In just three years, we’ve gone from blurry two-second clips to feature-quality storytelling tools.

The 2025 Landscape: Top AI Video Generation Models

Here’s a breakdown of today’s leading AI video generation models:

1. Sora 2 (OpenAI) – The Physics & Realism Leader

- Features: Physics-based accuracy, synchronized audio, cameo feature.

- Strengths: Stunning realism for short clips.

- Weakness: Limited access, costly API.

2. Veo 3 (Google/DeepMind) – The Cinematic Powerhouse

- Features: 4K cinematic quality, native audio, long-form ambition.

- Strengths: Deep integration into Google ecosystem.

- Weakness: Premium, often waitlisted.

3. Gaga AI (Gaga.art) – The Creator’s Choice

- Model: GAGA-1, powered by the Magi-1 autoregressive architecture.

- Features:

  - Consistent characters across videos.

  - Emotional realism: facial expressions match the dialogue.

  - Easy workflow (upload an image and add a text prompt).

  - Affordable or free access with generous credits.

- Why It Stands Out: Gaga AI democratizes AI video generation by removing high entry barriers while still producing professional results.

4. Runway Gen-4 (Aleph) – The VFX & Editing Hub

- Strong in video-to-video editing, effects, and professional post-production.

5. Seedance 1.0 (ByteDance) – The Multi-Shot Specialist

- Efficient, affordable, optimized for storytelling across multiple shots.

6. Hailuo AI (MiniMax) – The Short-Form Director’s Tool

- Generates polished 6-second cinematic clips with director controls.

7. Wan 2.5 (Alibaba) – The Open-Source Pioneer

- Globally available and customizable.

8. Kling 2.1 (Kuaishou) – The Long-Form Challenger

- Clips up to 2 minutes.

- OpenPose skeleton prompting for dance/pose videos.

9. OmniHuman 1.5 (ByteDance) – Human Motion & Lip-Sync Specialist

Choosing the Right Model: Sora 2 vs. Veo 3 vs. Gaga AI

So, what is the best AI video generation model in 2025? It depends on your needs:

- Physics & Realism: Sora 2 leads for physical accuracy and polished visuals.

- Cinematic Quality & Integration: Veo 3 wins for filmmakers tied to Google’s ecosystem.

- Character-Driven & Accessible Content: Gaga AI is the strongest fit for creators who want expressive characters, emotional depth, and AI video generation without technical complexity.

Workflow:

- Gaga AI: Plug-and-play with image + prompt.

- Sora 2 & Veo 3: Complex APIs, high barrier to entry.

Cost & Access:

- Gaga AI: Free/affordable, open to all.

- Sora 2/Veo 3: Waitlists, premium subscription tiers.

Verdict: For most creators, Gaga AI is the best AI video generation model—especially for social, marketing, and character-driven storytelling.

Open Source vs. Proprietary AI Video Generation Model

Open-source models like Alibaba’s Wan are vital for driving innovation because anyone can customize and experiment with them. For most creators, however, running them comes with significant hurdles. Learning how to set up an AI video generation model locally requires specialized hardware (high-end GPUs), coding knowledge, ongoing maintenance, and, for fine-tuning, large datasets. That barrier to entry and resource intensity make local setups impractical for most content creators.

Proprietary, user-friendly platforms like Gaga AI abstract away this complexity. By offering an advanced AI video generation model (GAGA-1) through a simple web interface, Gaga AI lets creators produce high-quality AI video without investing in a data center or learning the command line.
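As a rough illustration of why running an open-source model locally calls for high-end GPUs, the Python sketch below estimates the memory needed just to hold a model’s weights. The parameter counts are hypothetical round numbers chosen for illustration, not the published sizes of any model named in this article. The estimate also ignores activations and any auxiliary components, so real requirements are likely higher.

```python
def weights_memory_gib(num_params: float, bytes_per_param: int = 2) -> float:
    """Estimate the memory (in GiB) needed to hold model weights alone.

    bytes_per_param defaults to 2, i.e. 16-bit precision (an assumption).
    """
    return num_params * bytes_per_param / 1024**3


# Hypothetical model sizes, for illustration only.
example_sizes = [
    ("1B-parameter model", 1e9),
    ("14B-parameter model", 14e9),
]

for label, params in example_sizes:
    gib = weights_memory_gib(params)
    print(f"{label}: ~{gib:.1f} GiB for weights in 16-bit precision")
```

Under these assumptions, the larger example needs more memory for its weights than many consumer graphics cards provide, which is one reason hosted web platforms are the easier route for most creators.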

In The End

The AI video generation model landscape is evolving at lightning speed. While Sora 2 and Veo 3 set benchmarks for realism and cinematic quality, they remain locked behind exclusive access and steep costs.

Gaga AI, with its GAGA-1 model, offers something different: a free or low-cost, creator-friendly, emotionally expressive platform that lets anyone tell stories through video.

If you’re ready to step into the future of content creation without technical roadblocks, Gaga AI is the AI video generation tool to try today.