Key Takeaways (BLUF)

- CoDance is a state-of-the-art AI framework for character image animation, capable of animating any number of subjects, of any type, in any spatial layout.

- It solves the core limitation of existing methods by removing rigid pose-to-image alignment through an Unbind–Rebind paradigm.

- CoDance achieves SOTA performance in video quality, identity preservation, and temporal consistency.

- It supports single-subject and multi-subject animation, long videos, music-driven motion, and non-human characters.

- The framework is built on Diffusion Transformers (DiT) and introduces a new benchmark: CoDanceBench.

Table of Contents

What Is CoDance?

CoDance is a generative AI framework designed to animate characters from static images using pose sequences, without requiring spatial alignment between the pose and the reference image.

Unlike earlier character animation methods, CoDance can animate multiple characters simultaneously, even when the driving pose does not spatially match the reference image.

In simple terms:

CoDance separates motion from location, then reassigns it intelligently.

This makes it uniquely capable of handling:

- Group dances

- Crowded scenes

- Characters with different shapes or proportions

- Complex layouts where pose and image don’t line up

Why Existing Character Animation Methods Fail

Most current animation models fail because they rely on rigid spatial binding.

The Core Problems

1. Single-subject limitation

Models like Animate Anyone or MagicAnimate are designed for one character only.

2. Pose-image misalignment

If the pose skeleton doesn’t match the image position exactly, results break.

3. Identity confusion

Motion is often applied to the background or the wrong character.

4. Poor scalability

Adding more characters dramatically degrades quality.

CoDance directly targets these failure points.

How CoDance Works: The Unbind–Rebind Framework

Bottom Line

CoDance works by first breaking the forced spatial relationship between pose and image, then rebuilding it using semantic and spatial guidance.

Step 1: What Does “Unbind” Mean in CoDance?

Unbind removes rigid pose-to-image alignment so the model learns motion as a semantic concept, not a pixel location.

Pose Shift Encoder (Key Innovation)

The Unbind module introduces a Pose Shift Encoder, consisting of:

1. Pose Unbind (Input Level)

- Randomly translates and scales skeleton poses

- Breaks physical alignment with the reference image

- Forces location-agnostic motion understanding

2. Feature Unbind (Feature Level)

- Randomly duplicates and overlays pose features

- Prevents diffusion model from memorizing spatial shortcuts

- Strengthens robustness in complex scenes

Result:

The model learns what the motion means, not where it happens.

Step 2: What Does “Rebind” Mean in CoDance?

Rebind reassigns learned motion to the correct subjects using semantic and spatial signals.

Semantic Rebind (Text-Guided Control)

- Uses text prompts to specify:

- Which subjects move

- How many subjects animate

- What type of characters they are

- Powered by umT5 encoder and cross-attention

- Trained with mixed data (animation + text-to-video)

Example:

“Five cartoon characters dancing together”

Spatial Rebind (Mask-Guided Precision)

- Uses SAM-generated subject masks

- Ensures motion stays inside correct regions

- Prevents background or object animation errors

Together, semantic + spatial rebind guarantee accurate control.

CoDance Architecture Overview

CoDance is built on a Diffusion Transformer (DiT) backbone, chosen for scalability and video generation performance.

Core Components

- DiT (Diffusion Transformer) as the main generator

- VAE Encoder for reference image latent extraction

- Pose Shift Encoder for Unbind operations

- Mask Encoder for spatial rebind

- umT5 Encoder for text understanding

- LoRA fine-tuning on pretrained T2V models

What Makes CoDance Unique

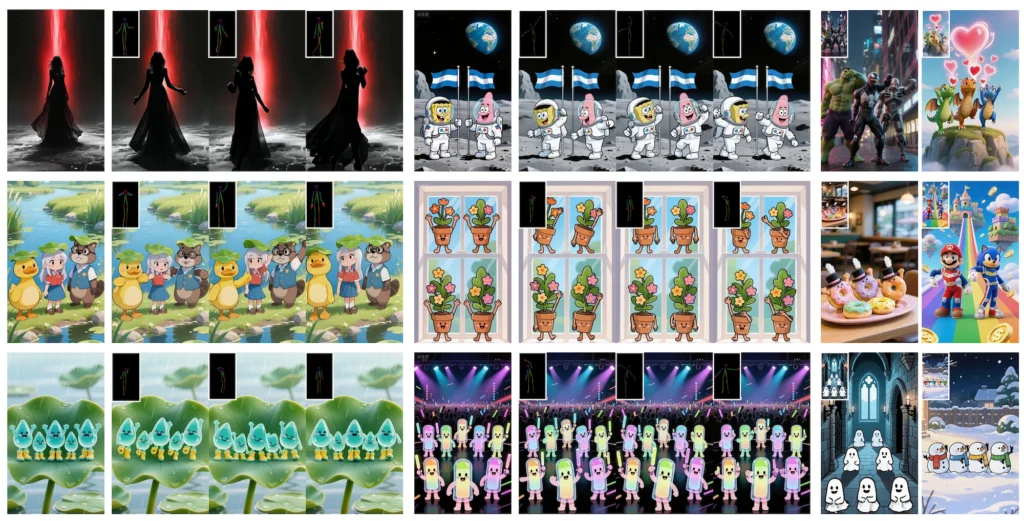

CoDance is the first framework to achieve four types of “arbitrary” animation simultaneously:

1. Arbitrary subject type (human, cartoon, non-human)

2. Arbitrary subject count (one or many)

3. Arbitrary spatial layout

4. Arbitrary pose sequence, even misaligned

This is why CoDance represents a paradigm shift, not an incremental upgrade.

Real-World Applications of CoDance

Character Animation in Games & Cartoons

- Animate multiple NPCs with one pose input

- No need for manual rig alignment

Celebrity & Influencer Animation

- Preserve facial identity accurately

- Avoid pose mismatch artifacts

Multi-Subject Dance Videos

- Coordinated group dances

- Long sequences synced to music

AI Video Content Creation

- Works with stylized and photorealistic characters

- Supports long-form storytelling

Performance: Why CoDance Is SOTA

CoDance outperforms all existing methods across key metrics.

Comparison with SOTA methods

User Study Results

| Metric | CoDance | Best Baseline |

| Video Quality | 0.90 | 0.79 |

| Identity Preservation | 0.88 | 0.50 |

| Temporal Consistency | 0.83 | 0.78 |

Benchmark Dominance

- CoDanceBench (new standard)

- Follow-Your-Pose-V2

CoDanceBench: Why It Matters

CoDanceBench is the first benchmark specifically designed for multi-subject animation.

It evaluates:

- Identity consistency across subjects

- Motion correctness

- Spatial robustness

This fills a major evaluation gap in the field.

Single-Subject vs Multi-Subject Animation with CoDance

| Feature | Traditional Methods | CoDance |

| Single subject | ✅ | ✅ |

| Multiple subjects | ❌ | ✅ |

| Misaligned pose | ❌ | ✅ |

| Text-controlled subjects | ❌ | ✅ |

| Non-human characters | Limited | Full |

Common Questions About CoDance (FAQ)

What is CoDance used for?

CoDance is used for animating characters from images using pose sequences, especially in multi-character and complex scenes.

Is CoDance better than Animate Anyone?

Yes. CoDance supports multiple subjects, spatial misalignment, and text-guided control, which Animate Anyone cannot handle.

Can CoDance animate long videos with music?

Yes. CoDance supports long temporal sequences and coordinated motion, making it suitable for music-driven animation.

Does CoDance work with non-human characters?

Yes. CoDance generalizes across humans, cartoons, and anthropomorphic characters.

Is CoDance open source?

The authors have stated that code and model weights will be open-sourced.

Bonus: How CoDance Complements Gaga AI Video Generator

Gaga AI is a powerful AI video generator focused on text-to-video creativity.

When paired conceptually with CoDance-style motion control, creators gain:

- Strong visual creativity from Gaga AI

- Precise character motion control inspired by CoDance

- Better multi-character storytelling

For creators, this represents the future of AI-native animation pipelines.

Final Thoughts

CoDance redefines what’s possible in AI character animation.

By abandoning rigid spatial alignment and introducing the Unbind–Rebind paradigm, it unlocks:

- True multi-subject animation

- Robust identity preservation

- Scalable, flexible video generation

For researchers, creators, and AI video platforms, CoDance is a foundational breakthrough.